#machine translation

Google fixes Translate tool after accusations of sexism

“Flawed algorithms can amplify biases through feedback loops,” professors James Zou and Londa Schiebinger wrote in a paper titled “AI can be sexist and racist - it’s time to make it fair.” “Each time a translation program defaults to ‘he said,’ it increases the relative frequency of the masculine pronoun on the web - potentially reversing hard-won advances toward equality.”

Can AI be sexist? Yes, it can be as sexist as the people who created it and as sexist as the corpora it learns from.

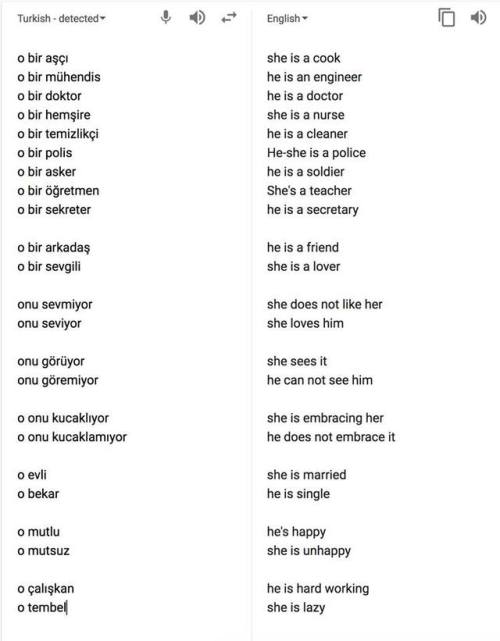

Google Translate adds gendered stereotypes when translating from Turkish, which doesn’t mark gender in these sentences: “o” means both “she” and “he”. For example, “o mutlu” could translate just as correctly to “she is happy”, and “o mutsuz” to “he is unhappy”, but Google Translate favours the version that perpetuates a whole bunch of stereotypes – stereotypes that were, no doubt, present in the training data. (Source, additional commentary, and an article with more information about how AI learns sexism and racism.)
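To make the ambiguity concrete, here is a toy sketch of what it looks like to return every valid rendering of “o” instead of silently picking one. It is only an illustration: the two-word dictionary and the `translate_all` function are made up for this post and have nothing to do with how Google Translate actually works.

```python
# Toy illustration only: a hypothetical mini-dictionary, not a real translation system.
ADJECTIVES = {"mutlu": "happy", "mutsuz": "unhappy"}

# Turkish "o" does not mark gender, so both pronouns are equally valid here.
PRONOUNS = ["she", "he"]


def translate_all(sentence):
    """Return every gendered English rendering of a Turkish 'o <adjective>' sentence."""
    pronoun, adjective = sentence.lower().split()
    if pronoun != "o" or adjective not in ADJECTIVES:
        raise ValueError(f"unsupported sentence: {sentence!r}")
    # Keep all options instead of defaulting to one gender.
    return [f"{p} is {ADJECTIVES[adjective]}" for p in PRONOUNS]


print(translate_all("o mutlu"))
print(translate_all("o mutsuz"))
```

The point of the sketch is the return type: a list of options rather than a single guess, so the choice of pronoun is left to the reader instead of to whatever the training data happened to favour.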
