#brain activity

“It was like red-hot pokers needling one side of my face,” says Catherine, recalling the cluster headaches she experienced for six years. “I just wanted it to stop.” But it wouldn’t – none of the drugs she tried had any effect.

Thinking she had nothing to lose, last year she enrolled in a pilot study to test a handheld device that applies a bolt of electricity to the neck, stimulating the vagus nerve – the superhighway that connects the brain to many of the body’s organs, including the heart.

The results of the trial were presented last month at the International Headache Congress in Boston, and while the trial is small, the findings are positive. Of the 21 volunteers, 18 reported a reduction in the severity and frequency of their headaches, rating them, on average, 50 per cent less painful after using the device daily and whenever they felt a headache coming on.

This isn’t the first time vagal nerve stimulation has been used as a treatment – but it is one of the first that hasn’t required surgery. Some people with epilepsy have had a small generator that sends regular electrical signals to the vagus nerve implanted into their chest. Implanted devices have also been approved to treat depression. What’s more, there is increasing evidence that such stimulation could treat many more disorders from headaches to stroke and possibly Alzheimer’s disease.

The latest study suggests it is possible to stimulate the nerve through the skin, rather than resorting to surgery. “What we’ve done is figured out a way to stimulate the vagus nerve with a very similar signal, but non-invasively through the neck,” says Bruce Simon, vice-president of research at New Jersey-based ElectroCore, makers of the handheld device. “It’s a simpler, less invasive way to stimulate the nerve.”

Cluster headaches are thought to be triggered by the overactivation of brain cells involved in pain processing. The neurotransmitter glutamate, which excites brain cells, is a prime suspect. ElectroCore turned to the vagus nerve as previous studies had shown that stimulating it in people with epilepsy releases neurotransmitters that dampen brain activity.

When the firm tested a smaller version of its device on rats, it found that the stimulation reduced glutamate levels and excitability in these pain centres. Other studies have shown that vagus nerve stimulation causes the release of inhibitory neurotransmitters which counter the effects of glutamate.

The big question is whether a non-implantable device can really trigger changes in brain chemistry in humans, or whether people are simply experiencing a placebo effect. “The vagus nerve is buried deep in the neck, and something that’s delivering currents through the skin can only go so deep,” says Mike Kilgard of the University of Texas at Dallas. As you turn up the voltage, there’s a risk of it activating muscle fibres that trigger painful cramps, he adds.

Simon says that volunteers using the device haven’t reported any serious side effects. He adds that ElectroCore will soon publish data showing changes in brain activity in humans after using the device. Placebo-controlled trials are also about to start.

Catherine has been using it for a year without ill effect. “I can now function properly as a human being again,” she says.

The many uses of the wonder nerve

Coma, irritable bowel syndrome, asthma and obesity are just some of the disparate conditions that vagus nerve stimulation may benefit and for which human trials are under way.

It might also help people with tinnitus. Although people with tinnitus complain of ringing in their ears, the problem actually arises because too many neurons fire in the auditory part of the brain when certain frequencies are heard.

Mike Kilgard of the University of Texas at Dallas reasoned that if people were played tones that didn’t trigger tinnitus while the vagus nerve was stimulated, this might coax the rogue neurons into firing in response to these frequencies instead. “By activating this nerve we can enhance the brain’s ability to rewire itself,” he says.

He has so far tested the method in rats and in 10 people with tinnitus, using an implanted device to stimulate the nerve. Not everyone noticed an improvement, but even so Kilgard is planning a larger trial. The work was presented at a meeting of the International Union of Physiological Sciences in Birmingham, UK, last month. The technique is also being tested in people who have had a stroke.

“If these studies stand up it could be worth changing the name of the vagus nerve to the wonder nerve,” says Sunny Ogbonnaya at Cork University Hospital in Ireland.

Autistic kids who best peers at math show different brain organization

Children with autism and average IQs consistently demonstrated superior math skills compared with nonautistic children in the same IQ range, according to a study by researchers at the Stanford University School of Medicine and Lucile Packard Children’s Hospital.

“There appears to be a unique pattern of brain organization that underlies superior problem-solving abilities in children with autism,” said Vinod Menon, PhD, professor of psychiatry and behavioral sciences and a member of the Child Health Research Institute at Packard Children’s.

The autistic children’s enhanced math abilities were tied to patterns of activation in a particular area of their brains — an area normally associated with recognizing faces and visual objects.

Menon is senior author of the study, published online Aug. 17 in Biological Psychiatry. Postdoctoral scholar Teresa Iuculano, PhD, is the lead author.

Children with autism have difficulty with social interactions, especially interpreting nonverbal cues in face-to-face conversations. They often engage in repetitive behaviors and have a restricted range of interests.

But in addition to such deficits, children with autism sometimes exhibit exceptional skills or talents, known as savant abilities. For example, some can instantly recall the day of the week of any calendar date within a particular range of years — for example, that May 21, 1982, was a Friday. And some display superior mathematical skills.

“Remembering calendar dates is probably not going to help you with academic and professional success,” Menon said. “But being able to solve numerical problems and developing good mathematical skills could make a big difference in the life of a child with autism.”

The idea that people with autism could employ such skills in jobs, and get satisfaction from doing so, has been gaining ground in recent years.

The participants in the study were 36 children, ages 7 to 12. Half had been diagnosed with autism. The other half was the control group. Each group had 14 boys and four girls. (Autism disproportionately affects boys.) All participants had IQs in the normal range and showed normal verbal and reading skills on standardized tests administered as part of the recruitment process for the study. But on the standardized math tests that were administered, the children with autism outperformed children in the control group.

After the math test, researchers interviewed the children to assess which types of problem-solving strategies each had used: Simply remembering an answer they already knew; counting on their fingers or in their heads; or breaking the problem down into components — a comparatively sophisticated method called decomposition. The children with autism displayed greater use of decomposition strategies, suggesting that more analytic strategies, rather than rote memory, were the source of their enhanced abilities.
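As an illustration of what decomposition can look like, the Python sketch below breaks an addition such as 8 + 7 into two easier steps that pass through a round number (8 + 2 = 10, then 10 + 5 = 15). This "bridging through ten" approach is only one common form of decomposition; the function name and the step format are assumptions made for this example, and the study's own scoring of strategies is not described here.

```python
def decompose_addition(a, b, base=10):
    """Split a + b into steps that pass through the next multiple of `base`.

    Returns a list of (left, right, result) tuples, one per step.
    """
    # Amount needed to bring `a` up to the next round number.
    to_next = (base - a % base) % base
    if to_next == 0 or to_next >= b:
        # `a` is already round, or adding `b` never crosses a round number.
        return [(a, b, a + b)]
    rounded = a + to_next
    return [(a, to_next, rounded), (rounded, b - to_next, a + b)]


for left, right, result in decompose_addition(8, 7):
    print(f"{left} + {right} = {result}")
```

A child who simply recalls "8 + 7 = 15" would skip the intermediate step; the decomposition route shows the analytic work explicitly.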

Then, the children worked on solving math problems while their brain activity was measured in an MRI scanner, in which they had to lie down and remain still. The brain scans of the autistic children revealed an unusual pattern of activity in the ventral temporal occipital cortex, an area specialized for processing visual objects, including faces.

“Our findings suggest that altered patterns of brain organization in areas typically devoted to face processing may underlie the ability of children with autism to develop specialized skills in numerical problem solving,” Iuculano said.

“These findings not only empirically confirm that high-functioning children with autism have especially strong number-problem-solving abilities, but show that this cognitive strength in math is based on different patterns of functional brain organization,” said Carl Feinstein, MD, director of the Center for Autism and Related Disorders at Packard Children’s and professor of psychiatry and behavioral sciences at the School of Medicine. He was not involved in the study.

Menon added that previous research “has focused almost exclusively on weaknesses in children with autism. Our study supports the idea that the atypical brain development in autism can lead, not just to deficits, but also to some remarkable cognitive strengths. We think this can be reassuring to parents.”

The research team is now gathering data from a larger group of children with autism to learn more about individual differences in their mathematical abilities. Menon emphasized that not all children with autism have superior math abilities, and that understanding the neural basis of variations in problem-solving abilities is an important topic for future research.

Remembering to Remember Supported by Two Distinct Brain Processes

You plan on shopping for groceries later and you tell yourself that you have to remember to take the grocery bags with you when you leave the house. Lo and behold, you reach the check-out counter and you realize you’ve forgotten the bags.

Remembering to remember — whether it’s grocery bags, appointments, or taking medications — is essential to our everyday lives. New research sheds light on two distinct brain processes that underlie this type of memory, known as prospective memory.

The research is published in Psychological Science, a journal of the Association for Psychological Science.

To investigate how prospective memory is processed in the brain, psychological scientist Mark McDaniel of Washington University in St. Louis and colleagues had participants lie in an fMRI scanner and asked them to press one of two buttons to indicate whether a word that popped up on a screen was a member of a designated category. In addition to this ongoing activity, participants were asked to try to remember to press a third button whenever a special target popped up. The task was designed to tap into participants’ prospective memory, or their ability to remember to take certain actions in response to specific future events.

When McDaniel and colleagues analyzed the fMRI data, they observed that two distinct brain activation patterns emerged when participants made the correct button press for a special target.

When the special target was not relevant to the ongoing activity — such as a syllable like “tor” — participants seemed to rely on top-down brain processes supported by the prefrontal cortex. In order to answer correctly when the special syllable flashed up on the screen, the participants had to sustain their attention and monitor for the special syllable throughout the entire task. In the grocery bag scenario, this would be like remembering to bring the grocery bags by constantly reminding yourself that you can’t forget them.

When the special target was integral to the ongoing activity—such as a whole word, like “table” — participants recruited a different set of brain regions, and they didn’t show sustained activation in these regions. The findings suggest that remembering what to do when the special target was a whole word didn’t require the same type of top-down monitoring. Instead, the target word seemed to act as an environmental cue that prompted participants to make the appropriate response – like reminding yourself to bring the grocery bags by leaving them near the front door.

“These findings suggest that people could make use of several different strategies to accomplish prospective memory tasks,” says McDaniel.

McDaniel and colleagues are continuing their research on prospective memory, examining how this phenomenon might change with age.

Researchers Link Brain Memory Signals to Blood Sugar Levels

A set of brain signals known to help memories form may also influence blood sugar levels, finds a new study in rats.

Researchers at NYU Grossman School of Medicine discovered that a peculiar signaling pattern in the brain region called the hippocampus, linked by past studies to memory formation, also influences metabolism, the process by which dietary nutrients are converted into blood sugar (glucose) and supplied to cells as an energy source.

The study revolves around brain cells called neurons that “fire” (generate electrical pulses) to pass on messages. Researchers in recent years discovered that populations of hippocampal neurons fire within milliseconds of each other in cycles. The firing pattern is called a “sharp wave ripple” for the shape it takes when captured graphically by EEG, a technology that records brain activity with electrodes.

Published online in Nature, the new study found that clusters of hippocampal sharp wave ripples were reliably followed within minutes by decreases in blood sugar levels in the bodies of rats. While the details need to be confirmed, the findings suggest that the ripples may regulate the timing of the release of hormones, possibly including insulin, by the pancreas and liver, as well as of other hormones by the pituitary gland.

“Our study is the first to show how clusters of brain cell firing in the hippocampus may directly regulate metabolism,” says senior study author György Buzsáki, MD, PhD, the Biggs Professor of Neuroscience in the Department of Neuroscience and Physiology at NYU Langone Health.

“We are not saying that the hippocampus is the only player in this process, but that the brain may have a say in it through sharp wave ripples,” says Dr. Buzsáki, also a faculty member in the Neuroscience Institute at NYU Langone.

Known to keep blood sugar at normal levels, insulin is released by pancreatic cells, not continually, but periodically in bursts. As sharp wave ripples mostly occur during non-rapid eye movement (NREM) sleep, the impact of sleep disturbance on sharp wave ripples may provide a mechanistic link between poor sleep and high blood sugar levels seen in type 2 diabetes, say the study authors.

Previous work by Dr. Buzsáki’s team had suggested that the sharp wave ripples are involved in permanently storing each day’s memories the same night during NREM sleep, and his 2019 study found that rats learned faster to navigate a maze when ripples were experimentally prolonged.

“Evidence suggests that the brain evolved, for reasons of efficiency, to use the same signals to achieve two very different functions in terms of memory and hormonal regulation,” says corresponding study author David Tingley, PhD, a postdoctoral scholar in Dr. Buzsáki’s lab.

Dual Role

The hippocampus is a good candidate brain region for multiple roles, say the researchers, because of its wiring to other brain regions, and because hippocampal neurons have many surface proteins (receptors) sensitive to hormone levels, so they can adjust their activity as part of feedback loops. The new findings suggest that hippocampal ripples reduce blood glucose levels as part of such a loop.

“Animals could have first developed a system to control hormone release in rhythmic cycles, but then applied the same mechanism to memory when they later developed a more complex brain,” adds Dr. Tingley.

The study data also suggest that hippocampal sharp wave ripple signals are conveyed to the hypothalamus, which is known to innervate and influence the pancreas and liver, but through an intermediate brain structure called the lateral septum. Researchers found that ripples may influence the lateral septum just by amplitude (the degree to which hippocampal neurons fire at once), not by the order in which the ripples are combined, which may encode memories as their signals reach the cortex.

In line with this theory, short-duration ripples that occurred in clusters of more than 30 per minute, as seen during NREM sleep, induced a decrease in peripheral glucose levels several times larger than isolated ripples. Importantly, silencing the lateral septum eliminated the impact of hippocampal sharp wave ripples on peripheral glucose.
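To make the clustering criterion concrete, here is a minimal Python sketch that counts ripple events in consecutive one-minute windows and flags the windows containing more than 30. The function names, the fixed-window counting, and the sample timestamps are illustrative assumptions, not the authors' analysis pipeline.

```python
from collections import Counter


def ripple_rate_per_minute(ripple_times_s):
    """Count ripple events in each consecutive 60-second window.

    ripple_times_s: iterable of event timestamps in seconds (hypothetical input).
    Returns a dict mapping minute index -> number of ripples in that minute.
    """
    return dict(Counter(int(t // 60) for t in ripple_times_s))


def clustered_minutes(ripple_times_s, threshold=30):
    """Return the minute indices whose ripple count exceeds the threshold."""
    rates = ripple_rate_per_minute(ripple_times_s)
    return sorted(m for m, n in rates.items() if n > threshold)


# Made-up timestamps: 40 ripples packed into minute 0, 5 spread over minute 1.
dense = [i * 1.5 for i in range(40)]        # 0.0 s to 58.5 s
sparse = [60 + i * 10.0 for i in range(5)]  # 60 s to 100 s
print(clustered_minutes(dense + sparse))    # only minute 0 is flagged
```

A real analysis would more likely use a sliding window over detected events, but fixed windows are enough to show the difference between clustered and isolated ripples.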

To confirm that hippocampal firing patterns caused the glucose level decrease, the team used a technology called optogenetics to artificially induce ripples by re-engineering hippocampal cells to include light-sensitive channels. Shining light on such cells through glass fibers induces ripples independent of the rat’s behavior or brain state (e.g., resting or waking). Similar to their natural counterparts, the synthetic ripples reduced sugar levels.

Moving forward, the research team will seek to extend its theory that several hormones could be affected by nightly sharp wave ripples, including through work in human patients. Future research may also reveal devices or therapies that can adjust ripples to lower blood sugar and improve memory, says Dr. Buzsáki.

The music of silence: imagining a song triggers similar brain activity to moments of mid-music silence

Imagining a song triggers brain activity similar to that seen during moments of silence in music, according to a pair of just-published studies in the Journal of Neuroscience (1,2).

The results collectively reveal how the brain continues responding to music, even when none is playing, and provide new insights into how human sensory predictions work.

Music is more than a sensory experience

When we listen to music, the brain attempts to predict what comes next. A surprise, such as a loud note or disharmonious chord, increases brain activity.

To isolate the brain’s prediction signal from the signal produced in response to the actual sensory experience, researchers used electroencephalograms (EEGs) to measure the brain activity of musicians while they listened to or imagined Bach piano melodies.

When imagining music, the musicians’ brain activity had the opposite electrical polarity to when they listened to it – indicating different brain activations – but the same type of activity as for imagery occurred in silent moments of the songs when people would have expected a note but there wasn’t one.

Explaining the significance of the results, Giovanni Di Liberto, Assistant Professor in Intelligent Systems in Trinity’s School of Computer Science and Statistics, said:

“There is no sensory input during silence and imagined music, so the neural activity we discovered is coming purely from the brain’s predictions e.g., the brain’s internal model of music. Even though the silent time-intervals do not have an input sound, we found consistent patterns of neural activity in those intervals, indicating that the brain reacts to both notes and silences of music.

“Ultimately, this underlines that music is more than a sensory experience for the brain as it engages the brain in a continuous attempt of predicting upcoming musical events. Our study has isolated the neural activity produced by that prediction process. And our results suggest that such prediction processes are at the foundation of both music listening and imagery.

“We used music listening in these studies to investigate brain mechanisms of sound processing and sensory prediction, but these curious findings have wider implications – from boosting our basic, fundamental scientific understanding, to applied settings such as in clinical research.

“For example, imagine a cognitive assessment protocol involving music listening. From a few minutes of EEG recordings during music listening, we could derive several useful cognitive indicators, as music engages a variety of brain functions, from sensory and prediction processes to emotions. Furthermore, consider that music listening is much more pleasant than existing tasks.”

Motivation depends on how the brain processes fatigue

Fatigue – the feeling of exhaustion from doing effortful tasks – is something we all experience daily. It makes us lose motivation and want to take a break. Although scientists understand the mechanisms the brain uses to decide whether a given task is worth the effort, the influence of fatigue on this process is not yet well understood.

The research team conducted a study to investigate the impact of fatigue on a person’s decision to exert effort. They found that people were less likely to work and exert effort – even for a reward – if they were fatigued. The results are published in Nature Communications.

Intriguingly, the researchers found that there were two different types of fatigue that were detected in distinct parts of the brain. In the first, fatigue is experienced as a short-term feeling, which can be overcome after a short rest. Over time, however, a second, longer term feeling builds up, stops people from wanting to work, and doesn’t go away with short rests.

“We found that people’s willingness to exert effort fluctuated moment by moment, but gradually declined as they repeated a task over time,” says Tanja Müller, first author of the study, based at the University of Oxford. “Such changes in the motivation to work seem to be related to fatigue – and sometimes make us decide not to persist.”

The team tested 36 young, healthy people on a computer-based task, where they were asked to exert physical effort to obtain differing amounts of monetary rewards. The participants completed more than 200 trials and in each, they were asked if they would prefer to ‘work’ – which involved squeezing a grip force device – and gain the higher rewards offered, or to rest and only earn a small reward.

The team built a mathematical model to predict how much fatigue a person would be feeling at any point in the experiment, and how much that fatigue was influencing their decisions of whether to work or rest.
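The article does not give the model's equations. As a rough illustration of the idea, the Python sketch below tracks two fatigue components: one that builds with effort and recovers during rest, and one that builds with effort and does not recover. Every name, parameter value, and update rule here is an assumption chosen for the example, not the model used in the study.

```python
def simulate_fatigue(choices, efforts, alpha=0.1, delta=0.05, theta=0.01):
    """Track two hypothetical fatigue components across trials.

    choices: list of "work" or "rest", one per trial.
    efforts: effort level required on each trial.
    alpha:   how fast recoverable fatigue builds with effort (assumed).
    delta:   how much recoverable fatigue one rest removes (assumed).
    theta:   how fast unrecoverable fatigue builds with effort (assumed).
    Returns a list of (recoverable, unrecoverable) values after each trial.
    """
    recoverable, unrecoverable = 0.0, 0.0
    history = []
    for choice, effort in zip(choices, efforts):
        if choice == "work":
            recoverable += alpha * effort
            unrecoverable += theta * effort
        else:
            # Rest reduces only the short-term component.
            recoverable = max(0.0, recoverable - delta)
        history.append((recoverable, unrecoverable))
    return history


def value_of_working(reward, effort, recoverable, unrecoverable):
    """Reward minus an effort cost that is inflated by total fatigue."""
    return reward - effort * (1.0 + recoverable + unrecoverable)


# Five work trials followed by three rests, all at the same effort level.
history = simulate_fatigue(["work"] * 5 + ["rest"] * 3, [1.0] * 8)
print(history[4], history[7])
```

In this toy version, the short rests lower the recoverable component while the unrecoverable one stays where it was, so the same reward becomes less attractive as the session goes on.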

While performing the task, the participants also underwent an MRI scan, which enabled the researchers to look for activity in the brain that matched the predictions of the model.

They found areas of the brain’s frontal cortex had activity that fluctuated in line with the predictions, while an area called the ventral striatum signalled how much fatigue was influencing people’s motivation to keep working.

“This work provides new ways of studying and understanding fatigue, its effects on the brain, and on why it can change some people’s motivation more than others,” says Dr Matthew Apps, senior author of the study, based at the University of Birmingham’s Centre for Human Brain Health. “This helps begin to get to grips with something that affects many patients’ lives, as well as people while at work, school, and even elite athletes.”

A baby’s cry not only commands our attention, it also rattles our executive functions—the very neural and cognitive processes we use for making everyday decisions, according to a new University of Toronto study.

“Parental instinct appears to be hardwired, yet no one talks about how this instinct might include cognition,” says David Haley, co-author and Associate Professor of psychology at U of T Scarborough.

“If we simply had an automatic response every time a baby started crying, how would we think about competing concerns in the environment or how best to respond to a baby’s distress?”

The study looked at the effect infant vocalizations—in this case audio clips of a baby laughing or crying—had on adults completing a cognitive conflict task. The researchers used the Stroop task, in which participants were asked to rapidly identify the color of a printed word while ignoring the meaning of the word itself. Brain activity was measured using electroencephalography (EEG) during each trial of the cognitive task, which took place immediately after a two-second audio clip of an infant vocalization.
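For readers unfamiliar with the Stroop task, the Python sketch below builds a small set of trials in which a color word is shown in some ink color and the correct answer is always the ink. Trials where word and ink match are conventionally called congruent, and the others incongruent. The three-color set and the dictionary layout are assumptions for illustration; the study's actual stimuli are not described here.

```python
import itertools

COLORS = ["red", "green", "blue"]  # hypothetical stimulus set


def stroop_trials(colors=COLORS):
    """Build every word/ink pairing and label whether the two match."""
    trials = []
    for word, ink in itertools.product(colors, repeat=2):
        trials.append({
            "word": word,             # what the text says (to be ignored)
            "ink": ink,               # the color it is printed in
            "correct_response": ink,  # participants report the ink color
            "congruent": word == ink,
        })
    return trials


trials = stroop_trials()
incongruent = [t for t in trials if not t["congruent"]]
print(len(trials), len(incongruent))
```

The incongruent trials are the ones that create cognitive conflict, because the meaning of the word competes with the ink color the participant has to name.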

The brain data revealed that the infant cries reduced attention to the task and triggered greater cognitive conflict processing than the infant laughs. Cognitive conflict processing is important because it controls attention—one of the most basic executive functions needed to complete a task or make a decision, notes Haley, who runs U of T’s Parent-Infant Research Lab.

“Parents are constantly making a variety of everyday decisions and have competing demands on their attention,” says Joanna Dudek, a graduate student in Haley’s Parent-Infant Research Lab and the lead author of the study.

“They may be in the middle of doing chores when the doorbell rings and their child starts to cry. How do they stay calm, cool and collected, and how do they know when to drop what they’re doing and pick up the child?”

A baby’s cry has been shown to cause aversion in adults, but it could also create an adaptive response by “switching on” the cognitive control parents use in effectively responding to their child’s emotional needs while also addressing other demands in everyday life, adds Haley.

“If an infant’s cry activates cognitive conflict in the brain, it could also be teaching parents how to focus their attention more selectively,” he says.

“It’s this cognitive flexibility that allows parents to rapidly switch between responding to their baby’s distress and other competing demands in their lives—which, paradoxically, may mean ignoring the infant momentarily.”

The findings add to a growing body of research suggesting that infants occupy a privileged status in our neurobiological programming, one deeply rooted in our evolutionary past. But, as Haley notes, it also reveals an important adaptive cognitive function in the human brain.

Children’s brains are far more engaged by their mother’s voice than by voices of women they do not know, a new study from the Stanford University School of Medicine has found.

Brain regions that respond more strongly to the mother’s voice extend beyond auditory areas to include those involved in emotion and reward processing, social functions, detection of what is personally relevant and face recognition.

The study, which is the first to evaluate brain scans of children listening to their mothers’ voices, was published online May 16 in the Proceedings of the National Academy of Sciences. The strength of connections between the brain regions activated by the voice of a child’s own mother predicted that child’s social communication abilities, the study also found.

“Many of our social, language and emotional processes are learned by listening to our mom’s voice,” said lead author Daniel Abrams, PhD, instructor in psychiatry and behavioral sciences. “But surprisingly little is known about how the brain organizes itself around this very important sound source. We didn’t realize that a mother’s voice would have such quick access to so many different brain systems.”

Preference for mom’s voice

Decades of research have shown that children prefer their mothers’ voices: In one classic study, 1-day-old babies sucked harder on a pacifier when they heard the sound of their mom’s voice, as opposed to the voices of other women. However, the mechanism behind this preference had never been defined.

“Nobody had really looked at the brain circuits that might be engaged,” senior author Vinod Menon, PhD, professor of psychiatry and behavioral sciences, said. “We wanted to know: Is it just auditory and voice-selective areas that respond differently, or is it more broad in terms of engagement, emotional reactivity and detection of salient stimuli?”

The study examined 24 children ages 7 to 12. All had IQs of at least 80, none had any developmental disorders, and all were being raised by their biological mothers. Parents answered a standard questionnaire about their child’s ability to interact and relate with others. And before the brain scans, each child’s mother was recorded saying three nonsense words.

“In this age range, where most children have good language skills, we didn’t want to use words that had meaning because that would have engaged a whole different set of circuitry in the brain,” said Menon, who is the Rachael L. and Walter F. Nichols, MD, Professor.

Two mothers whose children were not being studied, and who had never met any of the children in the study, were also recorded saying the three nonsense words. These recordings were used as controls.

MRI scanning

The children’s brains were scanned via magnetic resonance imaging while they listened to short clips of the nonsense-word recordings, some produced by their own mother and some by the control mothers. Even from very short clips, less than a second long, the children could identify their own mothers’ voices with greater than 97 percent accuracy.

The brain regions that were more engaged by the voices of the children’s own mothers than by the control voices included auditory regions, such as the primary auditory cortex; regions of the brain that handle emotions, such as the amygdala; brain regions that detect and assign value to rewarding stimuli, such as the mesolimbic reward pathway and medial prefrontal cortex; regions that process information about the self, including the default mode network; and areas involved in perceiving and processing the sight of faces.

“The extent of the regions that were engaged was really quite surprising,” Menon said.

“We know that hearing mother’s voice can be an important source of emotional comfort to children,” Abrams added. “Here, we’re showing the biological circuitry underlying that.”

Children whose brains showed a stronger degree of connection between all these regions when hearing their mom’s voice also had the strongest social communication ability, suggesting that increased brain connectivity between the regions is a neural fingerprint for greater social communication abilities in children.

‘An important new template’

“This is an important new template for investigating social communication deficits in children with disorders such as autism,” Menon said. His team plans to conduct similar studies in children with autism, and is also in the process of investigating how adolescents respond to their mother’s voice to see whether the brain responses change as people mature into adulthood.

“Voice is one of the most important social communication cues,” Menon said. “It’s exciting to see that the echo of one’s mother’s voice lives on in so many brain systems.”

Bilingualism Comes Naturally to Our Brains

The brain uses a shared mechanism for combining words from a single language and for combining words from two different languages, a team of neuroscientists has discovered. Its findings indicate that language switching is natural for those who are bilingual because the brain has a mechanism that does not detect that the language has switched, allowing for a seamless transition in comprehending more than one language at once.

“Our brains are capable of engaging in multiple languages,” explains Sarah Phillips, a New York University doctoral candidate and the lead author of the paper, which appears in the journal eNeuro. “Languages may differ in what sounds they use and how they organize words to form sentences. However, all languages involve the process of combining words to express complex thoughts.”

“Bilinguals show a fascinating version of this process–their brains readily combine words from different languages together, much like when combining words from the same language,” adds Liina Pylkkänen, a professor in NYU’s Department of Linguistics and Department of Psychology and the senior author of the paper.

An estimated 60 million people in the U.S. use two or more languages, according to the U.S. Census. However, despite the widespread nature of bi- and multilingualism, domestically and globally, the neurological mechanisms used to understand and produce more than one language are not well understood.

This terrain is an intriguing one; bilinguals often mix their two languages together as they converse with one another, raising questions about how the brain functions in such exchanges.

To better understand these processes, Phillips and Pylkkänen, who is also part of the NYU Abu Dhabi Institute, explored whether bilinguals interpret these mixed-language expressions using the same mechanisms as when comprehending single-language expressions or, alternatively, if understanding mixed-language expressions engages the brain in a unique way.

To test this, the scientists measured the neural activity of Korean/English bilinguals.

Here, the study’s subjects viewed a series of word combinations and pictures on a computer screen. They then had to indicate whether or not the picture matched the preceding words. The words either formed a two-word sentence or were simply a pair of verbs that did not combine with each other into a meaningful phrase (e.g., “icicles melt” vs. “jump melt”). In some instances, the two words came from a single language (English or Korean) while in others both languages were used, with the latter mimicking mixed-language conversations.

In order to measure the study subjects’ brain activity during these experiments, the researchers deployed magnetoencephalography (MEG), a technique that maps neural activity by recording magnetic fields generated by the electrical currents produced by our brains.

The recordings showed that Korean/English bilinguals, in interpreting mixed-language expressions, used the same neural mechanism as they did while interpreting single-language expressions.

Specifically, the brain’s left anterior temporal lobe, a brain region well-studied for its role in combining the meanings of multiple words, was insensitive to whether the words it received were from the same language or from different languages. This region, then, proceeded to combine words into more complex meanings so long as the meanings of the two words could be combined, regardless of which language each word came from.

These findings suggest that language switching is natural for bilinguals because the brain has a combinatory mechanism that does not “see” that the language has switched.

“Earlier studies have examined how our brains can interpret an infinite number of expressions within a single language,” observes Phillips. “This research shows that bilingual brains can, with striking ease, interpret complex expressions containing words from different languages.”

How Shared Neural Codes Help Us Recognize Familiar Faces

The ability to recognize familiar faces is fundamental to social interaction, as visual information activates social and personal knowledge about a person who is familiar. But how individuals in social groups process this information in their brains has long been a question. Distinct information about familiar faces is stored in a neural code that is shared across brains, according to a new study published in the Proceedings of the National Academy of Sciences.

“Within visual processing areas, we found that information about personally familiar and visually familiar faces is shared across the brains of people who have the same friends and acquaintances,” says first author Matteo Visconti di Oleggio Castello, Guarini ’18, a postdoctoral neuroscience scholar at the University of California, Berkeley, who conducted this research as a graduate student in psychological and brain sciences. “We were surprised to find that the shared information about personally familiar faces also extends to areas that are non-visual and important for social processing, suggesting that there is shared social information across brains.”

For the study, the research team applied a method called hyperalignment to create a common representational space for understanding how brain activity is similar among participants. The team obtained fMRI data from 14 graduate students in the same PhD program in three sessions. In one session, participants watched parts of the film The Grand Budapest Hotel. These data were used to align participants’ brain responses to a common representational space. In the other two fMRI sessions, participants were asked to look at faces of fellow graduate students with whom they were personally familiar and at faces of strangers with whom they were visually familiar, but about whom they had no other information. The researchers used machine learning classifiers to predict what face a participant was looking at based on the brain activity of the other participants.
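The idea of predicting one participant's viewed face from the other participants' brain activity can be illustrated with a minimal sketch. It is not the study's pipeline: it assumes the responses have already been brought into a common space, it swaps in a simple nearest-centroid classifier, and all data, sizes, and function names below are hypothetical.

```python
import math
import random


def mean_pattern(patterns):
    """Average several equally long response patterns, feature by feature."""
    n = len(patterns)
    return [sum(values) / n for values in zip(*patterns)]


def distance(a, b):
    """Euclidean distance between two response patterns."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def decode_left_out(data, test_subject):
    """Predict which identity the left-out subject saw, using only the others.

    data[subject][identity] is a pattern assumed to be in a common space.
    Returns a dict mapping each true identity to the predicted identity.
    """
    others = [s for s in data if s != test_subject]
    identities = list(data[test_subject])
    # One centroid per identity, built without the left-out subject.
    centroids = {
        i: mean_pattern([data[s][i] for s in others]) for i in identities
    }
    return {
        i: min(identities,
               key=lambda c: distance(data[test_subject][i], centroids[c]))
        for i in identities
    }


def cross_subject_accuracy(data):
    """Leave-one-subject-out decoding accuracy across all subjects."""
    correct = total = 0
    for subject in data:
        predictions = decode_left_out(data, subject)
        correct += sum(true == pred for true, pred in predictions.items())
        total += len(predictions)
    return correct / total


# Synthetic stand-in data (hypothetical): a shared template per identity
# plus subject-specific noise. Real fMRI data would replace this.
rng = random.Random(0)
n_features = 50
templates = {f"face_{k}": [rng.gauss(0, 1) for _ in range(n_features)]
             for k in range(4)}
data = {
    f"subject_{s}": {
        name: [v + rng.gauss(0, 0.5) for v in template]
        for name, template in templates.items()
    }
    for s in range(5)
}

print("cross-subject decoding accuracy:", cross_subject_accuracy(data))
```

Decoding succeeds here only because the synthetic subjects share one template per face; if each subject had a private code, accuracy would fall to chance.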

For visually familiar identities, participants only knew what the faces looked like. The results showed that the identity of visually familiar faces could be decoded with accuracy only in brain areas that are involved in visual processing of faces. On the other hand, the identity of personally familiar faces could be decoded with accuracy across participants in brain areas involved in visual processing and, surprisingly, also in areas involved in social cognition. These areas included the dorsal medial prefrontal cortex, which is involved in processing other people’s intentions and traits; the precuneus, an area active when processing personally familiar faces; the insula, which is involved in emotional processing; and the temporal parietal junction, which plays an important role in social cognition and in representing the mental states of others, what’s known as “theory of mind.”

This research builds on the team’s earlier work, which found that these theory-of-mind areas in the brain are activated when a person sees someone personally familiar.

“This is what allows us to interact in the most appropriate way with someone who is familiar,” says senior author Maria (Ida) Gobbini, a research associate professor in the Center for Cognitive Neuroscience and associate professor in the department of experimental, diagnostic, and specialty medicine at the University of Bologna. For example, how you interact with a friend or family member may be quite different from the way you interact with a colleague or boss.

“It would have been quite possible that everybody has their own private code for what people are like, but this is not the case,” says co-author James Haxby, professor of psychological and brain sciences. “Our research shows that processing familiar faces really has to do with general knowledge about people.”

Dopamine’s many roles, explained

Among the neurotransmitters in the brain, dopamine has gained an almost mythical status. Decades of research have established its contribution to several seemingly unrelated brain functions including learning, motivation, and movement, raising the question of how a single neurotransmitter can play so many different roles.

(Image caption: Researchers record the activity of neurons in the brain’s olfactory learning center (bottom), as the fly receives a drop of sucrose)

Untangling dopamine’s diverse functions has been challenging, in part because the advanced brains of humans and other mammals contain different kinds of dopamine neurons, all embedded in highly complex circuits. In a new study, Rockefeller’s Vanessa Ruta and her team dive deep into the question by looking instead at the much simpler brain of the fruit fly, whose neurons and their connections have been mapped in detail.

As in humans, a fly’s dopamine neurons provide a signal for learning, helping the animal to link a particular odor to a particular outcome. Learning that, for example, apple cider vinegar contains sugar serves to shape the animal’s future behavior on its next encounter with that odor. But Ruta’s team discovered that the activity of the same dopamine neurons also correlates strongly with the animal’s ongoing behavior. The activity of these dopamine neurons does not simply encode the mechanics of movement, but rather appears to reflect the motivation or goal underlying the fly’s actions in real time. In other words, the same dopamine neurons that teach animals long-term lessons also provide moment-to-moment reinforcement, encouraging the flies to continue with a beneficial action.

“There seems to be an intimate connection between learning and motivation, two different facets of what dopamine does,” says Ruta, who published the findings in Nature Neuroscience.

Continuous learning

Smells are important to flies. A brain center for olfactory learning, called the mushroom body, is responsible for teaching them which smells signify tasty sugar. There, three types of neurons come together: Kenyon cells that respond to odors, the output neurons that send signals to the rest of the brain, and the dopamine-producing neurons. When the fly encounters an odor and then gets a sugar reward, a quick release of dopamine alters the strength of connections between neurons of the mushroom body, essentially helping the fly to make new associations and change its future response to that odor.

But Ruta and her colleagues have noticed ongoing dopamine signaling even in the absence of rewards. The same neurons that helped the flies learn associations also fired frequently as the animal moved. “That raised the question, are these neurons representing specific aspects of the movement, like how the animal is moving its legs, or are they related to something else, like the goal of the animal?” Ruta says.

To find out, the team developed a virtual-reality system in which fruit flies can navigate an olfactory environment, walking on a treadmill-like ball while their brain activity is monitored by a microscope over their head. A stream of air delivers odors through a small tube. When the fly gets a whiff of an attractive odor, like apple cider vinegar, it reorients and starts to move upwind, towards the source.

Using this system, the researchers were able to examine the fly’s brain activity under different conditions. They found that the activity of dopamine neurons closely reflected movements as they were happening, but only when the flies engaged in purposeful tracking, and not when they were just wandering about.

When the researchers suppressed the activity of the dopamine neurons, the animals diminished their tracking of the odor, even when they were starving and therefore had a heightened interest in food-related smells. In contrast, activating the neurons in food-indifferent, fully fed flies propelled them into active pursuit of the odor.

Together, the findings reveal how one dopamine pathway can perform two functions: conveying motivational signals to rapidly shape ongoing behaviors while also providing instructive signals to guide future behavior through learning. “It gives us a deeper understanding of how a single pathway can generate different forms of flexible behavior,” Ruta says.

The next step is to understand how the other neurons know what a burst of dopamine means at any given time. One possibility, Ruta says, is that learning is a more continuous, dynamic process than often thought: On short timescales, animals continuously evaluate their behavior at every step, learning not just the final associations, but also the actions that lead them there.

New Study Helps in Finally Breaking the “Silence” on the Brain Network

Studying the complex network of operations in the brain not only helps us understand its working better, but also provides avenues for potential treatments for brain disorders. But linking the brain network to actual behavior is challenging. In a new study, researchers have discovered that pinpointed suppression or “silencing” of certain areas of monkey brains using genetically engineered drugs can be used to reveal changes to their operational network and the subsequent behavioral effects.

Scientists have been studying the human brain for centuries, yet they have only scratched the surface of all there is to know about this complex organ. In 1990, the face of neuroscience changed with the invention of functional magnetic resonance imaging (fMRI). fMRI works on the idea that when a certain area of the brain is being used, it experiences an increase in blood flow. This technique has been used to study neurological activity in the brains of myriad animals and humans as well, providing valuable information on cognitive and movement-based functions.

fMRI has also revealed that “activating” a certain part of the brain has effects on other anatomically or functionally connected regions. Trying to solve this “network” of operations in the brain is one of the key issues in neuroscience.

In a recent study, a research team—including Toshiyuki Hirabayashi and Takafumi Minamimoto from the National Institutes for Quantum Science and Technology (QST)—has shown that gene-targeting drugs in macaque monkeys can cause multifaceted behavioral effects via the altered operation of relevant brain networks, thus opening a critical path towards understanding the network of operations underlying higher functions in primates (monkeys, humans etc.). “Our technique will allow us to study how disturbances to the functional brain network lead to certain symptoms. This will help us to work backwards to clarify the network mechanisms behind brain disorders with similar symptoms, thus leading to new treatments,” reveals Dr. Toshiyuki Hirabayashi, principal researcher at QST, who led the study.

Conventional approaches to activating or suppressing areas of the brain include electrical stimulation or the injection of a psychoactive substance called muscimol, but recent research has focused on genetic targeting techniques because of how specific they are. Called chemogenetics, these techniques rely on artificial drugs that are designed to bind specifically to genetically induced artificial proteins called “receptors.” The drugs bind to the receptors, and thus influence physiological and neurological processes in the brain, spinal cord, and other parts of the body where the receptors are genetically expressed. Combining chemogenetics with fMRI enables non-invasive visualization of network-level changes induced by local activity manipulation. But, unlike the recent study, chemogenetic fMRI studies so far have focused on a resting state, which might not provide the most useful results when trying to study task-related or sensory-related activities.

For their investigation into functional brain networks, the research team studied the effects of fMRI-guided chemogenetics on hand-grasping in macaques. To do this, they first studied the part of the brain that is responsible for precise finger movement, and then silenced (suppressed) it for one hand using a “designer receptor exclusively activated by designer drugs” (DREADD). They then gave the monkeys with silenced hand-grasping skills a task that involved picking up food pellets from a board consisting of small slots. They found that the monkeys could pick up pellets well with the non-silenced hand, which was on the same side of the body as the silenced brain region. But they struggled to use the affected hand, which was on the opposite side. The scientists also saw that the designer drug caused a reduced fMRI signal from “downstream” areas of the monkey brains, providing insight into the rest of the network dysfunction underlying the altered hand-grasping behavior. Finally, they saw that silencing the hand sensory region caused unexpectedly elevated activity in the foot sensory region with an increased sensitivity in the foot on the opposite side of the body, which further suggested that portions of the network have inhibitory effects on other parts of the system.

These findings demonstrate that targeted chemogenetic silencing in macaques can cause both stimulatory and inhibitory (i.e., bidirectional) changes in brain activity, which can be identified using fMRI. Furthermore, chemogenetics offers a minimally invasive way to repeatedly manipulate the same location in the brain without causing damage to the brain tissue, making the approach beneficial for the study of the functional brain network. According to Dr. Hirabayashi, “Applying chemogenetic fMRI to higher brain functions in macaques like memory or affection will lead to translational understanding of causal network mechanisms for those functions in the human brain.”

With developments like these, it is easy to see humankind having a clearer picture of the workings of the human brain soon!

(Image caption: Schematic representation of the targeted silencing experiment. The scientists found that silencing the brain area (SI) for hand sensation surprisingly increased activity in the brain area for foot sensation. Credit: Toshiyuki Hirabayashi from National Institutes for Quantum Science and Technology)

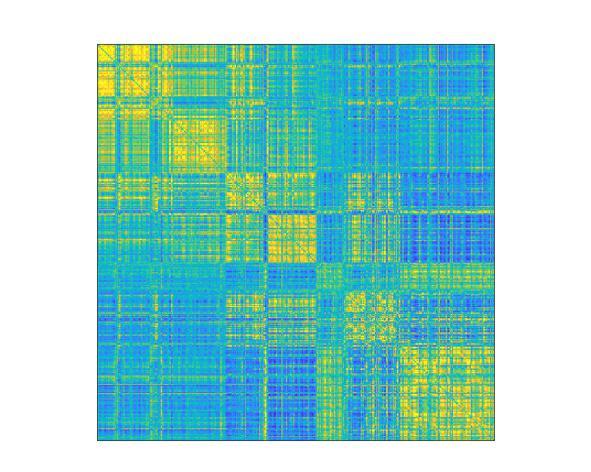

Our brains have a “fingerprint” too

An EPFL scientist has pinpointed the signs of brain activity that make up our brain fingerprint, which – like our regular fingerprint – is unique.

“I think about it every day and dream about it at night. It’s been my whole life for five years now,” says Enrico Amico, a scientist and SNSF Ambizione Fellow at EPFL’s Medical Image Processing Laboratory and the EPFL Center for Neuroprosthetics. He’s talking about his research on the human brain in general, and on brain fingerprints in particular. He learned that every one of us has a brain “fingerprint” and that this fingerprint constantly changes in time. His findings have just been published in Science Advances.

“My research examines networks and connections within the brain, and especially the links between the different areas, in order to gain greater insight into how things work,” says Amico. “We do this largely using MRI scans, which measure brain activity over a given time period.” His research group processes the scans to generate graphs, represented as colorful matrices, that summarize a subject’s brain activity. This type of modeling technique is known in scientific circles as network neuroscience or brain connectomics. “All the information we need is in these graphs, which are commonly known as ‘functional brain connectomes.’ The connectome is a map of the neural network. They inform us about what subjects were doing during their MRI scan – if they were resting or performing some other tasks, for example. Our connectomes change based on what activity was being carried out and what parts of the brain were being used,” says Amico.

Two scans are all it takes

A few years ago, neuroscientists at Yale University studying these connectomes found that every one of us has a unique brain fingerprint. Comparing the graphs generated from MRI scans of the same subjects taken a few days apart, they were able to correctly match up the two scans of a given subject nearly 95% of the time. In other words, they could accurately identify an individual based on their brain fingerprint. “That’s really impressive because the identification was made using only functional connectomes, which are essentially sets of correlation scores,” says Amico.
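To make the matching step concrete, here is a minimal sketch of identifying a person from connectomes treated as sets of correlation scores. It is an illustration under stated assumptions, not the Yale or EPFL code: each connectome is flattened to a list of edge values, similarity is a plain Pearson correlation, and the data and function names are hypothetical.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation between two equally long lists of edge values."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (spread_x * spread_y)


def identify(session1, session2):
    """For each session-2 connectome, return the index of the most similar
    session-1 connectome (the predicted identity)."""
    matches = []
    for target in session2:
        scores = [pearson(target, candidate) for candidate in session1]
        matches.append(scores.index(max(scores)))
    return matches


def identification_rate(session1, session2):
    """Fraction of subjects whose two scans were matched to each other."""
    matches = identify(session1, session2)
    return sum(m == i for i, m in enumerate(matches)) / len(matches)


# Synthetic stand-in data (hypothetical): each subject has a stable
# "signature" of edge values, observed with fresh noise in each session.
rng = random.Random(0)
n_subjects, n_edges = 10, 200
signatures = [[rng.gauss(0, 1) for _ in range(n_edges)]
              for _ in range(n_subjects)]
session1 = [[v + rng.gauss(0, 0.5) for v in sig] for sig in signatures]
session2 = [[v + rng.gauss(0, 0.5) for v in sig] for sig in signatures]

print("identification rate:", identification_rate(session1, session2))
```

In this toy setting, raising the noise level or shortening the edge list makes the two scans of one person harder to match. That is loosely analogous to asking how much scan time a fingerprint needs before it can be identified.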

(Image caption: Map of functional brain connectomes Credit: © 2021 EPFL)

He decided to take this finding one step further. In previous studies, brain fingerprints were identified using MRI scans that lasted several minutes. But he wondered whether these prints could be identified after just a few seconds, or if there was a specific point in time when they appear – and if so, how long would that moment last? “Until now, neuroscientists have identified brain fingerprints using two MRI scans taken over a fairly long period. But do the fingerprints actually appear after just five seconds, for example, or do they need longer? And what if fingerprints of different brain areas appeared at different moments in time? Nobody knew the answer. So, we tested different time scales to see what would happen,” says Amico.

A brain fingerprint in just 1 minute and 40 seconds

His research group found that seven seconds wasn’t long enough to detect useful data, but that around 1 minute and 40 seconds was. “We realized that the information needed for a brain fingerprint to unfold could be obtained over very short time periods,” says Amico. “There’s no need for an MRI that measures brain activity for five minutes, for example. Shorter time scales could work too.” His study also showed that the fastest brain fingerprints start to appear from the sensory areas of the brain, and particularly the areas related to eye movement, visual perception and visual attention. As time goes by, frontal cortex regions, the ones associated with more complex cognitive functions, also start to reveal information unique to each of us.

The next step will be to compare the brain fingerprints of healthy patients with those suffering from Alzheimer’s disease. “Based on my initial findings, it seems that the features that make a brain fingerprint unique steadily disappear as the disease progresses,” says Amico. “It gets harder to identify people based on their connectomes. It’s as if a person with Alzheimer’s loses his or her brain identity.”

Along these lines, potential applications might include early detection of neurological conditions in which brain fingerprints disappear. Amico’s technique can be used in patients affected by autism, or stroke, or even in subjects with drug addictions. “This is just another little step towards understanding what makes our brains unique: the opportunities that this insight might create are limitless.”

Teaching Ancient Brains New Tricks

The science of physics has strived to find the best possible explanations for understanding matter and energy in the physical world across all scales of space and time. Modern physics is filled with complex concepts and ideas that have revolutionized the way we see (and don’t see) the universe. The mysteries of the physical world are increasingly being revealed by physicists who delve into non-intuitive, unseen worlds, involving the subatomic, quantum and cosmological realms. But how do the brains of advanced physicists manage this feat, of thinking about worlds that can’t be experienced?

In a recently published paper in npj Science of Learning, researchers at Carnegie Mellon University have found a way to decode the brain activity associated with individual abstract scientific concepts pertaining to matter and energy, such as fermion or dark matter.

Robert Mason, senior research associate, Reinhard Schumacher, professor of physics and Marcel Just, the D.O. Hebb University Professor of Psychology, at CMU investigated the thought processes of their fellow CMU physics faculty concerning advanced physics concepts by recording their brain activity using functional Magnetic Resonance Imaging (fMRI).

Unlike many other neuroscience studies that use brain imaging, this one was not out to find “the place in the brain” where advanced scientific concepts reside. Instead, this study’s goal was to discover how the brain organizes highly abstract scientific concepts. An encyclopedia organizes knowledge alphabetically, a library organizes it according to something like the Dewey Decimal System, but how does the brain of a physicist do it?

The study examined whether the activation patterns evoked by the different physics concepts could be grouped in terms of concept properties. One of the most novel findings was that the physicists’ brains organized the concepts into those with measurable versus immeasurable size. Here on Earth for most of us mortals, everything physical is measurable, given the right ruler, scale or radar gun. But for a physicist, the magnitude of some concepts, like dark matter, neutrinos or the multiverse, is not measurable. And in the physicists’ brains, the measurable versus immeasurable concepts are organized separately.

Of course, some parts of the brain organization of the physics professors resembled the organization in physics students’ brains, such as the grouping of concepts that had a periodic nature. Light, radio waves and gamma rays have a periodic nature but concepts like buoyancy and the multiverse do not.

But how can this interpretation of the brain activation findings be assessed? The study team found a way to generate predictions of the activation patterns of each of the concepts. But how can the activation evoked by dark matter be predicted? The team recruited an independent group of physics faculty to rate each concept on each of the hypothesized organizing dimensions on a 1-7 scale. For example, a concept like “duality” would tend to be rated as immeasurable (i.e., low on the measurable magnitude scale). A computational model then determined the relation between the ratings and activation patterns for all of the concepts except one of them and then used that relation to predict the activation of the left-out concept. The accuracy of this model was 70% on average, well above chance at 50%. This result indicates that the underlying organization is well understood. This procedure is demonstrated for the activation associated with the concept of dark matter in the accompanying figure.
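The leave-one-out logic described above can be sketched in a few lines. This is an illustration only: the article does not name the computational model, so an ordinary least-squares linear map from ratings to activation is assumed here, and the ratings, "voxel" values, and function names are hypothetical rather than taken from the study.

```python
def solve(a, b):
    """Solve the square system a·x = b by Gauss-Jordan elimination
    with partial pivoting."""
    n = len(a)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def fit(ratings, values):
    """Least-squares weights (intercept first) mapping a concept's
    dimension ratings to one voxel's activation."""
    rows = [[1.0] + list(r) for r in ratings]
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)]
           for i in range(k)]
    xty = [sum(r[i] * v for r, v in zip(rows, values)) for i in range(k)]
    return solve(xtx, xty)


def leave_one_out(ratings, patterns):
    """Predict each concept's activation pattern from a model trained
    on all the other concepts."""
    n_voxels = len(patterns[0])
    predictions = []
    for held in range(len(ratings)):
        train_r = [r for i, r in enumerate(ratings) if i != held]
        train_p = [p for i, p in enumerate(patterns) if i != held]
        weights = [fit(train_r, [p[v] for p in train_p])
                   for v in range(n_voxels)]
        x = [1.0] + list(ratings[held])
        predictions.append(
            [sum(w * xi for w, xi in zip(ws, x)) for ws in weights])
    return predictions


# Hypothetical ratings on two dimensions (1-7 scale) for six concepts.
ratings = [(1, 7), (2, 3), (4, 4), (6, 1), (7, 5), (3, 6)]
# Hypothetical activations in three voxels, built as exact linear
# functions of the ratings so the sketch has a known right answer.
patterns = [[0.5 + 1.0 * a - 0.5 * b,
             2.0 - 0.25 * a + 0.75 * b,
             1.0 + 0.1 * a + 0.2 * b] for a, b in ratings]

for predicted, actual in zip(leave_one_out(ratings, patterns), patterns):
    print([round(p, 3) for p in predicted], [round(v, 3) for v in actual])
```

Because the synthetic activations are exactly linear in the ratings, the held-out predictions match the true patterns here. With real, noisy fMRI data the predictions would only be partly right, which is why an accuracy figure measured against chance is reported.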

(Image caption: Based on how other physics concepts are represented in terms of their underlying dimensions, a mathematical model can accurately predict the brain activation pattern of a new concept like dark matter)

Creativity of Thought

Near the beginning of the 20th century, post-Newtonian physicists radically advanced the understanding of space, time, matter, energy and subatomic particles. The new concepts arose not from their perceptual experience, but from the generative capabilities of the human brain. How was this possible?

The neurons in the human brain have a large number of computational capabilities with various characteristics, and experience determines which of those capabilities are put to use in various possible ways in combination with other brain regions to perform particular thinking tasks. For example, every healthy brain is prepared to learn the sounds of spoken language, but an infant’s experience in a particular language environment shapes which phonemes of which language are learned.

The genius of civilization has been to use these brain capabilities to develop new skills and knowledge. What makes all of this possible is the adaptability of the human brain. We can use our ancient brains to think of new concepts, which are organized along new, underlying dimensions. An example of a “new” physics dimension significant in 20th-century, post-Newtonian physics is “immeasurability” (a property of dark matter, for example), which stands in contrast to the “measurability” of classical physics concepts (such as torque or velocity). This new dimension is present in the brains of all university physics faculty tested. The scientific advances in physics were built with the new capabilities of human brains.

Another striking finding was the large degree of commonality across physicists in how their brains represented the concepts. Even though the physicists were trained in different universities, languages and cultures, there was a similarity in brain representations. This commonality in conceptual representations arises because the brain system that automatically comes into play for processing a given type of information is the one that is inherently best suited to that processing. As an analogy, consider that the parts of one’s body that come into play to perform a given task are the best suited ones: to catch a tennis ball, a closing hand automatically comes into play, rather than a pair of knees or a mouth or an armpit. Similarly, when physicists are processing information about oscillation, the brain system that comes into play is the one that would normally process rhythmic events, such as dance movements or ripples in a pond. And that is the source of the commonality across people. It is the same brain regions in everyone that are recruited to process a given concept.

So the secret of teaching ancient brains new tricks, as the advance of civilization has repeatedly done, is to empower creative thinkers to develop new understandings and inventions, by building on or repurposing the inherent information processing capabilities of the human brain. When these newly developed concepts are communicated to others, they root themselves in the same information processing capabilities of the recipients’ brains that the original developers used. Mass communication and education can propagate the advances to entire populations. Thus the march of science, technology and civilization continues to be driven by the most powerful entity on Earth, the human brain.

‘Fight or flight’ – unless internal clocks are disrupted

Here’s how it’s supposed to work: Your brain sends signals to your body to release different hormones at certain times of the day. For example, you get a boost of the hormone cortisol — nature’s built-in alarm system — right before you usually wake up.

But hormone release actually relies on the interconnected activity of clocks in more than one part of the brain. New research from Washington University in St. Louis shows how daily release of glucocorticoids depends on coordinated clock-gene and neuronal activity rhythms in neurons found in two parts of the hypothalamus, the suprachiasmatic nucleus (SCN) and paraventricular nucleus (PVN).

The new study, conducted with freely behaving mice, is published in Nature Communications.

“Normal behavior and physiology depends on a near 24-hour circadian release of various hormones,” said Jeff Jones, who led the study as a postdoctoral research scholar in biology in Arts & Sciences and recently started work as an assistant professor of biology at Texas A&M University. “When hormone release is disrupted, it can lead to numerous pathologies, including affective disorders like anxiety and depression and metabolic disorders like diabetes and obesity.

“We wanted to understand how signals from the central biological clock — a tiny brain area called the SCN — are decoded by the rest of the brain to generate these diverse circadian rhythms in hormone release,” said Jones, who worked with Erik Herzog, the Viktor Hamburger Distinguished Professor in Arts & Sciences at Washington University and senior author of the new study.

The daily timing of hormone release is controlled by the SCN. Located in the hypothalamus, just above where the optic nerves cross, neurons in the SCN send daily signals that are decoded in other parts of the brain that talk to the adrenal glands and the body’s endocrine system.

“Cortisol in humans (corticosterone in mice) is more typically known as a stress hormone involved in the ‘fight or flight’ response,” Jones said. “But the stress of waking up and preparing for the day is one of the biggest regular stressors to the body. Having a huge amount of this glucocorticoid released right as you wake up seems to help you gear up for the day.”

Or for the night, if you’re a mouse.

The same hormones that help humans prepare for dealing with the morning commute or a challenging work day also help mice meet their nightly step goals on the running wheel.

(Image caption: Projections from SCN neurons (magenta) send daily signals to rhythmic PVN neurons (cyan) to regulate circadian glucocorticoid release. Credit: Jeff Jones)

Using a novel neuronal recording approach, Jones and Herzog recorded brain activity in individual mice for up to two weeks at a time.

“Recording activity from identified types of neurons for such a long period of time is difficult and data intensive,” Herzog said. “Jeff pioneered these methods for long-term, real-time observations in behaving animals.”

Using information about each mouse’s daily rest-activity and corticosterone secretion, along with gene expression and electrical activity of targeted neurons in their brains, the scientists discovered a critical circuit between the SCN and neurons in the PVN that produce the hormone that triggers release of glucocorticoids.

Turns out, it’s not enough for the neurons in the SCN to send out daily signals; the ‘local’ clock in the PVN neurons also has to be working properly in order to produce coordinated daily rhythms in hormone release.

Experiments that eliminated a clock gene in the circadian-signal-receiving area of the brain broke the regular daily cycle.

“There’s certain groups of neurons in the SCN that communicate timing information to groups of neurons in the PVN that regulate daily hormone release,” Jones said. “And for a normal hormone rhythm to proceed, you need clocks in both the central pacemaker and this downstream region to work in tandem.”

The findings in mice could have implications for humans down the road, Jones said. Future therapies for cortisol-related diseases and genetic conditions in humans will need to take into account the importance of a second internal clock.

Scientists Pinpoint the Uncertainty of Our Working Memory

The human brain regions responsible for working memory content are also used to gauge the quality, or uncertainty, of memories, a team of scientists has found. Its study uncovers how these neural responses allow us to act and make decisions based on how sure we are about our memories.

“Access to the uncertainty in our working memory enables us to determine how much to ‘trust’ our memory in making decisions,” explains Hsin-Hung Li, a postdoctoral fellow in New York University’s Department of Psychology and Center for Neural Science and the lead author of the paper, which appears in the journal Neuron. “Our research is the first to reveal that the neural populations that encode the content of working memory also represent the uncertainty of memory.”

Working memory, which enables us to maintain information in our minds, is an essential cognitive system that is involved in almost every aspect of human behavior—notably decision-making and learning.

For example, when reading, working memory allows us to store the content we just read a few seconds ago while our eyes keep scanning through the new sentences. Similarly, when shopping online, we may compare, “in our mind,” the item in front of us on the screen with previous items already viewed and still remembered.

“It is not only crucial for the brain to remember things, but also to weigh how good the memory is: How certain are we that a specific memory is accurate?” explains Li. “If we feel that our memory for the previously viewed online item is poor, or uncertain, we would scroll back and check that item again in order to ensure an accurate comparison.”

While studies on human behaviors have shown that people are able to evaluate the quality of their memory, less clear is how the brain achieves this.

More specifically, it had previously been unknown whether the brain regions that hold the memorized item also register the quality of that memory.

To find out, the researchers conducted a pair of experiments to better understand how the brain stores working memory information and how, simultaneously, it represents the uncertainty of remembered items (that is, how good the memory is).

In the first experiment, human participants performed a spatial visual working memory task while a functional magnetic resonance imaging (fMRI) scanner recorded their brain activity. For each task, or trial, the participant had to remember the location of a target—a white dot shown briefly on a computer screen—presented at a random location on the screen and later report the remembered location through eye movement by looking in the direction of the remembered target location.

Here, fMRI signals allowed the researchers to decode the location of the memory target—what the subjects were asked to remember—in each trial. By analyzing brain signals corresponding to the time during which participants held their memory, they could determine the location of the target the subjects were asked to memorize. In addition, through this method, the scientists could accurately predict memory errors made by the participants; by decoding their brain signals, the team could determine what the subjects were remembering and therefore spot errors in their recollections.

In the second experiment, the participants reported not only the remembered location, but also how uncertain they felt about their memory in each trial. The resulting fMRI signals recorded from the same brain regions allowed the scientists to decode the uncertainty reported by the participants about their memory.

Taken together, the results yielded the first evidence that the human brain registers both the content and the uncertainty of working memory in the same cortical regions.

“The knowledge of uncertainty of memory also guides people to seek more information when we are unsure of our own memory,” Li says in noting the utility of the findings.

Statistical model defines ketamine anesthesia’s effects on the brain

Neuroscientists at MIT and Massachusetts General Hospital have developed a statistical framework that rigorously describes the brain state changes that patients experience under ketamine-induced anesthesia.

By developing the first statistical model to finely characterize how ketamine anesthesia affects the brain, a team of researchers at MIT’s Picower Institute for Learning and Memory and Massachusetts General Hospital have laid new groundwork for three advances: understanding how ketamine induces anesthesia; monitoring the unconsciousness of patients in surgery; and applying a new method of analyzing brain activity.

Based on brain rhythm measurements from nine human and two animal subjects, the new model published in PLOS Computational Biology defines the distinct, characteristic states of brain activity that occur during ketamine-induced anesthesia, including how long each lasts. It also tracks patterns of how the states switch from one to the next. The “beta-hidden Markov model” therefore provides anesthesiologists, neuroscientists, and data scientists alike with a principled guide to how ketamine anesthesia affects the brain and what patients will experience.

In parallel work the lab of senior author Emery N. Brown, an anesthesiologist at MGH and Edward Hood Taplin Professor of Computational Neuroscience at MIT, has developed statistical analyses to characterize brain activity under propofol anesthesia, but as the new study makes clear, ketamine produces entirely different effects. Efforts to better understand the drug and to improve patient outcomes therefore depend on having a ketamine-specific model.

“Now we have an extremely solid statistical stake in the ground regarding ketamine and its dynamics,” said Brown, a professor in MIT’s Department of Brain and Cognitive Sciences and Institute for Medical Engineering & Science, as well as at Harvard Medical School.

Making a model

After colleagues at MGH showed alternating patterns of high-frequency gamma rhythms and very low-frequency delta rhythms in patients under ketamine anesthesia, Brown’s team, led by graduate student Indie Garwood and postdoc Sourish Chakravarty, set out to conduct a rigorous analysis. Chakravarty suggested to Garwood that a hidden Markov model might fit the data well because it is suited to describing systems that switch among discrete states.

(Image caption: A multitaper spectrogram of 120 seconds of readings from a human patient under ketamine anesthesia shows distinct bands of high power (warmer colors) at high “gamma” frequencies and very low “delta” frequencies)

To conduct the analysis, Garwood and the team gathered data from two main sources. One set of measurements came from forehead-mounted EEGs on nine surgical patients who volunteered to undergo ketamine-induced anesthesia for a period of time before undergoing surgery with additional anesthetic drugs. The other came from electrodes implanted in the frontal cortex of two animals in the lab of Earl Miller, Picower Professor of Neuroscience at MIT.

Analysis of the readings with the hidden Markov model, using a beta distribution as an observation model, captured and characterized not only the previously observed alternations between gamma and delta rhythms, but also a few other, more subtle states that mixed the two rhythms.

Importantly, the model showed that the various states move through a characteristic order and defined how long each state lasts. Garwood said understanding these patterns allows for making predictions much in the same way that a new driver can learn to predict traffic lights. For instance, learning that lights change from green to yellow to red and that the yellow light only lasts a few seconds can help a new driver predict what to do when coming to an intersection. Similarly, anesthesiologists monitoring rhythms in a patient can use the findings to ensure that brain states are changing as they should, or make adjustments if they are not.
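To make the idea concrete, here is a minimal Python sketch of a hidden Markov model with beta-distributed observations. It is not the authors' model: the three state names, the transition probabilities, the beta parameters, and the choice of observation (the fraction of EEG power in the gamma band) are all made-up assumptions for illustration. It shows two things the article describes: how long a state is expected to last, and how a most likely sequence of states can be read off from a series of measurements.

```python
import math

# Hypothetical three-state model; every number here is illustrative,
# not taken from the study.
STATES = ["gamma_burst", "mixed", "slow_delta"]

# TRANSITIONS[i][j]: probability of moving from state i to state j per time step.
TRANSITIONS = [
    [0.90, 0.10, 0.00],
    [0.00, 0.80, 0.20],
    [0.15, 0.00, 0.85],
]

# Beta(a, b) parameters of the observation in each state. The observation is
# assumed to be a value strictly between 0 and 1, such as the fraction of
# EEG power in the gamma band.
BETA_PARAMS = [(8.0, 2.0), (5.0, 5.0), (2.0, 8.0)]


def expected_dwell_steps(i):
    """Mean number of consecutive time steps spent in state i."""
    return 1.0 / (1.0 - TRANSITIONS[i][i])


def beta_logpdf(x, a, b):
    """Log density of a Beta(a, b) distribution at x, for 0 < x < 1."""
    log_norm = math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
    return log_norm + (a - 1) * math.log(x) + (b - 1) * math.log(1 - x)


def log_prob(p):
    """Log of a probability, with log(0) treated as negative infinity."""
    return math.log(p) if p > 0 else float("-inf")


def viterbi(observations):
    """Most likely state sequence, assuming a uniform initial distribution."""
    n = len(STATES)
    scores = [
        math.log(1.0 / n) + beta_logpdf(observations[0], *BETA_PARAMS[s])
        for s in range(n)
    ]
    back_pointers = []
    for x in observations[1:]:
        new_scores, pointers = [], []
        for j in range(n):
            # Best predecessor state for landing in state j at this step.
            best_i = max(
                range(n), key=lambda i: scores[i] + log_prob(TRANSITIONS[i][j])
            )
            pointers.append(best_i)
            new_scores.append(
                scores[best_i]
                + log_prob(TRANSITIONS[best_i][j])
                + beta_logpdf(x, *BETA_PARAMS[j])
            )
        scores = new_scores
        back_pointers.append(pointers)

    # Trace back from the best final state.
    state = max(range(n), key=lambda s: scores[s])
    path = [state]
    for pointers in reversed(back_pointers):
        state = pointers[state]
        path.append(state)
    path.reverse()
    return [STATES[s] for s in path]
```

With these made-up numbers, `expected_dwell_steps(0)` comes to about 10 time steps, because a state that repeats with probability 0.9 lasts 1 / (1 - 0.9) steps on average. Calling `viterbi` on a list of values between 0 and 1 returns one state label per measurement.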

Characterizing the patterns of brain states and their transitions will also help neuroscientists better understand how ketamine acts in the brain, Brown added. As researchers create computational models of the underlying brain circuits and their response to the drug, he said, the new findings will give them important constraints. For instance, for a model to be valid, it should not only produce alternating gamma and slow rhythm states but also the more subtle ones. It should produce each state for the proper duration and yield state transitions in the proper order.

“Lacking this model was preventing some of our other work from going forward in a rigorous way,” Garwood said. “Developing this method allowed us to get that quantitative description that we need to be able to understand what’s going on and what sort of neural activity is generating these states.”

New ideas

As neuroscientists learn more about how ketamine induces unconsciousness from such efforts, one major implication is already apparent, Brown said. Whereas propofol causes brain activity to become dominated by very low-frequency rhythms, ketamine includes periods of high power in high-frequency rhythms. Those two very different means of achieving unconsciousness seem to suggest that consciousness is a state that can be lost in multiple ways, Brown said.

“I can make you unconscious by making your brain hyperactive in some sense, or I can make you unconscious by slowing it down,” he said. “The more general concept is there’s a dynamic—we can’t define it precisely—which is associated with you being conscious and as soon as you move away from that dynamic by being too fast or too slow, or too discoordinated or hypercoordinated, you can be unconscious.”

In addition to considering that hypothesis, the team is looking at several new projects including measuring ketamine’s effects across wider areas of the brain and measuring the effects as subjects awaken from anesthesia.

Developing systems that can monitor unconsciousness under ketamine anesthesia in a clinical setting will require developing versions of the model that can run in real-time, the authors added. Right now, the system can only be applied to data post-hoc.

20 Herbs to Power Memory and Boost Brain Activity

Our mind is our most important asset. We use our brain every day in carrying out tasks. It is thus important to keep our mind in top shape.

This is true for students who rely on a good memory and effective cognitive function. The same is true for people who mostly have to depend on their brainpower for work, e.g. writers, journalists, scientists, etc.

But keeping the brain healthy often requires more than getting enough rest, a balanced diet, and adequate exercise.

Sometimes supplements for brain health are needed for better memory and mental focus. To learn more about the benefits of herbs to boost your memory, click here: https://www.herbal-supplement-resource.com/20-herbs-to-power-memory-and-boost-brain-activity/
