#fanfic community

A study on fanfiction stories’ update frequency and number of reviews received

As a grad student, I often find myself debating whether to finish tasks all at once or to space them out over a reasonable period of time. In the fanfiction community, we have seen stories where multiple chapters are posted on the same day, while others are updated every few months or even years. As authors, if our goal is to attract readers and reviews, how long should we wait between chapters? Is it better to satisfy our readers with content all at once, or to keep them hooked by posting a bit at a time?

Our Approach

To answer these questions, we defined the “frequency” of updates in a story as the average number of days between chapter posts, and looked at fanfiction.net stories from 1997 to 2017 that had more than one chapter and at least one review. In this particular study, we considered only the first story posted by each author, to avoid miscalculating the accumulated review count for their subsequent stories. Note that the original chapter publish dates/times were not available in the dataset, so researchers estimated them from either the story publish time or the time of the first review. As a result, this dataset is more representative of stories with more reviews.
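The frequency metric can be sketched in a few lines, assuming each story is represented as a list of (estimated) chapter publish dates. The function name `update_frequency_days` is hypothetical; this is an illustration of the definition, not the study's actual code.

```python
from datetime import date


def update_frequency_days(chapter_dates):
    """Average number of days between consecutive chapters of one story.

    Assumes `chapter_dates` holds the (estimated) publish date of each chapter.
    Stories with fewer than two chapters have no gaps, so they are rejected,
    mirroring the "more than one chapter" filter described above.
    """
    if len(chapter_dates) < 2:
        raise ValueError("need at least two chapters to measure a gap")
    ordered = sorted(chapter_dates)
    gaps = [(later - earlier).days for earlier, later in zip(ordered, ordered[1:])]
    return sum(gaps) / len(gaps)


# Chapters posted on Jan 1, Jan 4, and Jan 11 give gaps of 3 and 7 days.
print(update_frequency_days([date(2010, 1, 1), date(2010, 1, 4), date(2010, 1, 11)]))  # 5.0
```

Note that the average gap always equals (last date minus first date) divided by (number of chapters minus 1), so only the first and last chapter dates actually affect the result.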

What We Found

In this graph, each data point is a story, plotted by the total number of reviews it received (y-axis) against the average number of days between its chapters (x-axis). The x-axis is divided into 14 bins, representing “buckets” of stories whose chapters were posted every 1, 2, 3… 14 days on average. Although quite a few stories have up to hundreds of reviews, the median line plotted for each bin sits far below them, which indicates that the data are skewed to the right: most stories receive relatively few reviews, while a small number receive many.
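The binning and per-bin medians can be sketched as follows, assuming each story is an (average_days, review_count) pair and that a story falls into bin k when its average gap rounds to k days. The post does not say how bin edges were chosen, so the rounding rule (and Python's `round`, which sends exact halves to the nearest even number) is an assumption, and the sample values are made up for illustration.

```python
from collections import defaultdict
from statistics import median


def median_reviews_per_bin(stories, max_days=14):
    """Group stories into 1..max_days day bins and return each bin's median review count.

    `stories` is an iterable of (average_days_between_chapters, review_count).
    Assumption: a story belongs to bin k when its average gap rounds to k;
    stories outside 1..max_days are ignored.
    """
    bins = defaultdict(list)
    for avg_days, reviews in stories:
        k = round(avg_days)
        if 1 <= k <= max_days:
            bins[k].append(reviews)
    return {k: median(bins[k]) for k in sorted(bins)}


# Made-up data: one very popular story (400 reviews) barely moves the bin-5 median.
sample = [(4.8, 3), (5.1, 7), (5.3, 400), (9.9, 2), (10.2, 6)]
print(median_reviews_per_bin(sample))  # {5: 7, 10: 4.0}
```

The example shows why a median is a sensible summary for right-skewed data: the mean of bin 5 would be over 130 reviews, while the median stays at 7.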

My initial guess was that stories with chapters posted 3-4 days apart might receive the most reviews, since readers are likely to revisit the same story for updates every few days. The graph is roughly consistent with this speculation: the first peak is at five days. This means that half of the stories whose chapters were published five days apart on average received at least 9 reviews. Other peaks are observed at ten and thirteen days.

Your Thoughts?

How often do YOU update a story? What factors do you consider when planning to post a new chapter? As a reader, would you prefer coming back every few days to read the new chapter and review, or reading them all at once? We look forward to seeing your comments and learning more about this topic!

Author: Sourojit Ghosh

As a creative writer myself, I’ve always been anxious about getting reviews on the content I put out there. As I’m sure others who publish any form of writing can attest to, reviews form an integral part of our development as writers. However, I also find myself paying attention to not just what a review says, but also how it is said. Specifically, the emotions expressed in a review often shape my interpretation of it.

With that in mind, we at the University of Washington Human-Centered Data Science Lab (UW-HDSL) are interested in researching the emotions present in the many reviews written by members of the fanfiction community. By investigating the relationship between the length of a review and the emotions expressed in it, we aim to understand how relationships between members of the community grow as they share likes and dislikes.

Introduction

Our previous research with the fanfiction community has found widespread encouragement for budding relationships in its distributed-mentoring setting. The members of the community, mostly young adults from all over the world, are incredibly expressive and often eager to support each other in the writing process. Most of the reviews we have seen in the community are rife with emotion, their words practically jumping off the page. This collectively supportive environment seeks not only to bring out the best in each individual but also to form meaningful relationships that extend beyond those of anonymous writers and readers of fanfiction.

Methods and Findings

For this exploration, we examined 1000 reviews of various fanfiction stories published on the site. We decided to classify them as exhibiting one of 11 emotions: Like, Joy/Happiness, Anticipation/Hope, Dislike, Discomfort/Disgust, Anger/Frustration, Sadness, Surprise, Confused, Unknown, and No Emotion. Figure 1 shows an example of a review coded in this way using TextPrizm, a web tool developed by members of the UW-HDSL.

Figure 1: An example of a review being coded for emotions

By coding these reviews for emotions, we are trying to gain a better understanding of the trends in emotions expressed by reviewers across the community. By identifying such trends, we hope to learn how relationships are formed between users sharing common interests and having similar reactions to certain content.

Figures 2 and 3 display our preliminary results so far. Figure 2 represents the number of reviews being classified as having each emotion, while Figure 3 shows the average lengths of reviews in the dataset expressing each emotion.

Figure 2: A bar graph showing the no. of reviews each emotion was assigned to.

Figure 3: A bar graph showing the average no. of words in a review expressing each emotion.
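The two summaries behind Figures 2 and 3 could be computed along these lines, assuming each coded review is stored as its text plus its single emotion label. Counting words with a whitespace split is an assumption and may differ from how the lab measured review length; the function name and sample reviews are hypothetical.

```python
from collections import defaultdict


def emotion_summary(coded_reviews):
    """Return {emotion: (review_count, average_word_count)}.

    `coded_reviews` is an iterable of (review_text, emotion_label) pairs,
    one label per review. Words are counted with a simple whitespace split.
    """
    word_counts = defaultdict(list)
    for text, emotion in coded_reviews:
        word_counts[emotion].append(len(text.split()))
    return {
        emotion: (len(counts), sum(counts) / len(counts))
        for emotion, counts in word_counts.items()
    }


reviews = [
    ("I loved this chapter so much!", "Joy/Happiness"),
    ("Great update", "Like"),
    ("This is wonderful", "Joy/Happiness"),
]
print(emotion_summary(reviews))  # {'Joy/Happiness': (2, 4.5), 'Like': (1, 2.0)}
```

The first number in each pair corresponds to a bar in Figure 2 and the second to a bar in Figure 3.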

The high number of reviews expressing Joy/Happiness and Like is an encouraging sign that many users took the time to express their positivity and support toward the writers. Another emerging trend can be seen in the reviews marked as No Emotion. These reviews, though few, averaged about 80 words each and were found to contain thoughtful discussions of global issues such as religious tensions and sexual violence. While the reviews discussed above highlight the positivity inherent in the community, these reviews remind us of the maturity and depth of thought that its members also possess, which is even more inspiring given that the community is made up mostly of young adults.

Conclusion and Future Work

This initial examination of a small set of reviews offers some insight into the relationship between emotions and review length. Exploring a larger set of reviews may allow us to test whether the currently observed trends are statistically significant, and could provide further insight into how reviews are integral to the way users build relationships on Fanfiction.net.

We would love to hear from you, members of the fanfiction community, about what you think of our work and how you view the emotions expressed in reviews of your writing. At the same time, we would also be interested in knowing if you express certain emotions in your reviews more extensively than others! If you have any questions or concerns about our data, feel free to respond to this post or send us an ask, and we would be happy to get back to you. And, as always, stay tuned for our future work with your wonderful fanfiction community!

Acknowledgments

We are incredibly grateful to Dr. Cecilia Aragon and undergraduate researcher Niamh Froelich at the UW Human-Centered Data Science Lab for the initial ideas behind the project, their insightful feedback, and constant support throughout the process. We are also grateful for the fantastic Fanfiction.net community, which continues to prosper each day and exist as a positively supportive environment for budding and seasoned writers alike.

A time-shifted serial correlation analysis of reviewing and being reviewed.

Acknowledgements: Investigation by Arthur Liu with thanks to Dr. Cecilia Aragon and Jenna Frens for feedback and editing and also to team lead Niamh Froelich.

Is it true that giving someone a review will make that person more likely to write reviews as well? Conversely, is it true that writing more reviews yourself will help you get more reviews from others?

In this post, we explore one avenue of reciprocity by analyzing the time series of reviews given vs. reviews received.

Of course, you have to be careful with this technique. The inspiration for our analysis comes partly from Tyler Vigen’s Spurious Correlations site (http://www.tylervigen.com/spurious-correlations), where he shows striking correlations between clearly unrelated events. With a humorous perspective, he reminds us that correlation is not evidence of causation (sociology doctorates and rocket launches are totally coincidental), but the techniques behind it are still an interesting way to investigate potential relationships between two different time series.

Back to our topic of reciprocity, we wanted to investigate the relationship between reviews given and reviews received. We had two hypotheses that we were interested in testing: first, we were curious if users who received more reviews would be more inclined to give reviews themselves. Second, we were curious if giving reviews would help increase the number of reviews you personally received.

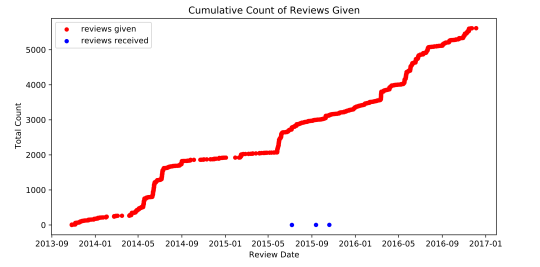

To get into specifics, here is an example plot of a real user’s review activity.

Let’s break it down. This plot follows the activity of a single user over the course of several years. It plots the cumulative number of reviews they gave (in red) and the cumulative number of reviews they received on their fanfictions (in blue). The red curve shows that this user gave out reviews at a very consistent rate. The blue curve also captures spikes in the number of reviews received, which may correspond to the release of a new chapter.

If there were a strong link between reviews given and reviews received in either direction, we would expect increases in one to be followed by increases in the other. Here is an example where we witness such a relationship:

Since it is harder to see changes in activity level in these cumulative plots, we then looked at the number of reviews given and received each month. Here’s what that looks like for the same person:
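Turning a user's review dates into per-month counts might look like this, assuming we have the date of every review the user gave (or received). Months with no activity are filled with zeros so the series stays evenly spaced, which matters for the shifting step described next. The function name is hypothetical.

```python
from collections import Counter
from datetime import date


def monthly_counts(review_dates, start, end):
    """Count reviews per calendar month from `start` to `end` inclusive.

    Months without any reviews get a count of 0, so the returned list is an
    evenly spaced time series with one entry per month.
    """
    per_month = Counter((d.year, d.month) for d in review_dates)
    series = []
    year, month = start.year, start.month
    while (year, month) <= (end.year, end.month):
        series.append(per_month.get((year, month), 0))
        month += 1
        if month == 13:
            year, month = year + 1, 1
    return series


given = [date(2012, 1, 5), date(2012, 1, 20), date(2012, 3, 2)]
print(monthly_counts(given, date(2012, 1, 1), date(2012, 4, 30)))  # [2, 0, 1, 0]
```

The cumulative curves in the earlier plot are simply running totals of a series like this one (for example, via `itertools.accumulate`).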

This time, it is more apparent that the reviews given and the reviews received follow a similar pattern of activity. In this example, the similarity takes the form of matching spikes.

Following Vigen’s approach, we could naively compute a correlation here, but there is a glaring flaw: one of the time series is clearly ahead of the other. So, what if we just shifted one of the time series so that they lined up, and then computed the correlation? This is the basic intuition of serial correlation (more precisely, lagged cross-correlation, since two different series are compared): we apply a range of possible shifts and compute the correlation between the shifted series for each one. The shift with the highest correlation is taken as the best match.
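A minimal sketch of the shift-and-correlate idea, assuming two equal-length monthly series and Pearson correlation. The function names, the minimum-overlap rule, and the sign convention (a positive lag pairs reviews given at step t with reviews received at step t + lag) are choices made for this sketch and may not match the lab's exact code or sign convention.

```python
from math import isnan, sqrt


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences (nan if either is constant)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        return float("nan")
    return cov / (sx * sy)


def lagged_correlations(given, received, max_lag, min_overlap=3):
    """Correlate given[t] with received[t + lag] for every lag in -max_lag..max_lag.

    Only the overlapping part of the two series is compared at each lag, and
    lags leaving fewer than `min_overlap` overlapping points are skipped.
    Sign convention (an assumption of this sketch): a positive lag means the
    received-reviews series trails the given-reviews series.
    """
    if len(given) != len(received):
        raise ValueError("series must have the same length")
    n = len(given)
    results = {}
    for lag in range(-max_lag, max_lag + 1):
        if n - abs(lag) < min_overlap:
            continue
        if lag >= 0:
            xs, ys = given[: n - lag], received[lag:]
        else:
            xs, ys = given[-lag:], received[: n + lag]
        results[lag] = pearson(xs, ys)
    return results


def best_lag(given, received, max_lag):
    """Return (lag, r) for the shift with the highest defined correlation."""
    corrs = {
        lag: r
        for lag, r in lagged_correlations(given, received, max_lag).items()
        if not isnan(r)
    }
    lag = max(corrs, key=corrs.get)
    return lag, corrs[lag]


# Toy monthly counts: the received spikes arrive two months after the given spikes.
given = [0, 5, 0, 0, 3, 0, 0, 0]
received = [0, 0, 0, 5, 0, 0, 3, 0]
print(best_lag(given, received, max_lag=4))  # lag 2, with r very close to 1.0
```

Comparing only the overlapping part at each shift keeps the two inputs to `pearson` the same length; the cost is that large shifts rest on fewer data points, which is why very short overlaps are skipped.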

The results for different shifts:

The best shift of “11 frames”:

In other words, for this person, giving a lot of reviews correlates well with receiving a lot of reviews roughly 11 months later. Of course, this doesn’t prove any sort of causation, but we can speculate that the increased number of reviews this user gave helped boost the number of reviews they got later!

After this analysis of an individual, we were curious whether the same trends existed across the larger community. The short answer is “eh, not really,” but it is interesting to see why this cool pattern might not generalize.

1. Not all individuals get reviews and give reviews at the same scale

Some users just like to give reviews, and some users just like to write stories!

For instance, here is someone who gave a lot of reviews and didn’t receive many themselves.

Here is someone who gave some reviews, but then focused on writing stories and received a lot more reviews instead!

For graphs like these, it is hard to apply the analysis we did earlier because the relationship is likely a lot weaker or there might just not be enough data points to capture it anyway.

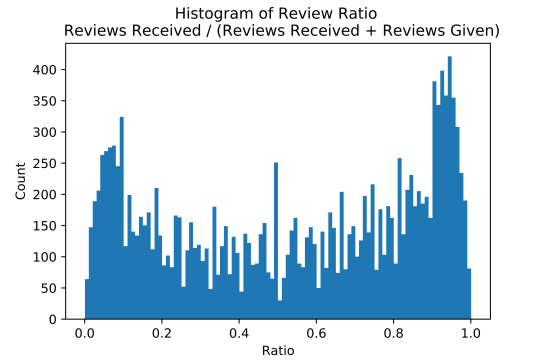

We can summarize these examples for the overall population by looking at each user’s ratio of reviews received to total review activity (reviews received plus reviews given).

For this sample of 10k users, those who primarily receive reviews have a larger ratio (right), and users who primarily give reviews have a smaller ratio (left). More precisely, a ratio of 1.0 means that a user only received reviews, for example: 10 reviews received / (10 reviews received + 0 reviews given) = 1. A ratio of 0.0 means they received no reviews at all. For each ratio, the graph shows how many of the 10k users had that ratio.

To address issue (1), we narrowed the scope to users with a relatively equal ratio of reviews given to reviews received, that is, a ratio close to 0.5.

Additionally, we kept only users who had received at least 10 reviews, so that we would have enough data points for our analysis. This is also why the filter was needed: the large spike at the 0.5 ratio consisted largely of users who had written one or two reviews and received the same number.
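The ratio and the two filters can be sketched as follows. The ratio formula and the 10-review minimum come from the text; the 0.4 to 0.6 band used for "relatively equal" is a hypothetical choice, since the post does not give the exact cutoff, and the user data are made up.

```python
def received_ratio(received, given):
    """Share of a user's review activity that is reviews received: r / (r + g)."""
    total = received + given
    if total == 0:
        raise ValueError("user has no review activity")
    return received / total


def select_balanced_users(users, low=0.4, high=0.6, min_received=10):
    """Keep users whose ratio lies in [low, high] and who received >= min_received reviews.

    `users` maps a user id to (reviews_received, reviews_given).
    The [low, high] band is a hypothetical stand-in for "relatively equal".
    """
    return [
        uid
        for uid, (received, given) in users.items()
        if received + given > 0
        and low <= received_ratio(received, given) <= high
        and received >= min_received
    ]


users = {
    "a": (10, 0),   # ratio 1.0: only received reviews
    "b": (0, 25),   # ratio 0.0: only gave reviews
    "c": (2, 2),    # ratio 0.5, but too few reviews received
    "d": (30, 25),  # ratio ~0.55 and enough data
}
print(received_ratio(10, 0))         # 1.0
print(select_balanced_users(users))  # ['d']
```

User "c" is the kind of account that made up the spike at 0.5: perfectly balanced, but with too little activity to support a time-series analysis.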

With this cleaned up, we also computed the lags on a finer scale (weeks instead of months), since we noticed that months were not granular enough. We computed the best lag for each user, and here is a plot of the results. The lag is the shift applied to the received-reviews series, and the correlation is how well the two series matched after that shift. A correlation of 1 means the two series rise and fall together perfectly, a correlation of -1 means that whenever one rises the other falls, and values in between, such as 0.8, indicate a positive but weaker relationship.

So the result here is both a little messier and less structured than we had hoped from our hypothesis, but that’s part of the research process!

To elaborate: along the x-axis (the lag), no particular range is noticeably denser than the rest. In fact, if we look at the histogram, we see something like this:

So we lied a little: the last lag of +20 weeks looks really popular, but this is actually an artifact of the serial correlation process. Recall this graph:

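The red line marks the chosen lag at the peak. In this case, the correlation actually peaked within the range of shifts we tried, but if we had truncated the graph at a shift of 5, the process would simply have picked that largest allowed shift, because the correlation was still rising there.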
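The edge artifact can be reproduced with a toy example: if the correlation is still rising at the largest shift we allow, picking the maximum simply returns that largest shift. The correlation values and the function name below are made up for illustration.

```python
def pick_best_lag(corr_by_lag, max_lag):
    """Return the lag with the highest correlation among lags 0..max_lag."""
    allowed = {lag: r for lag, r in corr_by_lag.items() if 0 <= lag <= max_lag}
    return max(allowed, key=allowed.get)


# Made-up correlations that keep rising until a true peak at lag 8.
corr = {0: 0.05, 1: 0.1, 2: 0.2, 3: 0.3, 4: 0.4, 5: 0.5,
        6: 0.6, 7: 0.7, 8: 0.8, 9: 0.7, 10: 0.6}

print(pick_best_lag(corr, max_lag=10))  # 8: the real peak is inside the range
print(pick_best_lag(corr, max_lag=5))   # 5: truncated, so the edge "wins"
```

The same thing can happen at the negative end of the range, which is why the first bucket is also suspect.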

Not convinced? Here’s the same analysis, but with lags calculated up to 40 weeks.

Looks like the 20 bucket wasn’t particularly special after all.

So, ignoring this last bucket (and the first bucket, for the same reason), we notice that the histogram reflects the same noisiness we observed in the lags.
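Tallying the per-user best lags while setting aside the two edge buckets might look like this. The per-user (lag, r) pairs are made up, and dropping exactly the ±max_lag values is our reading of the text rather than a documented rule; the optional `min_r` filter mirrors the r > 0.7 check mentioned further on.

```python
from collections import Counter


def lag_histogram(best_lags, max_lag, min_r=None):
    """Count users per best lag, dropping lags stuck at the search boundary.

    `best_lags` is a list of (lag, r) pairs, one per user. Lags equal to
    -max_lag or +max_lag are treated as "no reasonable shift found".
    If `min_r` is given, only users whose best correlation exceeds it count.
    """
    counts = Counter()
    for lag, r in best_lags:
        if abs(lag) == max_lag:
            continue  # likely an edge artifact rather than a real peak
        if min_r is not None and r <= min_r:
            continue
        counts[lag] += 1
    return dict(sorted(counts.items()))


per_user = [(-20, 0.4), (-3, 0.8), (2, 0.75), (2, 0.5), (20, 0.6), (20, 0.9), (11, 0.72)]
print(lag_histogram(per_user, max_lag=20))             # {-3: 1, 2: 2, 11: 1}
print(lag_histogram(per_user, max_lag=20, min_r=0.7))  # {-3: 1, 2: 1, 11: 1}
```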

What does this mean? It suggests that there is no general pattern that succinctly summarizes the larger population, and that we cannot conclude there is a common positive or negative lag between the number of reviews someone has given and the number of reviews they have received. Some authors gave more reviews after receiving more reviews (positive lags), some authors received more reviews after giving reviews (negative lags), and some authors did not exhibit much of a relationship either way (the first and last buckets, where no reasonable shift was found). Although these relationships do exist, their timing was not consistent across users, so we can’t say anything about fanfiction.net authors in general.

So…

2. Looking across users, we do not see consistent behavior in a time-shifted relationship between a person’s received and given review count

Even when we look only at the lags with the highest correlation (r > 0.7), the lags remain evenly distributed.

In summary, this isn’t a dead end! (With research, it rarely is!) But it helps paint a better picture of the users in the community and why this approach may not capture their behavior well. We see that the relationship between reviews received and given doesn’t necessarily follow a consistent time shift, and that this shift can in fact go in either direction. Try taking a look at your own reviewing trends, and see where you would be located within these graphs! Are you someone with a positive time shift, a negative time shift… or no strong correlation at all?

In the meantime, we’re still exploring some other interesting approaches to reciprocity! Stay tuned :)

Introduction

One of the questions we occasionally get from authors is: “What kinds of submissions get the most reviews?” We think this is a really interesting question and we’ve started doing some exploratory analyses related to the quantity of reviews that authors receive based on a variety of factors. One of the factors that we decided to check out was the number of words in a chapter. We were curious: Would shorter chapters get more reviews because they might take less time to read? Or longer chapters because there is more for reviewers to dig into? Or maybe there’s a sweet spot somewhere in between?

Methods

To look into this, we took a random sample of 10,000 FanFiction.net authors who published chapters during the 20-year period from 1997 to 2017. We then created a scatterplot in which each point is one of these 10,000 authors, the x-axis shows the median number of words across their published chapters, and the y-axis shows the median number of reviews received on those chapters. The points are segmented into six groups based on each author’s percentile for the total number of reviews received across all the chapters they have ever published. We then fitted a trendline to each of these segments, so we could more easily observe any relationship between chapter length and reviews received within each group. We also performed this analysis at the chapter level, with similar findings. The results are preliminary and warrant further exploration, but we’ll share what we’ve found so far.
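A sketch of the per-author summary and percentile grouping, assuming each author's chapters are given as (word_count, review_count) pairs. The post mentions six percentile groups but names only the top 1% and the top 25%; the other cutoffs below are hypothetical, as are the function names and sample values.

```python
from statistics import median


def author_summary(chapters):
    """Return (median words per chapter, median reviews per chapter, total reviews).

    `chapters` is a list of (word_count, review_count) pairs for one author.
    """
    words = [w for w, _ in chapters]
    reviews = [r for _, r in chapters]
    return median(words), median(reviews), sum(reviews)


# Hypothetical group boundaries (percentile of total reviews); only the
# top-1% and top-25% cutoffs are named in the post.
GROUP_CUTOFFS = [(99, "top 1%"), (75, "top 25% (excl. top 1%)"), (50, "50-75th"),
                 (25, "25-50th"), (10, "10-25th"), (0, "bottom 10%")]


def percentile_group(total, all_totals):
    """Label an author by the percentage of authors with fewer total reviews."""
    pct = 100 * sum(t < total for t in all_totals) / len(all_totals)
    for cutoff, label in GROUP_CUTOFFS:
        if pct >= cutoff:
            return label


print(author_summary([(1200, 3), (1800, 5), (2500, 4)]))  # (1800, 4, 12)

totals = list(range(1, 101))  # made-up totals for 100 authors
print(percentile_group(100, totals))  # top 1%
print(percentile_group(80, totals))   # top 25% (excl. top 1%)
```

Each author then becomes one scatterplot point at (median words, median reviews), colored by the group label.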

Results

It turns out that the small number of most highly reviewed authors in the top 1% saw an increase in reviews received up until chapters of almost 5,000 words in length, at which point their chapters began to receive fewer reviews on average.

For authors whose works are in the top 25% of reviews received (excluding the top 1%), the number of reviews received on chapters increases as chapter length increases. Interestingly, these authors do not appear to show the same drop-off in reviews for longer chapters that the top 1% did.

On the other hand, for the remaining authors, whose chapters are less highly reviewed, the number of reviews received changed little with chapter length.

Conclusion

These preliminary results point to some interesting potential implications for how an author might get the most reviews. For the most highly reviewed authors, aiming for chapters of around 5,000 words may be most likely to result in the highest levels of engagement. However, for the vast majority of authors, writing longer chapters is not likely to have a negative impact on engagement from reviewers, and may even result in more reviews.

How about you?

What are your experiences with receiving or providing reviews based on chapter length? We’d love to hear whether this is a factor that motivates you or something that you consider when writing or reviewing!

In January and February 2019, our research group conducted an interview study on how fanfiction authors seek feedback on their fiction. We interviewed 29 fanfiction authors and learned about their insights on feedback and their relationships with feedback providers. In this blog post, we discuss our findings on the impact of public comments, and the particular positive outcomes of comments that contain specific thoughts and insights.

Comments Are Generally Valued

Fanfiction authors appreciated public comments on their works. “All I want for Christmas is comments, if you liked it please let me know,” one of the authors we interviewed (P2) once wrote in her author’s note. All kinds of comments were welcome, as long as they were conveyed in a friendly and respectful tone.

Concise positive comments, such as “wow”, “amazing”, and “awwww this is so cute”, though simple and perhaps not very informative, are still valuable to authors. Those comments “tell you that you’re hitting the right emotional chords, that you’ve been on the right track” (P21). “That’s really helpful,” the same author added, and went on to explain:

“If there’s a session where you’re getting absolutely none of that, that might prompt you. Like okay, I meant that to be really eliciting a certain emotional response and it wasn’t getting it, so that might also be a sign to go in and kind of work on that” (P21).

Comments Help New Authors Enter the Community

Comments were especially valuable to new writers in the fandom. They made authors feel welcomed in the community, and helped authors learn about community norms and writing styles. One author told us that when they posted their first work in a new fandom, comments helped them get connected in the new community:

“You enter into new fandoms, you’re writing for a new audience and you don’t really know anyone… I don’t really know the rules of this particular fandom and I don’t really know what people are going to think of my stories is going to fit. And that initial bit of support and positive feedback to get that on early works, and to feel, okay, I’ve just sort of arrived in this in this fandom, and in this community, but people are making me feel welcome, and making me feel like what I’m writing is valued and appreciated by people” (P1).

Specific Comments Are Particularly Appreciated

While all kinds of comments were generally welcome, almost all of the authors we talked to expressed particular appreciation for comments in which the reader shared opinions and thoughts about specific aspects of the story. One author described how she had developed a personal practice of writing substantive comments when reading others’ stories:

“I try to copy certain lines while I’m reading and try to leave a substantial comment… and say I really liked your story because of ZYX…I do both because I’ve been writing fanfiction for a long time and I know it’s fun to have substantial comments. I do it because that’s what I like and I know it can make someone’s day” (P29).

Authors recognized the effort that readers put into substantial comments, so they regarded receiving those long specific comments as an honor:

“You don’t write a big long comment like that, if you’re not affected by something. Because it’s hard enough to get readers to click the Kudos button and just give me a little heart, let alone write a comment, let alone write a long detailed comment whenever that happens” (P14).

Specific Comments Recognize Authors’ Effort

Many authors mentioned that receiving long and specific comments made them feel that their effort had been recognized. When they were particularly proud of a part of their story, they liked hearing whether the emotions and thoughts they tried to convey affected readers the way they intended.

“It just feels great when I spent so much time working on something and working on a particular detail, I absolutely love hearing someone’s reaction to it, like, what specifically they liked about it… It’s great getting those compliments but I want to know about their experience living in the story that I’ve created” (P2).

“The most interesting and in depth sort of feedback, people really seem to connect with the characters and the characterization of the story and the writing of the story, which I really, really like. It’s very satisfying when you put a lot of effort into something and they actually noticed and they’re like, and they comment on it like, oh my God, the way you wrote this, the flow of it, the thing… that’s my own kink, hearing people say that they understood what I was writing and that they understood what I was going for” (P14).

Specific Comments Teach Authors about Writing

Being able to hear what the audience thought about their fiction was not only a joyful experience for fanfiction authors, but also a valuable learning opportunity. From specific comments, authors learned whether their writing style and the direction of the story worked for their readers. Some treated specific comments as feedback on parts of their writing they were unsure about. One author told us they experienced writer’s block when writing a certain character in their story. When they received positive comments on that character,

“they were commenting on my characterization for the character. And I was like ‘oh, thank you god,’ because I struggle with this particular character a lot” (P20).

Comments that specifically addressed that characterization helped the author validate their writing.

Specific Comments Connect Readers and Authors

Another important benefit of specific comments was that they fueled connections with readers. Specific comments elicited discussion between authors and readers. As one author said in the interview:

“I like having comments that are thoughtful and trying to analyze what I wrote, and are picking up what I put down basically… I usually respond to the comment and say thank you, and if they left analysis I talk back and forth” (P29).

These back-and-forth discussions led to further connections outside the specific story. “I’ve made friends with a lot of people who started out just commenting on my fics a lot. You end up commenting back, and start talking” (P13). In some cases, these connections later developed into beta reading relationships and friendships. “Most of the people that I sent work to… they’ve made comments that are the right sort of comments I suppose…” one author said while telling us the story of meeting her beta readers,

“and so I sent work to them after that sort of built up a bit of a relationship by then so that I know what sort of person they are and what sort of comments they might make… I know that if I sent it to them, they will be looking for the sorts of things that I’m looking for them to look for. The comment that they’ve made tells me that they’re reading it the way I want a beta reader to read it” (P26).

Summary

Our findings about public comments suggest that receiving comments is highly valuable for fanfiction authors, especially comments that offer thoughts and insights on specific aspects of the story and writing. We suggest that encouraging the exchange of specific public comments would benefit fanfiction communities.

We are continuing to publish blog posts about other findings from our interview study. Check back on our blog later for more findings!

Dammit I have another idea.

And it’s all your fault, @mystical-blaise

Busy with school, but I came down with strep and spent the few days of rest that I had re-reading all the comments left on AO3 for YITM, and let me just say, they always keep me motivated to continue that damn thing, even after all these years. I haven’t forgotten the fic, I’m just up to my eyeballs in CAD and math.

So seriously, thanks to everyone who comments on YITM, those that comment just once and those that keep coming back to comment. You’re amazing and I love you.