#content moderation

I wonder how many people who treat stringent content moderation and cancel culture as civilization’s first, last, and only line of defense against a world of widespread misogyny and racism understand how many of their favorite bits of entertainment would be unacceptable by today’s standards.

And no, I’m not talking about books published in 1884, when Mark Twain could drop the n-word more often than a hyperactive squirrel with butter-coated paws drops acorns, and no one would bat an eye. I’m not talking about movies released in 1961, when a white actor could play a racist caricature of a Japanese landlord to widespread praise from critics. I’m talking about 2006.

That year, Markus Zusak gave us The Book Thief, an eerily beautiful coming-of-age book set in Nazi Germany whose virtues would be drowned out by the flood of trigger warnings modern gatekeepers would attach to it. Opening with the death of Liesel’s brother (tw:death, tw:child death, tw:parental abandonment), it includes a loud, abrasive foster mother (tw:abuse, tw:child abuse, tw:verbal abuse, tw:mental abuse) who is portrayed as a headstrong protector of her family (tw:abuse apologism) and of the Jew they hide in their basement (tw:white saviorism), as well as a meek foster father who kowtows to his wife’s ways (tw:domestic abuse) and teaches Liesel to roll cigarettes (tw:smoking). It’s narrated by Death (are there even enough trigger warnings for that?), who, rather than condemn characters who have embraced Hitler and Nazism, points to the bitterness, grief, and misinformation catalyzing their fervor (tw:Nazi apologism).

To those of you readying a barrage of rebuttals to that summary, scrolling down to the comments to tell me I stripped the book of all nuance: that’s the whole point. The Book Thief is a very nuanced story that conveys its message in shades of grey. Few characters are wholly good or wholly evil. Death is a neutral figure, condemning the horrors of war while pitying those who fight it no matter their side, portraying the nightmarish consequences of hatred while showing the reader how it is born. But since when has nuance ever mattered to someone riding high on a wave of righteous anger?

Moving on, 2006 was also the year My Chemical Romance released The Black Parade, which sees Death (tw tw tw) telling the story of The Patient, a man whose life was filled with war, depression, political unrest, PTSD, religious guilt, self-loathing, broken relationships, and near-constant suicidal ideation—a life that ends in his thirties from heart complications due to a long, painful, emotionally draining battle with cancer. Millions of depressed kids, teens, and adults have found catharsis in the album’s raw, honest lyrics, but those same lyrics would earn the band a #CancelMCR hashtag today. To wit:

Another contusion, my funeral jag/Here’s my resignation, I’ll serve it in drag: Mocking drag queens and men who crossdress. Using a very real expression of gender identity for shock value. Blatantly transphobic.

Juliet loves the beast and the lust it commands/So drop the dagger and lather the blood on your hands Romeo: Toxic relationship. Probably violently abusive. #DumpThePatient, lady, and #MCRStopRomanticizingAbuse.

Wouldn’t it be grand to take a pistol by the hand?/And wouldn’t it be great if we were dead?: Oh my fucking god, they’re romanticizing suicide now? How was this album even allowed to be made? Who let this happen and how soon can we #cancel them?

If you’ve heard the album, you know none of the above interpretations are remotely true. You’ve probably shaken your head at the Daily Mail’s infamous claim that My Chem promoted self-harm and suicide, but the sad truth is that if The Black Parade were released in today’s climate, that claim would probably be taken up by the very people who now consider themselves fans. The raw honesty that resonated with so many could easily be taken as a stamp of approval on the very suicides its songs have prevented. The anti-suicide anthem, “Famous Last Words,” could be ignored or twisted into a mockery of those who condemn suicide, and the darkly wholesome “Welcome to the Black Parade” music video would likely be taken as enticement toward teens who want to end their lives: “Look at all the cool things you’ll get to see once you’re dead and gone!”

Again, anyone who is even a casual fan of The Black Parade knows this is a deliberately malicious misreading of the material. My Chem’s music has been gratefully embraced by LGBTQ+ kids looking for a place to belong, and the band members have been outspoken in their support. They’ve been quoted, on multiple occasions, speaking out against suicide and self-harm. We know Parade is not pro-anything except pro-keep on living. But we know this because we gave the band a chance to tell us. We assumed good intent when we listened to their music, and so their intended message came across without interference. Were Parade released today, in the era of AED (Assume the worst, Exaggerate the damage, and Demand outsized retribution), the resulting furor (and refusal to hear their objections to the rampant misinterpretations) could very well have forced My Chem to vanish into obscurity.

And look. I’m not against content moderation wholesale. I actually think it’s done some good in the world of entertainment. Podcast hosts and book reviewers who warn audience members about triggering content allow them to avoid that content before they suffer an anxiety attack. As a librarian, I have personally and enthusiastically recommended Does the Dog Die?, a website (doesthedogdie.com) that tracks hundreds of anxiety triggers in media, to colleagues who work with kids so they can allow their students to request a different book or movie if the assigned one would cause undue distress. Trigger warnings can prevent anxiety attacks. Content moderation allows audiences to make informed choices.

But some things are toxic in high amounts, and when it comes to content moderation, we’ve long since passed that mark.

When trigger warnings are used not as honest labels of content, but as a means to frighten people away from material they might otherwise enjoy, trigger warnings become toxic.

When self-appointed content moderators tell others what interpretations they should take from a piece of entertainment, rather than allowing them to come to their own conclusions, content moderation becomes toxic.

When artists are afraid to produce their most honest work for fear their honesty will be twisted into something dark and ugly, the world of fandom becomes toxic.

Content moderation is not bad in itself. It can actually be a valuable tool for sufferers of anxiety, PTSD, and other disorders. But when it goes hand in glove with cancel culture, it becomes a monster, keeping audiences from discovering something they might otherwise enjoy by twisting the content into something it’s not.

By all means, tag your triggers. Warn about your content. But don’t tell your followers to expect something horrible that isn’t even there.

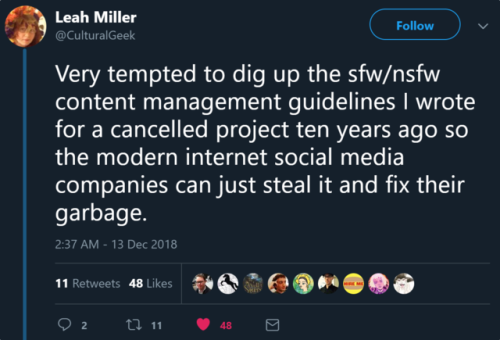

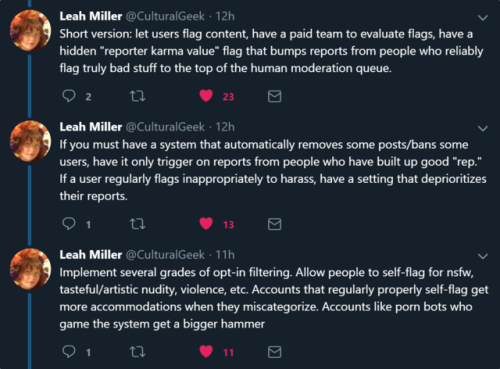

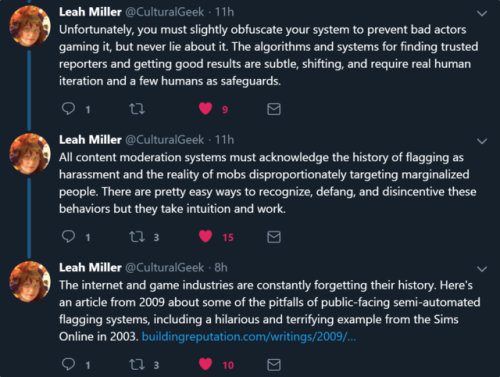

A few days ago I tweeted some thoughts about current conversations around content moderation and racism on AO3. I thought I’d reproduce them here:

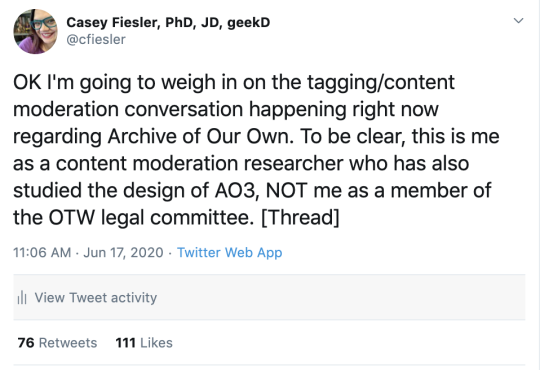

OK, I’m going to weigh in on the tagging/content moderation conversation happening right now regarding Archive of Our Own. To be clear, this is me as a content moderation researcher who has also studied the design of AO3, NOT me as a member of the OTW legal committee.

To clarify the issue for folks: Racism is a problem in fandom. In addition to other philosophical and structural concerns regarding OTW, there have been suggestions for adding required content warnings or other mechanisms to deal with racism in stories posted to the archive.

Here’s a description of the content warning system from a paper I published about the design of AO3, in which I used this as an example of designing to mitigate the value tension of inclusivity versus safety:

“Knowing that this tension would exist, and wanting to protect users from being triggered or stumbling across content they did not want to see, AO3 added required warnings for stories. These include graphic violence, major character death, rape, and underage sex. These warnings were chosen based on conventions at the time, what fan fiction writers already tended to warn for when posting stories elsewhere. Warnings are not only required, but are part of a visual display that shows up in search results. One early concern was that requiring these warnings might necessitate spoilers—for example, telling the reader ahead of time that there was a major character death. Therefore, AO3 added an additional warning tag: ‘Choose not to use archive warnings.’ Seeing this tag in search results essentially means ‘read at your own risk.’ Most interviewees found this to be a solution that did a good job at taking into account different kinds of needs.”

The idea is that certain kinds of objectionable content are allowed to be there as long as they’re properly labeled. Content will not be removed for having X, but it can be removed for not being tagged properly for having X. This lets users avoid content labeled with X.
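To make the "label it, don't remove it" rule concrete, here is a minimal model of it. This is a hypothetical sketch, not AO3’s actual code: the four warning names come from the quoted paper, while the `Work` type, the `violates_policy` and `visible_to` functions, and the choice to hide opt-out works from any reader who filters at all are assumptions for illustration.

```python
from dataclasses import dataclass

# Hypothetical names: the four required warnings listed in the quoted paper.
REQUIRED_WARNINGS = {"graphic violence", "major character death", "rape", "underage sex"}
# The opt-out tag, which essentially means "read at your own risk".
OPT_OUT = "choose not to use archive warnings"


@dataclass
class Work:
    title: str
    content: set   # what the work actually contains, as a moderator would judge it
    warnings: set  # the archive warnings the author applied


def violates_policy(work: Work) -> bool:
    """A work is never actionable just for containing warned-for content.
    It is actionable only if such content appears without the matching
    warning and without the opt-out tag."""
    if OPT_OUT in work.warnings:
        return False
    untagged = (work.content & REQUIRED_WARNINGS) - work.warnings
    return bool(untagged)


def visible_to(work: Work, avoid: set) -> bool:
    """Reader-side filter: hide works tagged with anything the reader avoids.
    Assumption: opt-out works are hidden from anyone who filters at all."""
    if OPT_OUT in work.warnings:
        return not avoid
    return not (work.warnings & avoid)
```

The design point the sketch captures is that moderation checks the label, not the content itself: the same story is fine when tagged and actionable when untagged, and readers do the rest of the filtering themselves.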

My students and I have studied content moderation systems in a number of contexts, including on Reddit and Discord, and I actually think that this system is kind of elegant and other platforms can learn from it. That said, it relies on strong social norms to work.

One of the big problems with content moderation is that not everyone has the same definition of what constitutes a rule violation. Like… folks on a feminist hashtag almost certainly have a different definition of what constitutes “harassment” than folks on the gg hashtag.

A few years ago we analyzed harassment policies on a bunch of different platforms, and usually it’s just like “don’t harass people,” but okay, what does that mean? I guarantee you there are people on Twitter who think rape threats aren’t harassment.

So a nice thing about communities moderating themselves, like on subreddits, is that they can create their own rules and have a shared understanding of what they mean (here’s a paper about rules on Reddit). So you can have a rule about harassment and within your community know what that means.

I’ve talked for a long time about the strong social norms in fandom and how they have allowed, in particular, for really effective self-regulation around copyright. In fact, I wrote about this really recently based on interviews conducted in 2014. HOWEVER -

Generally, I think social norms are not as strong in fandom as they used to be, in part because fandom has just gotten bigger, there are more people, there are some generational differences, and we’re more spread out. My PhD advisee Brianna and I wrote about this for TWC.

So the point here is that without those very strong shared norms, definitions differ - across sub-fandoms, across platforms, across people. Whether that thing we’re defining is commercialism, harassment, or racism.

This isn’t to say that there *shouldn’t* be a required content warning for racism in fics, but I think it’s important to be aware how fraught enforcement will be, because no matter how it is defined, a subset of fandom will not agree with that definition.

That said, design decisions like this are statements. When AO3 chose the required warnings, it was a statement about what types of content it is important to protect the community from. I would personally support a values-based decision that racism falls into that category.

Design also *influences* values. An example of this was AO3’s decision to include the “inspired by” tag, which directly signaled (through design) that remixing fics without explicit permission was okay. Another quote from the paper:

“Similarly, Naomi described a policy decision of AO3 that was a deliberate attempt to influence a value. Prior work understanding fandom norms towards re-use of content has shown something of a disconnect, with different standards for different types of work [15,16]. Surprisingly, although fan authors themselves are building on others’ work, some don’t want people to remix their remixes. In Naomi’s original blog post, there is some argument between fans about what AO3 should do about this, with suggestions for providing a mechanism for fan writers to give permission for remixing. Naomi described their ultimate design, and feels that in the time that has followed the creation of AO3, the values of the community have actually shifted to be more accepting of this practice:

‘We had baked in right from the beginning that you could post a work to the archive that was a remix, or sequel, or translation, or a podfic or whatever, based on another work, another fannish work. As long as you gave credit, you didn’t need permission. In fact, we built a system into the archive where it notifies [the original author]. That was because we were coming from a philosophy where what we’re doing is fair use. It’s legal. We are making transformative work. We don’t need permission from the original copyright holder. That’s why fanfic is legitimate. But what’s sauce for the goose is sauce for the gander… So, I think that’s an example, actually, where archive and OTW almost got a little bit ahead of the curve, got a little bit ahead of the broader community’s internal values. That was a deliberate concerted decision on our part.’”

A design change to AO3 that forces consideration of whether there is racism in a story you’re posting could have an impact on the overall values of the community by signaling that this consideration is necessary AND that you should be thinking of a community definition of racism.

Also important: content moderation has the potential to be abused. And I know that this happens in fandom, even around something as innocuous as copyright, since I’ve heard stories of harassment-based DMCA takedown campaigns.

There’s a LOT of tension in fandom around public shaming as a norm enforcement mechanism, and I would want to see this feature used as a “gentle reminder” and not a way to drum people out of fandom. (See more about that in this paper.)

That said, I think these reminders are needed. There will be a LOT of “uh I didn’t know that was racist” and that requires some education. Which is important but also an additional burden on volunteer moderators who may be grappling with this themselves.

This is more a thread of cautions than solutions, unfortunately. Because before there can be solutions, we need: (1) an answer to a very hard question, which is “what is racism in fanfiction/fandom?” and this answer needs to come from a diverse group of stakeholders, and (2) a solution for enforcement/moderation that both makes sure that folks directly impacted by racism are involved AND that we’re not asking for burdensome labor from already marginalized groups.

All of this comes down to: Content moderation is HARD for so many reasons, both on huge platforms and in communities. AO3 is kind of a unique case with its own problems but I am optimistic about solutions because so many people care about the values in fandom.

I’m still thinking about this and welcome others’ thoughts!

Twitter recently re-activated conservative commentator Jesse Kelly’s account after telling him that he was permanently banned from the platform.

While some might be infuriated with what happened to Kelly’s Twitter account, we should be wary of calls for government regulation of social media and related investigations in the name of free speech or the First Amendment.

Companies such as Twitter and Facebook will sometimes make content moderation decisions that seem hypocritical, inconsistent, and confusing. But private failure is better than government failure, not least because unlike government agencies, Twitter has to worry about competition and profits.

You know what the stupidest part about Musk wanting to reinstate Trump’s Twitter account is? It actually violates Musk’s stated goal for Twitter: to make a place where there are transparent rules that are fairly applied.

The problem with Trump’s social media bans is that they landed after he had repeatedly, flagrantly flouted the rules that the platforms used to kick off *lots* of other people, of all political persuasions.

Musk’s whole (notional) deal is: “set some good rules up and apply them fairly.” There are some hard problems in that seemingly simple proposition, but “I would let powerful people break the rules with impunity” is totally, utterly antithetical to that proposition.

Of course, Musk’s idea of the simplicity of setting up good rules and applying them fairly is also stupid, not because these aren’t noble goals but because attaining them at scale creates intractable, well-documented, well-understood problems.

I remember reading a book in elementary school (maybe “Mr Popper’s Penguins”?) in which a person calls up the TV meteorologist demanding to know what the weather will be like in ten years. The meteorologist says that’s impossible to determine.

This person is indignant. Meteorologists can predict tomorrow’s weather! Just do whatever you do to get tomorrow’s weather again, and you’ll get the next day’s weather. Do it ten times, you’ll have the weather in 10 days. Do it 3,650 times and you’ll have the weather in 10 years.

Musk - and other “good rules, fairly applied” people - think that all you need to do is take the rules you use to keep the conversation going at a dinner party and apply them over and over again, and you can have a good 100,000,000-person conversation.

There are lots of ways to improve the dumpster fire of content moderation at scale. Like, we could use interoperability and other competition remedies to devolve moderation to smaller communities - IOW, just stop trying to scale moderation.

https://www.eff.org/deeplinks/2021/07/right-or-left-you-should-be-worried-about-big-tech-censorship

And/or we could adopt the Santa Clara Principles, developed by human rights and free expression advocates as a consensus position on balancing speech, safety and due process:

https://santaclaraprinciples.org/

And if we *must* have systemwide content-moderation, we could take a *systemic* approach to it, rather than focusing on individual cases:

https://pluralistic.net/2022/03/12/move-slow-and-fix-things/#second-wave

I disagree with Musk about most things, but he is right about some things. Content moderation is terrible. End-to-end encryption for direct messages is good.

But this “I’d reinstate Trump” nonsense?

Not only do *I* disagree with that, but so does Musk.

Allegedly.