#neuroscience

Having a Good Listener Improves Your Brain Health

Supportive social interactions in adulthood are important for your ability to stave off cognitive decline despite brain aging or neuropathological changes such as those present in Alzheimer’s disease, a new study finds.

In the study, published in JAMA Network Open, researchers observed that simply having someone available most or all of the time whom you can count on to listen when you need to talk is associated with greater cognitive resilience. Cognitive resilience is a measure of the brain’s ability to function better than would be expected given the amount of physical aging or disease-related change in the brain, and many neurologists believe it can be boosted by engaging in mentally stimulating activities, physical exercise, and positive social interactions.

“We think of cognitive resilience as a buffer to the effects of brain aging and disease,” says lead researcher Joel Salinas, MD, the Lulu P. and David J. Levidow Assistant Professor of Neurology at NYU Grossman School of Medicine and member of the Department of Neurology’s Center for Cognitive Neurology. “This study adds to growing evidence that people can take steps, either for themselves or the people they care about most, to increase the odds they’ll slow down cognitive aging or prevent the development of symptoms of Alzheimer’s disease—something that is all the more important given that we still don’t have a cure for the disease.”

An estimated 5 million Americans are living with Alzheimer’s disease, a progressive condition that mostly affects people over 65 and interferes with memory, language, decision-making, and the ability to live independently. Dr. Salinas says that while the disease usually affects an older population, the results of this study indicate that people younger than 65 would benefit from taking stock of their social support. For every unit of decline in brain volume, individuals in their 40s and 50s with low listener availability had a cognitive age 4 years older than those with high listener availability.

“These four years can be incredibly precious. Too often we think about how to protect our brain health when we’re much older, after we’ve already lost a lot of time decades before to build and sustain brain-healthy habits,” says Dr. Salinas. “But today, right now, you can ask yourself if you truly have someone available to listen to you in a supportive way, and ask your loved ones the same. Taking that simple action sets the process in motion for you to ultimately have better odds of long-term brain health and the best quality of life you can have.”

Dr. Salinas also recommends that physicians consider adding this question to the standard social history portion of a patient interview: asking patients whether they have access to someone they can count on to listen to them when they need to talk.

“Loneliness is one of the many symptoms of depression, and has other health implications for patients,” says Dr. Salinas. “These kinds of questions about a person’s social relationships and feelings of loneliness can tell you a lot about a patient’s broader social circumstances, their future health, and how they’re really doing outside of the clinic.”

How the Study Was Conducted

Researchers used one of the longest-running and most closely monitored community-based cohorts in the U.S., the Framingham Heart Study (FHS), as the source of their study’s 2,171 participants, who had an average age of 63. FHS participants self-reported information on the availability of supportive social interactions including listening, good advice, love and affection, sufficient contact with people they’re close with, and emotional support.

Study participants’ cognitive resilience was measured as the relative effect of total cerebral volume on global cognition, using MRI scans and neuropsychological assessments taken as part of the FHS. Lower brain volumes tend to be associated with lower cognitive function, and in this study, researchers examined how individual forms of social support modified the relationship between cerebral volume and cognitive performance.
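The article does not spell out the statistical model, but a "modifying effect" of support on the volume–cognition relationship is usually expressed as an interaction (moderation) term. A minimal sketch of such a model, assuming a linear form and generic covariates (our assumptions, not necessarily the authors' exact specification):

```latex
\text{Cog}_i = \beta_0 + \beta_1 V_i + \beta_2 S_i + \beta_3 \,(V_i \times S_i) + \boldsymbol{\gamma}^{\top}\mathbf{x}_i + \varepsilon_i,
\qquad
\frac{\partial\,\text{Cog}_i}{\partial V_i} = \beta_1 + \beta_3 S_i
```

Here Cog is a global cognition score, V is total cerebral volume, S is a measure of a given form of support (such as listener availability), and x stands for hypothetical covariates such as age and sex. The second expression shows why the interaction matters: the slope linking brain volume to cognition depends on S, so a nonzero β3 means the level of support changes how closely cognition tracks brain volume.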

Individuals with greater availability of one specific form of social support had higher cognitive function relative to their total cerebral volume. That form of support was listener availability, and it was strongly associated with greater cognitive resilience.

Researchers note that further study of individual social interactions may improve understanding of the biological mechanisms that link psychosocial factors to brain health. “While there is still a lot that we don’t understand about the specific biological pathways between psychosocial factors like listener availability and brain health, this study gives clues about concrete, biological reasons why we should all seek good listeners and become better listeners ourselves,” says Dr. Salinas.

Scrap the nap: Study shows short naps don’t relieve sleep deprivation

A nap during the day won’t restore a sleepless night, says the latest study from Michigan State University’s Sleep and Learning Lab.

“We are interested in understanding cognitive deficits associated with sleep deprivation. In this study, we wanted to know if a short nap during the deprivation period would mitigate these deficits,” said Kimberly Fenn, associate professor at MSU, study author and director of MSU’s Sleep and Learning Lab. “We found that short naps of 30 or 60 minutes did not show any measurable effects.”

The study was published in the journal Sleep and is among the first to measure the effectiveness of shorter naps — which are often all people have time to fit into their busy schedules.

“While short naps didn’t show measurable effects on relieving the effects of sleep deprivation, we found that the amount of slow-wave sleep that participants obtained during the nap was related to reduced impairments associated with sleep deprivation,” Fenn said.

Slow-wave sleep, or SWS, is the deepest and most restorative stage of sleep. It is marked by high-amplitude, low-frequency brain waves and is the sleep stage when your body is most relaxed: your muscles are at ease, and your heart rate and respiration are at their slowest.

“SWS is the most important stage of sleep,” Fenn said. “When someone goes without sleep for a period of time, even just during the day, they build up a need for sleep; in particular, they build up a need for SWS. When individuals go to sleep each night, they will soon enter into SWS and spend a substantial amount of time in this stage.”

Fenn’s research team, which included MSU colleague Erik Altmann, professor of psychology, and Michelle Stepan, a recent MSU alumna currently working at the University of Pittsburgh, recruited 275 college-aged participants for the study.

The participants completed cognitive tasks on arriving at MSU’s Sleep and Learning Lab in the evening and were then randomly assigned to one of three groups: the first was sent home to sleep; the second stayed at the lab overnight and had the opportunity to take either a 30- or a 60-minute nap; and the third stayed overnight in the sleep-deprivation condition without napping at all.

The next morning, participants reconvened in the lab to repeat the cognitive tasks, which measured attention and placekeeping, or the ability to complete a series of steps in a specific order without skipping or repeating them — even after being interrupted.

“The group that stayed overnight and took short naps still suffered from the effects of sleep deprivation and made significantly more errors on the tasks than their counterparts who went home and obtained a full night of sleep,” Fenn said. “However, every 10-minute increase in SWS reduced errors after interruptions by about 4%.”

These numbers may seem small, but given the kinds of errors that sleep-deprived operators such as surgeons, police officers or truck drivers are likely to make, a 4% decrease in errors could potentially save lives, Fenn said.

“Individuals who obtained more SWS tended to show reduced errors on both tasks. However, they still showed worse performance than the participants who slept,” she said.

Fenn hopes the findings underscore the importance of prioritizing sleep and show that naps, even those that include SWS, cannot replace a full night of sleep.

Researchers Link Brain Memory Signals to Blood Sugar Levels

A set of brain signals known to help memories form may also influence blood sugar levels, finds a new study in rats.

Researchers at NYU Grossman School of Medicine discovered that a peculiar signaling pattern in the brain region called the hippocampus, linked by past studies to memory formation, also influences metabolism, the process by which dietary nutrients are converted into blood sugar (glucose) and supplied to cells as an energy source.

The study revolves around brain cells called neurons that “fire” (generate electrical pulses) to pass on messages. Researchers in recent years discovered that populations of hippocampal neurons fire within milliseconds of each other in cycles. The firing pattern is called a “sharp wave ripple” for the shape it takes when captured graphically by EEG, a technology that records brain activity with electrodes.

Published online in Nature, the new study found that clusters of hippocampal sharp wave ripples were reliably followed within minutes by decreases in blood sugar levels in the bodies of rats. While the details need to be confirmed, the findings suggest that the ripples may regulate the timing of the release of hormones, possibly including insulin, by the pancreas and liver, as well as of other hormones by the pituitary gland.

“Our study is the first to show how clusters of brain cell firing in the hippocampus may directly regulate metabolism,” says senior study author György Buzsáki, MD, PhD, the Biggs Professor of Neuroscience in the Department of Neuroscience and Physiology at NYU Langone Health.

“We are not saying that the hippocampus is the only player in this process, but that the brain may have a say in it through sharp wave ripples,” says Dr. Buzsáki, also a faculty member in the Neuroscience Institute at NYU Langone.

Insulin, which keeps blood sugar at normal levels, is released by pancreatic cells not continuously but periodically, in bursts. Because sharp wave ripples mostly occur during non-rapid eye movement (NREM) sleep, the study authors say the impact of sleep disturbance on sharp wave ripples may provide a mechanistic link between poor sleep and the high blood sugar levels seen in type 2 diabetes.

Previous work by Dr. Buzsáki’s team had suggested that the sharp wave ripples are involved in permanently storing each day’s memories the same night during NREM sleep, and his 2019 study found that rats learned faster to navigate a maze when ripples were experimentally prolonged.

“Evidence suggests that the brain evolved, for reasons of efficiency, to use the same signals to achieve two very different functions in terms of memory and hormonal regulation,” says corresponding study author David Tingley, PhD, a postdoctoral scholar in Dr. Buzsáki’s lab.

Dual Role

The hippocampus is a good candidate brain region for multiple roles, say the researchers, because of its wiring to other brain regions, and because hippocampal neurons have many surface proteins (receptors) sensitive to hormone levels, so they can adjust their activity as part of feedback loops. The new findings suggest that hippocampal ripples reduce blood glucose levels as part of such a loop.

“Animals could have first developed a system to control hormone release in rhythmic cycles, but then applied the same mechanism to memory when they later developed a more complex brain,” adds Dr. Tingley.

The study data also suggest that hippocampal sharp wave ripple signals reach the hypothalamus, which is known to innervate and influence the pancreas and liver, by way of an intermediate brain structure called the lateral septum. The researchers found that ripples may influence the lateral septum through amplitude alone (the degree to which hippocampal neurons fire at once), not through the order in which the ripples are combined, which may instead encode memories as the signals reach the cortex.

In line with this theory, short-duration ripples that occurred in clusters of more than 30 per minute, as seen during NREM sleep, induced a decrease in peripheral glucose levels several times larger than isolated ripples did. Importantly, silencing the lateral septum eliminated the impact of hippocampal sharp wave ripples on peripheral glucose.
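To make the "more than 30 per minute" criterion concrete, here is a minimal sketch of how ripple timestamps could be labeled by their local rate. The function name `classify_ripples`, the 60-second centred window and the "sparse" label are our assumptions for illustration; the article does not describe the authors' detection pipeline, and this is not it.

```python
from bisect import bisect_left, bisect_right
from typing import List


def classify_ripples(times_s: List[float], window_s: float = 60.0,
                     threshold_per_min: float = 30.0) -> List[str]:
    """Label each ripple 'clustered' or 'sparse' by its local ripple rate.

    Hypothetical rule (not the study's method): count the ripples inside a
    window of width `window_s` centred on each ripple, convert that count to
    ripples per minute, and call the ripple 'clustered' if the rate exceeds
    `threshold_per_min`. Labels are returned in sorted-time order.
    """
    times = sorted(times_s)
    half = window_s / 2.0
    labels: List[str] = []
    for t in times:
        # Number of ripples (including this one) within +/- half a window.
        n = bisect_right(times, t + half) - bisect_left(times, t - half)
        rate_per_min = n * 60.0 / window_s
        labels.append("clustered" if rate_per_min > threshold_per_min else "sparse")
    return labels


# Illustrative input (made-up timestamps in seconds): two lone ripples,
# then a dense run of 40 ripples spaced 0.75 s apart.
example = [0.0, 500.0] + [1000.0 + 0.75 * i for i in range(40)]
labels = classify_ripples(example)
print(labels.count("clustered"), "clustered,", labels.count("sparse"), "sparse")
```

Sorting once and using binary search keeps each rate lookup cheap, which matters for the many thousands of ripples a night of recording can produce.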

To confirm that hippocampal firing patterns caused the glucose level decrease, the team used a technology called optogenetics to artificially induce ripples by re-engineering hippocampal cells to include light-sensitive channels. Shining light on such cells through glass fibers induces ripples independent of the rat’s behavior or brain state (e.g., resting or waking). Similar to their natural counterparts, the synthetic ripples reduced sugar levels.

Moving forward, the research team will seek to extend its theory that several hormones could be affected by nightly sharp wave ripples, including through work in human patients. Future research may also reveal devices or therapies that can adjust ripples to lower blood sugar and improve memory, says Dr. Buzsáki.

(Image caption: Protein expression in the mouse brain for mass spectrometry analysis, visualized by fluorescence microscopy. Blue shows the outline of the brain. Green and magenta are selectively tagged proteins)

New technique identifies proteins in the living brain

For the first time, researchers have developed a successful approach for identifying proteins inside different types of neurons in the brain of a living animal.

Led by Northwestern University and the University of Pittsburgh, the new study is a major step toward understanding the brain’s millions of distinct proteins. As the building blocks of all cells, including neurons, proteins hold keys to better understanding complex brain diseases such as Parkinson’s and Alzheimer’s, and that understanding could lead to the development of new treatments.

The study was published in the journal Nature Communications.

In the new study, researchers designed a virus to deliver an enzyme to a precise location in the brain of a living mouse. Derived from soybeans, the enzyme chemically tags the proteins neighboring it in that predetermined location. After validating the technique by imaging the brain with fluorescence and electron microscopy, the researchers showed that it captures a snapshot of the entire set of proteins (the proteome) inside living neurons, which can then be analyzed postmortem with mass spectrometry.

“Similar work has been done before in cellular cultures. But cells in a dish do not work the same way they do in a brain, and they don’t have the same proteins in the same places doing the same things,” said Northwestern’s Yevgenia Kozorovitskiy, senior author of the study. “It’s a lot more challenging to do this work in the complex tissue of a mouse brain. Now we can take that proteomics prowess and put it into more realistic neural circuits with excellent genetic traction.”

By chemically tagging proteins and their neighbors, researchers can now see how proteins work within a specific, controlled area and how they work with one another in a proteome. In addition to the soybean enzyme, the researchers used a virus to deliver a separate green fluorescent protein.

“The virus essentially acts as a message that we deliver,” Kozorovitskiy said. “In this case, the message carried this special soybean enzyme. Then, in a separate message, we sent the green fluorescent protein to show us which neurons were tagged. If the neurons are green, then we know the soybean enzyme was expressed in those neurons.”

Kozorovitskiy is the Soretta and Henry Shapiro Research Professor of Molecular Biology, an associate professor of neurobiology in Northwestern’s Weinberg College of Arts and Sciences and a member of the Chemistry of Life Processes Institute. She co-led the work with Matthew MacDonald, an assistant professor of psychiatry at the University of Pittsburgh Medical Center.

Protein targeting plays catch-up

While genetic targeting has completely transformed biology and neuroscience, protein targeting has woefully lagged behind. Researchers can amplify and sequence genes and RNA to identify their exact building blocks. Proteins, however, cannot be amplified and sequenced in the same manner. Instead, researchers have to divide proteins into peptides and then put them back together, which is a slow and imperfect process.
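The "divide into peptides" step is commonly done with the enzyme trypsin, and search software then works backward by digesting candidate protein sequences in silico and matching the predicted peptides to the measured spectra. The article does not say which enzyme or software this study used, so the sketch below only illustrates the widely used simplified trypsin rule (cut after lysine K or arginine R, but not before proline P); `tryptic_digest` is a hypothetical helper name.

```python
import re
from typing import List


def tryptic_digest(sequence: str) -> List[str]:
    """Split a protein sequence (one-letter amino-acid codes) into peptides.

    Uses the common simplified trypsin rule: cut after lysine (K) or
    arginine (R) unless the next residue is proline (P). Real digests also
    contain missed cleavages, which this sketch ignores.
    """
    # Zero-width split point: preceded by K/R and not followed by P.
    pieces = re.split(r"(?<=[KR])(?!P)", sequence.upper())
    return [p for p in pieces if p]  # drop the empty string after a final K/R


print(tryptic_digest("AKPGRKLR"))  # ['AKPGR', 'K', 'LR']
```

Splitting on a zero-width lookaround keeps every residue in exactly one peptide, so joining the pieces recovers the original sequence.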

“We have been able to gain a lot of traction with genetic and RNA sequencing, but proteins have been out of the loop,” Kozorovitskiy said. “Yet everyone recognizes the importance of proteins. Proteins are the ultimate effectors in our cells. Understanding where proteins are, how they work and how they work relative to each other is really important.”

“Mass spectroscopy-based proteomics is a powerful technique,” said Vasin Dumrongprechachan, a Ph.D. candidate in Kozorovitskiy’s laboratory and the paper’s first author. “With our approach, we can start mapping the proteome of various brain circuits with high precision and specificity. We can even quantify them to see how many proteins are present in different parts of neurons and the brain.”

Next step: Better understanding brain diseases

Now that this new system has been validated and is ready to go, the researchers can apply it to mouse models of disease to better understand neurological illnesses.

“We are hoping to extend this approach to start identifying the biochemical modifications on neuronal proteins that occur during specific patterns of brain activity or with changes induced by neuroactive drugs to facilitate clinical advances,” Dumrongprechachan said.

“We look forward to taking this to models related to brain diseases and connect those studies to postmortem proteomics work in the human brain,” Kozorovitskiy said. “It’s ready to be applied to those models, and we can’t wait to get started.”

New findings on how ketamine acts against depression

The discovery that the anaesthetic ketamine can help people with severe depression has raised hopes of finding new treatment options for the disease. Researchers at Karolinska Institutet have now gained new mechanistic insight into how the drug exerts its antidepressant effect. The findings have been published in the journal Molecular Psychiatry.

According to the World Health Organization, depression is a leading cause of disability worldwide and the disease affects more than 360 million people every year.

The risk of developing depression is influenced by both genetic and environmental factors. The most commonly prescribed antidepressants, such as SSRIs, act on nerve signalling via monoamines in the brain.

However, it can take a long time for these drugs to help, and over 30 percent of sufferers experience no relief at all.

The need for new types of antidepressants with faster action and wider effect is therefore considerable.

An important breakthrough is the anaesthetic ketamine: a nasal spray containing esketamine, one of ketamine’s two mirror-image forms, has been approved for some years for people with treatment-resistant depression.

Relieves depressive symptoms quickly

Unlike classic antidepressants, ketamine affects the nerve signalling that occurs via the glutamate system, but it is unclear exactly how the antidepressant effect is mediated. When the medicine has an effect, it relieves depressive symptoms and suicidal thoughts very quickly.

However, ketamine can cause unwanted side effects such as hallucinations and delusions, and there may be a risk of abuse, so alternative medicines are needed.

The researchers want to better understand how ketamine works in order to find substances that can have the same rapid effect but without the side effects.

Explains ketamine’s effects

In a new study, researchers at Karolinska Institutet have further investigated the molecular mechanisms underlying ketamine’s antidepressant effects. Using experiments on both cells and mice, the researchers were able to show that ketamine reduced so-called presynaptic activity and the persistent release of the neurotransmitter glutamate.

“Elevated glutamate release has been linked to stress, depression and other mood disorders, so lowered glutamate levels may explain some of the effects of ketamine,” says Per Svenningsson, professor at the Department of Clinical Neuroscience, Karolinska Institutet, and the study’s last author.

Nerve signals pass from one neuron to the next at synapses, the small gaps where two neurons meet.

The researchers saw that ketamine directly stimulated AMPA receptors, which sit postsynaptically, that is, on the part of the nerve cell that receives signals. This leads to increased release of the neurotransmitter adenosine, which inhibits presynaptic glutamate release.

The researchers could counteract the effects of ketamine by inhibiting presynaptic adenosine A1 receptors.

“This suggests that the antidepressant action of ketamine can be regulated by a feedback mechanism. It is new knowledge that can explain some of the rapid effects of ketamine,” says Per Svenningsson.

In collaboration with Rockefeller University, the same research group has also recently reported on the disease mechanism in depression.

The findings, also published in the journal Molecular Psychiatry, show how the molecule p11 plays an important role in the onset of depression by affecting ependymal cells, which line the fluid-filled cavities (ventricles) of the brain, and the flow of cerebrospinal fluid.

Fight-or-flight response is altered in healthy young people who had COVID-19

New research published in The Journal of Physiology found that otherwise healthy young people diagnosed with COVID-19, regardless of their symptom severity, have problems with their nervous system when compared with healthy control subjects.

Specifically, the system which oversees the fight-or-flight response, the sympathetic nervous system, seems to be abnormal (overactive in some instances and underactive in others) in those recently diagnosed with COVID-19.

These results are especially important given the emerging evidence of symptoms like racing hearts being reported in conjunction with “long-COVID.”

Because of this alteration in the fight-or-flight response, especially if it is prolonged, many processes within the body could be disrupted or affected. This research team has specifically been looking at the impact on the cardiovascular system, including blood pressure and blood flow, but the sympathetic nervous system is also important in exercise responses, digestion, immune function, and more.

Understanding what happens in the body shortly following diagnosis of COVID-19 is an important first step towards understanding the potential long-term consequences of contracting the disease.

Importantly, if a similar disruption of the fight-or-flight response, like that found here in young individuals, is present in older adults following COVID-19 infection, there may be substantial adverse implications for cardiovascular health.

The researchers studied lung function, exercise capacity, vascular function, and neural cardiovascular control (the nervous system’s control of the heart and blood vessels).

They used a technique called microneurography, in which the researchers insert a tiny needle electrode into a nerve behind the knee. The electrode records the nerve’s electrical impulses, showing how many bursts of electrical activity occur and how large those bursts are.
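A minimal sketch of the two quantities described here, burst count (expressed as bursts per minute) and burst size, assuming an already-integrated nerve signal and a simple fixed threshold. Real microneurography analysis typically relies on more careful criteria (for example, aligning bursts with the heartbeat), so the threshold rule, the synthetic trace and the `detect_bursts` name are our illustrative assumptions, not the study's method.

```python
from typing import List, Tuple


def detect_bursts(signal: List[float], fs_hz: float, threshold: float
                  ) -> Tuple[int, float, List[float]]:
    """Count bursts in an already-integrated nerve recording.

    Hypothetical rule: a burst is any contiguous run of samples above
    `threshold`, and its size is the peak value within that run.
    Returns (number of bursts, bursts per minute, peak of each burst).
    """
    peaks: List[float] = []
    in_burst = False
    current_peak = 0.0
    for x in signal:
        if x > threshold:
            # Start a new burst or extend the current one, tracking its peak.
            current_peak = max(current_peak, x) if in_burst else x
            in_burst = True
        elif in_burst:
            # Signal fell back below threshold: the burst has ended.
            peaks.append(current_peak)
            in_burst = False
    if in_burst:  # recording ended in the middle of a burst
        peaks.append(current_peak)
    duration_min = len(signal) / fs_hz / 60.0
    rate = len(peaks) / duration_min if duration_min > 0 else 0.0
    return len(peaks), rate, peaks


# Illustrative synthetic trace: 60 s sampled at 10 Hz with three bursts.
trace = [0.0] * 600
trace[100:104] = [1.0, 3.0, 2.0, 1.0]
trace[300:303] = [2.0, 1.5, 1.2]
trace[500:506] = [1.0, 2.0, 5.0, 4.0, 2.0, 1.0]
count, per_minute, sizes = detect_bursts(trace, fs_hz=10.0, threshold=0.8)
print(count, per_minute, sizes)  # 3 3.0 [3.0, 2.0, 5.0]
```

Reporting bursts per minute rather than a raw count makes recordings of different lengths comparable, which is how resting activity in two groups could be contrasted.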

From this nerve activity, they can assess the function of the sympathetic nervous system through a series of tests. For all the tests, the participant lay on their back on a bed. First, the researchers looked at the baseline resting activity of the nerves, heart rate, and blood pressure. Resting sympathetic nerve activity was higher in the COVID-19 participants than in the healthy control participants.

Next, participants performed a “cold pressor test,” in which they placed a hand in an ice-water mixture (~0 °C) for two minutes. In healthy individuals, this causes a marked increase in sympathetic (fight-or-flight) nerve activity and blood pressure. The COVID-19 participants rated their pain substantially lower than healthy participants typically do.

Finally, the participant was tilted to an upright position (the bed can tilt up and down) to see how well their body responded to the change in position. The COVID-19 participants had a large increase in heart rate during this test, and they also had higher sympathetic nerve activity throughout the tilt test than other healthy young adults.

As with all research on humans, this study has limitations. The biggest is its cross-sectional nature; in other words, we do not know what the COVID-19 subjects’ nervous system activity “looked like” before they were diagnosed with COVID-19.

These findings are consistent with the increasing reports of long-COVID symptoms pertaining to problems with the fight-or-flight response.

Abigail Stickford, senior author of the study, said, “Through our collaborative project, we have been following this cohort of COVID-19 subjects for 6 months following their positive test results. This work was representative of short-term data, so the next steps for us are to wrap up data collection and interpret how the subjects have changed over this time.”

Neurons that respond to touch are less picky than expected

Researchers used to believe that individual primary touch-sensitive neurons each responded neatly to specific types of touch. Now a Northwestern University study finds that touch-sensitive neurons communicate touch in a much messier, more jumbled manner.

In the study, the team developed a new technique to stimulate rats’ whiskers in three dimensions while simultaneously recording first-stage touch-sensitive neurons in the rats’ brains. The researchers discovered that, instead of responding to distinct types of touch, these neurons — which are the first to receive early touch signals — responded to many types of touch and to varying degrees.

The research was published online in the Proceedings of the National Academy of Sciences.

“Many people used to think that each neuron was very precisely tuned for some aspect of the touch stimulus,” said Northwestern’s Mitra Hartmann, one of the study’s senior authors. “We didn’t find that at all. Some neurons respond more than others to some features of the stimulus, so there is some degree of tuning. But these neurons respond to many combinations of different forces and torques applied to the whisker.”

“When we compared all the recorded neurons, we found that the stimuli they responded to overlapped with each other but not perfectly,” added Nicholas Bush, the paper’s first author. “It’s similar to a painter’s palette: We expected to find a handful of ‘primary colors,’ where a neuron could be one of a few different types. But we found the ‘palette’ had already been mixed. Each neuron was a little different from all the others, but together they covered an entire spectrum.”

Hartmann is a professor of biomedical and mechanical engineering at Northwestern’s McCormick School of Engineering, where she is a member of the Center for Robotics and Biosystems. A former Ph.D. candidate in Hartmann’s laboratory, Bush now is a postdoctoral fellow at the Seattle Children’s Research Institute. Sara Solla, a professor of physiology at Northwestern University Feinberg School of Medicine and of physics and astronomy in the Weinberg College of Arts and Sciences, is the other senior author of the study.

Previous studies ‘too restricted, simplified’

With just a brush of their whiskers, rats can extract detailed information from their environments, including an object’s distance, orientation, shape and texture. This keen ability makes the rat’s sensory system ideal for studying the relationship between mechanics (the moving whisker) and sensory input (touch signals sent to the brain). But despite the popularity of using the whisker system to explore the mystery of touch, many long-standing questions remain.

Although large regions of the brain are dedicated to processing touch signals, the number of primary sensory neurons that first acquire tactile information is relatively small and little understood. “There is an ‘information bottleneck,’” Bush said. “We wanted to know how the neurons that sense touch are capable of acquiring and representing complex information despite this bottleneck.”

While previous studies have explored how neurons respond to a stimulated whisker, those studies were unable to realistically replicate natural touch. In many studies, for example, researchers clipped an anesthetized rat’s whisker to about a centimeter in length and then precisely moved the whisker back and forth, micrometers at a time.

“This doesn’t at all capture the full flexibility of the whisker,” Hartmann said. “It’s not how a rat would move in a natural environment. It’s a precise stimulation, which gives a precise response, but it’s too restricted and simplified.”

New comprehensive technique

In Northwestern’s study, however, the researchers left the whisker intact and manually stimulated it through a comprehensive range of motions, directions, speeds and forces, up and down the full length of the whisker. Using implanted electrodes to measure the electrical activity of neurons that received the touch signals, the researchers quantified how these neurons responded to a broad range of mechanical signals, much closer to what a real rat would experience in its natural environment. Ultimately, they found that all neurons responded — albeit some more than others — to all different types of stimuli.

“This finding suggests that the sense of touch may be capable of very complex tactile feats because it doesn’t throw away or filter much information at that first stage of sensory acquisition before information gets to higher processing centers in the brain,” Bush said. “Rather, these early sensory neurons are relaying a high-fidelity — but ‘jumbled’ or ‘messy’ — representation. The brain has to be, and clearly is, capable of sorting through the jumble and reconciling it with all the other information available to interpret the sense of touch.”

Next, Hartmann and her team plan to combine this new finding with WHISKiT, the first 3D simulation of a rat’s whisker system, which was recently developed in her laboratory.

“We can use the WHISKiT model to simulate the mechanical signals that will occur during natural whisking behavior, and then simulate how a population of first-stage touch neurons would respond to those mechanical signals,” Hartmann said. “Simulation scenarios will be based on the results we uncovered in this latest study.”

Neural Network Model Shows Why People with Autism Read Facial Expressions Differently

People with autism spectrum disorder often have difficulty interpreting facial expressions.

Using a neural network model that simulates brain processing on a computer, a group of researchers based at Tohoku University has shed light on how this difficulty arises.

The journal Scientific Reports published the results.

“Humans recognize different emotions, such as sadness and anger by looking at facial expressions. Yet little is known about how we come to recognize different emotions based on the visual information of facial expressions,” said paper coauthor Yuta Takahashi.

“It is also not clear what changes occur in this process that leads to people with autism spectrum disorder struggling to read facial expressions.”

The research group employed predictive processing theory to help understand more. According to this theory, the brain constantly predicts the next sensory stimulus and adapts when its prediction is wrong. Sensory information, such as facial expressions, helps reduce prediction error.
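The predict, compare, adapt loop at the heart of predictive processing can be illustrated with a far simpler learner than the one in the study. In this toy sketch (our assumption throughout: the linear predictor, the delta-rule update, the learning rate and the sine-wave input are illustrative stand-ins, not the Tohoku group's neural network model), a predictor guesses the next value of a signal from its last two values and adjusts its weights whenever the guess is wrong.

```python
import math
from typing import List, Tuple


def learn_to_predict(signal: List[float], order: int = 2, lr: float = 0.1,
                     epochs: int = 50) -> Tuple[List[float], List[float]]:
    """Toy predict-compare-adapt loop (least-mean-squares / delta rule).

    At each step, predict the next sample from the previous `order` samples,
    measure the prediction error, and nudge the weights to shrink it.
    Returns the learned weights and the mean squared error of each epoch.
    """
    w = [0.0] * order
    epoch_errors: List[float] = []
    for _ in range(epochs):
        total = 0.0
        for t in range(order, len(signal)):
            past = signal[t - order:t]
            prediction = sum(wi * xi for wi, xi in zip(w, past))
            error = signal[t] - prediction  # prediction error
            total += error * error
            # Adapt: move each weight in the direction that reduces the error.
            w = [wi + lr * error * xi for wi, xi in zip(w, past)]
        epoch_errors.append(total / (len(signal) - order))
    return w, epoch_errors


# A smooth, predictable input (a hypothetical stand-in for a moving facial feature).
signal = [math.sin(0.3 * t) for t in range(100)]
weights, errors = learn_to_predict(signal)
print(f"first epoch MSE {errors[0]:.4f}, last epoch MSE {errors[-1]:.2e}")
```

Because a sine wave is exactly predictable from its previous two values, the prediction error shrinks toward zero as the weights adapt, which is the "adapts when its prediction is wrong" step in miniature.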

The artificial neural network model incorporated predictive processing theory and reproduced the developmental process by learning to predict how parts of the face would move in videos of facial expressions. Through this learning, clusters corresponding to different emotions self-organized in the model’s higher-level neuron space, even though the model was never told which emotion each video showed.

The model could also generalize to unfamiliar facial expressions that were not part of its training, reproducing the movements of facial parts while minimizing prediction errors.

Next, the researchers induced abnormalities in the activity of the model’s neurons to investigate the effects on learning development and cognitive characteristics. When the heterogeneity of activity across the neural population was reduced, the model’s generalization ability decreased and the formation of emotion clusters in higher-level neurons was inhibited. As a result, the model tended to fail at identifying the emotion of unfamiliar facial expressions, mirroring a symptom of autism spectrum disorder.

According to Takahashi, the study showed that predictive processing theory, implemented in a neural network model, can explain emotion recognition from facial expressions.

“We hope to further our understanding of the process by which humans learn to recognize emotions and the cognitive characteristics of people with autism spectrum disorder,” added Takahashi. “The study will help advance the development of appropriate intervention methods for people who find it difficult to identify emotions.”

Novel research identifies gene targets of stress hormones in the brain

Chronic stress is a well-known cause of mental health disorders. New research has taken a step forward in understanding how glucocorticoid hormones (‘stress hormones’) act upon the brain and what their function is. The findings could lead to more effective strategies for the prevention and treatment of mental health disorders.

(Image caption: A magnified image of developing young human neurons. The mineralocorticoid receptor, coloured red, was found in the cell nucleus of these neurons. Credit: University of Bristol)

The study, led by academics at the University of Bristol and published in Nature Communications, has discovered a link between two corticosteroid receptors, the mineralocorticoid receptor (MR) and the glucocorticoid receptor (GR), and ciliary and neuroplasticity genes in the hippocampus, a region of the brain involved in stress coping and in learning and memory.

The aim of the research was to find out which genes MR and GR interact with across the entire hippocampus genome, both during normal circadian variation and after exposure to acute stress. The research team also wanted to discover whether any such interaction would change the expression and functional properties of these genes.

The study combined advanced next-generation sequencing, bioinformatics and pathway analysis technologies to gain a greater understanding of glucocorticoid hormone action, via MRs and GRs, on gene activity in the hippocampus.

The researchers found a previously unknown link between the MR and cilia function. Cilia are small hair-like structures that protrude from cell bodies. Effective cilia function is vitally important for brain development and ongoing brain plasticity, but how their structure and function are regulated in neurons is largely unknown.

The discovery of a novel role for MR in cilia structure and function during neuronal development has increased knowledge of the role of these cell structures in the brain. In the future, it could help in addressing cilia-related developmental disorders.

The team also found that MR and GR interact with many genes involved in neuroplasticity processes, such as neuron-to-neuron communication and learning and memory. Some of these genes have also been linked to the development of mental health disorders such as major depression, anxiety and PTSD, as well as schizophrenia spectrum disorders. Consequently, dysfunctional glucocorticoid hormone signalling, as observed in chronic stress, could harm mental health through its action on these vulnerability genes. This would provide a potential new mechanism to explain the long-known involvement of glucocorticoids in the aetiology of mental health disorders.

Further research on the role glucocorticoid hormones play in the regulation of these genes is needed. Even so, the findings help bridge the gap between the long-known involvement of glucocorticoids in mental health disorders and the existence of vulnerability genes.

Hans Reul, Professor of Neuroscience in Bristol Medical School: Translational Health Sciences (THS), said: “This research is a substantial step forward in our efforts to understand how these powerful glucocorticoid hormones act upon the brain and what their function is.

“We hope that our findings will trigger new targeted research into the role these hormones play in the aetiology of severe mental disorders like depression, anxiety and PTSD.”

The next steps for the research include studying how glucocorticoid hormone action via MR and GR on the hippocampus genome changes under chronic stress conditions. Thanks to a new BBSRC grant, the team will also study how glucocorticoids act via MR and GR upon the genome of the female brain. Very little is known about this area in females, as most studies on stress and glucocorticoid hormones have been conducted in males.

The study, supported by the BBSRC and a Wellcome Trust Neural Dynamics PhD studentship, was carried out by the Neuro-Epigenetics Research Group led by Professor Hans Reul and Dr Karen Mifsud. They collaborated with Bristol’s Stem Cell Biology Group (Dr Oscar Cordero Llana and Ms Andriana Gialeli) and with sequencing specialists and bioinformaticians at the University of Oxford.

Does Visual Feedback of Our Tongues Help in Speech Motor Learning?

When we speak, although we may not be aware of it, we use our auditory and somatosensory systems to monitor the results of the movements of our tongue or lips.

This sensory information plays a critical role in how we learn to speak and maintain accuracy in these behaviors throughout our lives. Since we cannot typically see our own faces and tongues while we speak, however, the potential role of visual feedback has remained less clear.

In the Journal of the Acoustical Society of America, University of Montreal and McGill University researchers present a study exploring how readily speakers will integrate visual information about their tongue movements — captured in real time via ultrasound — during a speech motor learning task.

“Participants in our study, all typical speakers of Quebec French, wore a custom-molded prosthesis in their mouths to change the shape of their hard palate, just behind their upper teeth, to disrupt their ability to pronounce the sound ‘s’ — in effect causing a temporary speech disorder related to this sound,” said Douglas Shiller, an associate professor at the University of Montreal.

One group received visual feedback of their tongue from an ultrasound sensor placed under the chin. The sensor was oriented to image the sagittal plane (a slice down the midline, front to back).

A second group also received visual feedback of their tongue. In this case, the sensor was rotated 90 degrees relative to the first condition, providing an image of the tongue in the coronal plane (across the tongue from left to right).

A third group, the control group, received no visual feedback of their tongue.

All participants were given the opportunity to practice the “s” sound with the prosthesis in place for 20 minutes.

“We compared the acoustic properties of the ‘s’ across the three groups to determine to what degree visual feedback enhanced the ability to adapt tongue movements to the perturbing effects of the prosthesis,” said Shiller.

As expected, participants in the coronal visual feedback group improved their “s” production to a greater degree than those receiving no visual feedback.

“We were surprised, however, to find participants in the sagittal visual feedback group performed even worse than the control group that received no visual feedback,” said Shiller. “In other words, visual feedback of the tongue was found to either enhance motor learning or interfere with it, depending on the nature of the images being presented.”

The group’s findings broadly support the idea that ultrasound tools can improve speech learning outcomes, for example in the treatment of speech disorders or in learning new sounds in a second language.

“But care must be taken in precisely how visual information is selected and presented to a patient,” said Shiller. “Visual feedback that isn’t compatible with the demands of the speaking task may interfere with the person’s natural mechanisms of speech learning — potentially leading to worse outcomes than no visual feedback at all.”

To Do or Not to Do: Cracking the Code of Motivation

Our motivation to put in effort to achieve a goal is controlled by a reward system wired into the brain. However, many neuropathological conditions impair the reward system, diminishing the will to work. Recently, scientists in Japan experimentally manipulated the reward system network of monkeys and studied their behavior. They deciphered a few critical missing pieces of the reward system puzzle that might eventually help increase motivation.

Why do we do things? What persuades us to put in effort to achieve goals, however mundane? What, for instance, drives us to search for food? Neurologically, the answer is hidden in the brain’s reward system. This evolutionary mechanism controls our willingness to work or take risks as the cost of achieving our goals and enjoying the perceived rewards. In people with depression, schizophrenia, or Parkinson’s disease, the brain’s reward system is often impaired, leading to diminished motivation for work or to chronic fatigue.

To find a way to overcome these debilitating behavioral blocks, neuroscientists are investigating the “anatomy” of the reward system and determining how it evaluates the cost-benefit trade-off when deciding whether to pursue a task. Recently, Dr. Yukiko Hori of the National Institutes for Quantum and Radiological Science and Technology, Japan, and her colleagues conducted a study that answered some of the most critical questions about benefit- and cost-based motivation in the reward system. The findings of their study have been published in PLoS Biology.

Discussing what prompted them to undertake the study, Dr. Hori explains, “Mental responses such as ‘feeling more costly and being too lazy to act,’ are often a problem in patients with mental disorders such as depression, and the solution lies in the better understanding of what causes such responses. We wanted to look deeper into the mechanism of motivational disturbances in the brain.”

To do so, Dr. Hori and her team focused on dopamine (DA), the neurotransmitter, or signaling molecule, that plays the central role in inducing motivation and regulating behavior based on cost-benefit analysis. DA exerts its effects in the brain through DA receptors. These molecular anchors bind DA molecules and propagate the signals through the brain’s neuronal network. Because these receptors have distinct roles in DA signal transduction, it was imperative to assess their relative contributions to DA signaling. Therefore, using macaque monkeys as models, the researchers aimed to decipher the roles of two classes of DA receptors, the D1-like receptor (D1R) and the D2-like receptor (D2R), in benefit- and cost-based motivation.

In their study, the researchers first trained the animals to perform “reward size” tasks and “work/delay” tasks. These tasks allowed them to measure how perceived reward size and required effort influenced task-performing behavior. Dr. Takafumi Minamimoto, the corresponding author of the study, explains, “We systematically manipulated the D1R and D2R of these monkeys by injecting them with specific receptor-binding molecules that dampened their biological responses to DA signaling. Using positron emission tomography-based imaging of the animals’ brains, we measured the extent of receptor binding or blockade.” Then, under experimental conditions, the researchers offered the monkeys the chance to perform tasks to obtain rewards. They noted whether the monkeys accepted or refused to perform the tasks and how quickly they responded to the task-related cues.

Analysis of these data unearthed some intriguing insights into the neurobiological mechanism of decision-making. The researchers observed that decision-making based on perceived benefit and cost required the involvement of both D1R and D2R. This applied both to incentive motivation (the process by which the size of the reward inspired the monkeys to perform the tasks) and to delay discounting (the tendency to prefer immediate, smaller rewards over larger but delayed rewards). It also became clear that DA transmission via D1R and D2R regulates motivation through distinct processes for benefits (“reward availability”) and costs (“energy expenditure associated with the task”). However, workload discounting, the process of discounting the value of a reward in proportion to the effort needed, was related exclusively to D2R manipulation.
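Delay and workload discounting are often modelled with a hyperbolic function, in which a reward’s subjective value falls as its cost grows. The sketch below assumes that common form, value = reward / (1 + k × cost). The article does not say which equation the study fitted, and the function names and discount rates `k` are hypothetical. The example shows how a steeper discount rate can tip a choice toward a smaller, immediate reward.

```python
def discounted_value(reward, cost, k):
    """Hyperbolic discounting (assumed form): value = reward / (1 + k * cost).

    `cost` can stand for a delay or a workload; `k` is a hypothetical
    discount rate, where a larger k means steeper discounting.
    """
    if cost < 0 or k < 0:
        raise ValueError("cost and k must be non-negative")
    return reward / (1.0 + k * cost)


def prefers_delayed(small_now, large_later, delay, k):
    """True if the discounted larger, later reward beats the immediate one."""
    return discounted_value(large_later, delay, k) > discounted_value(small_now, 0, k)


if __name__ == "__main__":
    # Shallow discounting: 10 / (1 + 0.1 * 10) = 5.0, which beats 4 now.
    print(prefers_delayed(small_now=4, large_later=10, delay=10, k=0.1))
    # Steeper discounting: 10 / (1 + 0.5 * 10) is about 1.67, so 4 now wins.
    print(prefers_delayed(small_now=4, large_later=10, delay=10, k=0.5))
```

In this framing, a manipulation that “increases delay discounting” corresponds to a larger `k` for delays. Workload discounting would use the same shape with effort as the cost.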

Dr. Hori emphasizes, “The complementary roles of the two dopamine receptor subtypes that our study revealed in the computation of the cost-benefit trade-off to guide action will help us decipher the pathophysiology of psychiatric disorders.” Their research raises the hope that manipulating the brain’s inbuilt reward system to enhance motivation could one day improve many people’s lives.

(Image caption: Primitive olfactory receptors of the jumping bristletail are thought to be amongst the most evolutionarily ancient versions of olfactory receptors in insects. Credit: Katja Schulz, CC BY 2.0)

Study reveals how smell receptors work

All senses must reckon with the richness of the world, but nothing matches the challenge faced by the olfactory system that underlies our sense of smell. We need only three types of receptors in our eyes to sense all the colors of the rainbow, because different hues emerge as light waves that vary across just one dimension, their frequency. The vibrant, colorful world, however, pales in comparison to the complexity of the chemical world. It contains many millions of odors, each composed of hundreds of molecules, all varying greatly in shape, size and properties. The smell of coffee, for instance, emerges from a combination of more than 200 structurally diverse chemical components, none of which actually smells like coffee on its own.

“The olfactory system has to recognize a vast number of molecules with only a few hundred odor receptors or even less,” says Rockefeller neuroscientist Vanessa Ruta. “It’s clear that it had to evolve a different kind of logic than other sensory systems.”

In a new study, Ruta and her colleagues offer answers to the decades-old question of odor recognition by providing the first-ever molecular views of an olfactory receptor at work.

The findings, published in Nature, reveal that olfactory receptors indeed follow a logic rarely seen in other receptors of the nervous system. While most receptors are precisely shaped to pair with only a few select molecules in a lock-and-key fashion, most olfactory receptors each bind to a large number of different molecules. Their promiscuity in pairing with a variety of odors allows each receptor to respond to many chemical components. From there, the brain can figure out the odor by considering the activation pattern of combinations of receptors.
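A small, hypothetical example can illustrate this combinatorial logic. The three receptors and their ligand lists below are invented for illustration; only eugenol and DEET come from the study described here. The set-overlap rule is also a simplification. A receptor is treated as active if it binds any component of an odor, and the odor is then identified from the overall on/off pattern across receptors.

```python
# Hypothetical, broadly tuned receptors: each responds to several molecules.
RECEPTORS = {
    "R1": frozenset({"eugenol", "DEET", "linalool"}),
    "R2": frozenset({"eugenol", "hexanol"}),
    "R3": frozenset({"linalool", "hexanol", "vanillin"}),
}


def activation_pattern(odor_components):
    """On/off response of each receptor (in RECEPTORS order) to a mixture."""
    return tuple(bool(ligands & odor_components) for ligands in RECEPTORS.values())


def build_lookup(known_odors):
    """Map each activation pattern to the known odors that produce it."""
    lookup = {}
    for name, components in known_odors.items():
        lookup.setdefault(activation_pattern(components), []).append(name)
    return lookup


if __name__ == "__main__":
    known = {
        "odor A": frozenset({"eugenol"}),
        "odor B": frozenset({"vanillin"}),
        "odor C": frozenset({"DEET", "hexanol"}),
    }
    for pattern, names in build_lookup(known).items():
        print(pattern, names)
```

With n receptors there are up to 2^n − 1 distinct non-empty on/off patterns. In principle, this is how a few hundred broadly tuned receptors can distinguish a vast number of odors. Real receptors respond in a graded rather than all-or-none fashion, and two odors can still collide on the same pattern.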

Holistic recognition

Olfactory receptors were discovered 30 years ago. But scientists have not been able to see them up close and decipher their structural and mechanistic workings, in part because these receptors didn’t lend themselves to commonly available molecular imaging methods. Complicating the matter, there seems to be no rhyme or reason to the receptors’ preferences—an individual odor receptor can respond to compounds that are both structurally and chemically different.

“To form a basic understanding of odorant recognition we need to know how a single receptor can recognize multiple different chemicals, which is a key feature of how the olfactory system works and has been a mystery,” says Josefina del Mármol, a postdoc in Ruta’s lab.

So Ruta and del Mármol, along with Mackenzie Yedlin, a research assistant in the lab, set out to solve an odor receptor’s structure, taking advantage of recent advances in cryo-electron microscopy. This technique, which involves beaming electrons at a frozen specimen, can reveal extremely small molecular structures in 3D, down to their individual atoms.

The team turned to the jumping bristletail, a ground-dwelling insect whose genome was recently sequenced and which has only five kinds of olfactory receptors. Although the jumping bristletail’s olfactory system is simple, its receptors belong to a large family with tens of millions of variants thought to exist across hundreds of thousands of insect species. Despite their diversity, these receptors function the same way. They form an ion channel (a pore through which charged particles flow) that opens only when the receptor encounters its target odorant, ultimately activating the sensory cells that initiate the sense of smell.

The researchers chose OR5, a receptor from the jumping bristletail with broad recognition ability, responding to 60 percent of the small molecules they tested.

They then examined OR5’s structure alone and also bound to a chemical, either eugenol, a common odor molecule, or DEET, the insect repellent. “We learned a lot from comparing these three structures,” Ruta says. “One of the beautiful things you can see is that in the unbound structure the pore is closed, but in the structure where it’s bound with either eugenol or DEET, the pore has dilated and provides a pathway for ions to flow.”

With the structures in hand, the team looked closer to see exactly where and how the two chemically different molecules bind to the receptor. There have been two ideas about odor receptors’ interactions with molecules. One is that the receptors have evolved to distinguish large swaths of molecules by responding to a partial but defining feature of a molecule, such as a part of its shape. Other researchers have proposed that each receptor has multiple binding pockets on its surface, allowing it to accommodate a number of different molecules.

But what Ruta found fit neither of those scenarios. It turned out that both DEET and eugenol bind at the same location and fit entirely inside a simple pocket within the receptor. And surprisingly, the amino acids lining the pocket didn’t form strong, selective chemical bonds with the odorants, but only weak bonds. In most other systems, receptors and their target molecules are good chemical matches; here they seemed more like friendly acquaintances. “These kinds of nonspecific chemical interactions allow different odorants to be recognized. In this way, the receptor is not selective to a specific chemical feature. Rather, it’s recognizing the more general chemical nature of the odorant,” Ruta says.

Computational modeling further revealed that the same pocket could accommodate many other odor molecules in just the same way.

But the receptor’s promiscuity doesn’t mean it has no specificity, Ruta says. Although each receptor responds to a large number of molecules, it is insensitive to others. Moreover, a simple mutation in the amino acids of the binding site would broadly reconfigure the receptor, changing the molecules with which it prefers to bind. This latter finding also helps to explain how insects have been able to evolve many millions of odor receptor varieties suited for the wide range of lifestyles and habitats they encounter.

The findings are likely representative of many olfactory receptors, Ruta says. “They point to key principles in odorant recognition, not only in insects’ receptors but also in receptors within our own noses that must also detect and discriminate the rich chemical world.”

Trains in the Brain—Scientists Uncover Switching System Used in Information Processing and Memory

A team of scientists has uncovered a system in the brain used in the processing of information and in the storing of memories—akin to how railroad switches control a train’s destination. The findings offer new insights into how the brain functions.

“Researchers have sought to identify neural circuits that have specialized functions, but there are simply too many tasks the brain performs for each circuit to have its own purpose,” explains André Fenton, a professor of neural science at New York University and the senior author of the study, which appears in the journal Cell Reports. “Our results reveal how the same circuit takes on more than one function. The brain diverts ‘trains’ of neural activity from encoding our experiences to recalling them, showing that the same circuits have a role in both information processing and in memory.”

This newly discovered dynamic shows how the brain functions more efficiently than previously realized.

“When the same circuit performs more than one function, synergistic, creative, and economic interactions become possible,” Fenton adds.

To explore the role of brain circuits, the researchers examined the mouse hippocampus, a brain structure long known to play a significant role in memory. They investigated how the hippocampus switches from encoding the current location to recollecting a remote location. In the experiments, mice navigated a surface and received a mild shock if they touched certain areas, prompting the encoding of information. When the mice subsequently returned to this surface, they avoided the area where they’d previously received the shock, evidence that memory influenced their movement. Analysis of neural activity revealed a switching mechanism in the hippocampus. Specifically, the scientists found that a particular pattern of neuronal population activity served to coordinate changes in brain function. This pattern is a dentate spike originating from the medial entorhinal cortex (DSM).

“Railway switches control each train’s destination, whereas dentate spikes switch hippocampus information processing from encoding to recollection,” observes Fenton. “Like a railway switch diverts a train, this dentate spike event diverts thoughts from the present to the past.”

The music of silence: imagining a song triggers similar brain activity to moments of mid-music silence

Imagining a song triggers brain activity similar to that seen during moments of silence in music, according to a pair of just-published studies in the Journal of Neuroscience (1,2).

The results collectively reveal how the brain continues responding to music, even when none is playing, and provide new insights into how human sensory predictions work.

Music is more than a sensory experience

When we listen to music, the brain attempts to predict what comes next. A surprise, such as a loud note or disharmonious chord, increases brain activity.

To isolate the brain’s prediction signal from the signal produced in response to the actual sensory experience, researchers used electroencephalography (EEG) to measure the brain activity of musicians while they listened to or imagined Bach piano melodies.

When the musicians imagined music, their brain activity had the opposite electrical polarity to the activity recorded while they listened to it, indicating different brain activations. However, the same type of activity seen during imagery also occurred during silent moments in the songs, when listeners would have expected a note but none was played.

Explaining the significance of the results, Giovanni Di Liberto, Assistant Professor in Intelligent Systems in Trinity College Dublin’s School of Computer Science and Statistics, said:

“There is no sensory input during silence and imagined music, so the neural activity we discovered is coming purely from the brain’s predictions, i.e., the brain’s internal model of music. Even though the silent time-intervals do not have an input sound, we found consistent patterns of neural activity in those intervals, indicating that the brain reacts to both notes and silences of music.

“Ultimately, this underlines that music is more than a sensory experience for the brain, as it engages the brain in a continuous attempt to predict upcoming musical events. Our study has isolated the neural activity produced by that prediction process. And our results suggest that such prediction processes are at the foundation of both music listening and imagery.

“We used music listening in these studies to investigate brain mechanisms of sound processing and sensory prediction, but these curious findings have wider implications – from boosting our basic, fundamental scientific understanding, to applied settings such as in clinical research.

“For example, imagine a cognitive assessment protocol involving music listening. From a few minutes of EEG recordings during music listening, we could derive several useful cognitive indicators, as music engages a variety of brain functions, from sensory and prediction processes to emotions. Furthermore, consider that music listening is much more pleasant than existing tasks.”

(Image caption: Children attending the after school music club. Credit: Mari Tervaniemi)

Learning foreign languages can affect the processing of music in the brain

Research Director Mari Tervaniemi from the University of Helsinki’s Faculty of Educational Sciences worked with researchers from Beijing Normal University (BNU) and the University of Turku. Together they investigated the link in the brain between language acquisition and music processing in Chinese elementary school pupils aged 8–11. For one school year, the researchers monitored children who attended either a music training programme or a similar programme for the English language. Brain responses associated with auditory processing were measured in the children before and after the programmes. Tervaniemi compared the results to those of children who attended other training programmes.

“The results demonstrated that both the music and the language programme had an impact on the neural processing of auditory signals,” Tervaniemi says.

Learning achievements extend from language acquisition to music

Surprisingly, attendance in the English training programme enhanced the processing of musically relevant sounds, particularly in terms of pitch processing.

“A possible explanation for the finding is the language background of the children, as understanding Chinese, which is a tonal language, is largely based on the perception of pitch, which potentially equipped the study subjects with the ability to utilise precisely that trait when learning new things. That’s why attending the language training programme facilitated the early neural auditory processes more than the musical training.”

Tervaniemi says that the results support the notion that musical and linguistic brain functions are closely linked in the developing brain. Both music and language acquisition modulate auditory perception. However, whether they produce similar or different results in the developing brain of school-age children has not been systematically investigated in prior studies.

At the start of the training programmes, 120 children were studied using electroencephalogram (EEG) recordings. More than 80 of them also took part in EEG recordings a year later, after the programmes ended.

In the music training, the children had the opportunity to sing a lot: they were taught to sing from both hand signs and sheet music. The language training programme emphasised the combination of spoken and written English, learned simultaneously. English also uses an orthography that is different from that of Chinese. The one-hour sessions were held twice a week after school on school premises throughout the school year, with roughly 20 children and two teachers attending at a time.

“In both programmes the children liked the content of the lessons, which was very interactive and had many means to support communication between the children and the teacher,” says Professor Sha Tao, who led the study in Beijing.

Motivation depends on how the brain processes fatigue

Fatigue – the feeling of exhaustion from doing effortful tasks – is something we all experience daily. It makes us lose motivation and want to take a break. Although scientists understand the mechanisms the brain uses to decide whether a given task is worth the effort, the influence of fatigue on this process is not yet well understood.

A team of researchers from the University of Oxford and the University of Birmingham conducted a study to investigate the impact of fatigue on a person’s decision to exert effort. They found that fatigued people were less likely to work and exert effort, even for a reward. The results are published in Nature Communications.

Intriguingly, the researchers found that there were two different types of fatigue that were detected in distinct parts of the brain. In the first, fatigue is experienced as a short-term feeling, which can be overcome after a short rest. Over time, however, a second, longer term feeling builds up, stops people from wanting to work, and doesn’t go away with short rests.

“We found that people’s willingness to exert effort fluctuated moment by moment, but gradually declined as they repeated a task over time,” says Tanja Müller, first author of the study, based at the University of Oxford. “Such changes in the motivation to work seem to be related to fatigue – and sometimes make us decide not to persist.”

The team tested 36 young, healthy people on a computer-based task, where they were asked to exert physical effort to obtain differing amounts of monetary rewards. The participants completed more than 200 trials and in each, they were asked if they would prefer to ‘work’ – which involved squeezing a grip force device – and gain the higher rewards offered, or to rest and only earn a small reward.

The team built a mathematical model to predict how much fatigue a person would be feeling at any point in the experiment, and how much that fatigue was influencing their decisions of whether to work or rest.
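The sketch below shows one rough way a model could track two kinds of fatigue. It is loosely inspired by the description above and does not reproduce the authors’ equations. The update rules, the way fatigue inflates the cost of effort, and every parameter value (`alpha`, `delta`, `theta`) are hypothetical assumptions. Short-term (recoverable) fatigue rises with each bout of work and falls during rest, while long-term (unrecoverable) fatigue only accumulates.

```python
from dataclasses import dataclass


@dataclass
class FatigueState:
    recoverable: float = 0.0    # short-term fatigue; dissipates with rest
    unrecoverable: float = 0.0  # long-term fatigue; keeps building with work


def update(state, worked, effort, alpha=0.1, delta=0.2, theta=0.02):
    """Advance both fatigue components by one trial (illustrative parameters)."""
    if worked:
        state.recoverable += alpha * effort
        state.unrecoverable += theta * effort
    else:
        # Resting reduces only the recoverable component, never below zero.
        state.recoverable = max(0.0, state.recoverable - delta)
    return state


def net_value_of_working(reward, effort, state):
    """Reward minus an effort cost that grows with total fatigue (assumed form)."""
    return reward - effort * (1.0 + state.recoverable + state.unrecoverable)


if __name__ == "__main__":
    state = FatigueState()
    print("fresh:", net_value_of_working(2.0, 1.0, state))
    for _ in range(5):  # five bouts of work
        update(state, worked=True, effort=1.0)
    print("after work:", round(net_value_of_working(2.0, 1.0, state), 3))
    for _ in range(3):  # three short rests
        update(state, worked=False, effort=0.0)
    print("after rest:", round(net_value_of_working(2.0, 1.0, state), 3))
```

In this toy version, short rests restore most of the value of working, but not all of it. The residual gap is the unrecoverable component, echoing the two fatigue types the study reports.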

While performing the task, the participants also underwent an MRI scan, which enabled the researchers to look for activity in the brain that matched the predictions of the model.

They found that activity in areas of the brain’s frontal cortex fluctuated in line with the model’s predictions. Meanwhile, an area called the ventral striatum signalled how much fatigue was influencing people’s motivation to keep working.

“This work provides new ways of studying and understanding fatigue, its effects on the brain, and why it can change some people’s motivation more than others,” says Dr Matthew Apps, senior author of the study, based at the University of Birmingham’s Centre for Human Brain Health. “This helps begin to get to grips with something that affects many patients’ lives, as well as people at work, at school, and even elite athletes.”

Amygdala Found to Have Role in Important Pre-Attentive Mechanism in the Brain

We’re all familiar with the startle reflex – that sudden, uncontrollable jerk that occurs when we’re surprised by a noise or other unexpected stimulus. But the brain also has an important pre-attentive mechanism to tamp down that response and tune out irrelevant sounds so you can mind the task in front of you.

This pre-attentive mechanism is called sensorimotor gating and normally prevents cognitive overload. However, sensorimotor gating is commonly impaired in people with schizophrenia and other neurological and psychiatric conditions, including post-traumatic stress disorder (PTSD) and obsessive-compulsive disorder (OCD).

“Reduced sensorimotor gating is a hallmark of schizophrenia, and this is often associated with attention impairments and can predict other cognitive deficits,” explains neuroscientist Karine Fénelon, assistant professor of biology at the University of Massachusetts Amherst. “While the reversal of sensorimotor gating deficits in rodents is a gold standard for antipsychotic drug screening, the neuronal pathways and cellular mechanisms involved are still not completely understood, even under normal conditions.”

(Image caption: Mouse brain stem inhibitory neurons (green) activated by amygdala inputs (magenta neuronal processes))

To assess sensorimotor gating, neuroscientists measure prepulse inhibition (PPI) of the acoustic startle reflex. PPI is the reduction in the startle response that occurs when a weak stimulus, the prepulse, is presented shortly before the startling stimulus.
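A common convention for quantifying PPI (standard in the field, not a detail given in this article) is the percentage reduction in mean startle amplitude on prepulse-plus-pulse trials relative to pulse-alone trials. A minimal sketch, using made-up amplitudes purely for illustration:

```python
from statistics import mean


def percent_ppi(pulse_alone, prepulse_pulse):
    """Percent prepulse inhibition of the startle response.

    pulse_alone:    startle amplitudes on trials with the startle stimulus only.
    prepulse_pulse: startle amplitudes on trials where a weak prepulse
                    preceded the same startle stimulus.
    Returns 100 * (1 - mean(prepulse_pulse) / mean(pulse_alone)).
    """
    baseline = mean(pulse_alone)
    if baseline <= 0:
        raise ValueError("pulse-alone startle amplitude must be positive")
    return 100.0 * (1.0 - mean(prepulse_pulse) / baseline)


# Hypothetical amplitudes (arbitrary units), not data from the study.
print(percent_ppi([100, 120, 110], [55, 60, 50]))  # 50.0
```

Lower values indicate weaker gating: if the prepulse no longer dampens the startle, the two trial types look alike and the percentage approaches zero.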

For the first time, Fénelon and her UMass Amherst team – then-Ph.D. student Jose Cano (now a postdoctoral researcher at the University of Rochester Medical Center) and Ph.D. student Wanyun Huang – have shown how the amygdala, a brain region typically associated with fear, contributes to PPI by activating small inhibitory neurons in the mouse brain stem. This discovery, published in the journal BMC Biology, advances understanding of the systems underlying PPI and efforts to ultimately develop medical therapies for schizophrenia and other disorders by reversing pre-attentive deficits.

“Until recently, prepulse inhibition was thought to depend on midbrain neurons that release the transmitter acetylcholine,” Fénelon explains. “That was because studies of schizophrenia patients involved deficits in the cholinergic system.”

But there exists a “super cool neuroscience tool” – optogenetics – which allows scientists to use light to pinpoint and control genetically modified neurons in various experimental systems. “It is very specific,” Fénelon says. “Before this, we couldn’t pick and choose which neurons to manipulate.”

Their challenge was to use optogenetics to identify which circuits in which parts of the brain were involved in PPI. “We wanted to know what brain region connects to the core of the startle inhibition circuit in the brain stem, so we put tracers or dye to visualize those neurons,” Fénelon says. “With this approach we were able to identify amygdala neurons connected to the brain stem area in the center of the startle inhibition circuit.”

Next, they tested with optogenetic tools whether this connection between the amygdala and the brain stem was important for startle inhibition. “We know that in the brain of schizophrenia patients the function of the amygdala is also altered, so it made sense to us that this brain region was relevant to disease,” Fénelon says.

By photo-manipulating amygdala neurons in mice, they showed that the amygdala appeared to contribute to PPI by activating brain stem inhibitory, or glycinergic, neurons. Specifically, PPI was reduced by either shutting down the excitatory synapses between the amygdala and the brain stem or by silencing the brain stem inhibitory neurons themselves. “Interestingly, the PPI reduction measured as a result of these photo manipulations mimicked the PPI reduction observed in humans with schizophrenia and in mouse models of schizophrenia,” Fénelon says.

To better detail this connection, Fénelon and team used electrophysiology along with optogenetics to record the electrical activity of individual neurons taken from thin brain sections, in vitro. “This very precise yet technically challenging recording method allowed us to confirm without any doubt that amygdala excitatory inputs activate those glycinergic neurons in the brain stem,” Fénelon says.

She calls this finding “a piece of the puzzle” that pinpoints the prepulse inhibition circuit. Now she’s working in her lab using this new information to identify other brain pathways and attempt to reverse pre-attentive deficits in a mouse model of schizophrenia. Such a breakthrough would allow researchers to begin developing drugs that more precisely target pre-attentive deficits.

Gene Associated With Autism Linked to Itch Response

A pilot study from North Carolina State University has found that the absence of a gene associated with autism spectrum disorder (ASD) and pain hypersensitivity may actually decrease itch response. Atopic dermatitis and pain hypersensitivity are both conditions associated with some types of ASD.

Mutations in the gene in question, contactin-associated protein 2 (CNTNAP2), are thought to be linked to some forms of autism. The gene is expressed throughout the dorsal root ganglia (DRG), which are clusters of sensory cells located at the root of the spinal nerves. The DRG serve as the superhighway that transmits sensations of both pain and itch from the skin through the spinal cord to the brain.

“Since atopic dermatitis is often associated with ASD and CNTNAP2 is both linked to pain hypersensitivity in ASD and expressed in almost all DRG sensory neurons, we wondered whether CNTNAP2 might also contribute to itch behavior,” says Santosh Mishra, assistant professor of neuroscience at NC State and author of the study.

Mishra compared itch responses in mice with the CNTNAP2 gene to those in mice without it. With both histamine-based and non-histamine-based itch stimuli, the CNTNAP2 knockout mice (mice lacking the gene) had a reduced itch response compared to mice with the gene.

“If there is a link between ASD and atopic dermatitis, then mice without a normal CNTNAP2 gene would be expected to have an increased itch response just as they have increased sensitivity to pain,” Mishra says. “There are several possible explanations for this finding, ranging from standard physiological differences between humans and animals to CNTNAP2’s potential role in releasing neuropeptides that could affect this response.

“But we also know that pain can suppress itch sensation and vice versa. Just as some humans with ASD have higher pain sensitivity, so do mice without CNTNAP2. That pain sensitivity may be inhibiting the itch sensation.”

Mishra hopes that this pilot study will pave the way for further exploration of the role of CNTNAP2 in itch.

“The functional role of CNTNAP2 in the neural transmission of itch is unknown,” Mishra says. “While this study sheds light on the possible linkage between ASD and itch, it’s limited because it is primarily based on behavioral, not cellular or molecular, results. Future studies may be required to dissect the molecular underpinnings of CNTNAP2 and itch sensation.”

Remember more by taking breaks

We remember things longer if we take breaks during learning, a phenomenon known as the spacing effect. Scientists at the Max Planck Institute of Neurobiology have gained deeper insight into the neuronal basis of this phenomenon in mice. With longer intervals between learning repetitions, mice reuse more of the same neurons as before instead of activating different ones. Possibly, this allows the neuronal connections to strengthen with each learning event, so that knowledge is stored for a longer time.

Many of us have experienced the following: the day before an exam, we try to cram a huge amount of information into our brain. But just as quickly as we acquired it, the knowledge we have painstakingly gained is gone again. The good news is that we can counteract this forgetting. With longer intervals between individual learning events, we retain the knowledge for a longer time.

But what happens in the brain during the spacing effect, and why is taking breaks so beneficial for our memory? It is generally thought that during learning, neurons are activated and form new connections. In this way, the learned knowledge is stored and can be retrieved by reactivating the same set of neurons. However, we still know very little about how pauses positively influence this process – even though the spacing effect was described more than a century ago and occurs in almost all animals.

Learning in a maze

Annet Glas and Pieter Goltstein, neurobiologists in the team of Mark Hübener and Tobias Bonhoeffer, investigated this phenomenon in mice. To do this, the animals had to remember the position of a piece of chocolate hidden in a maze. In three consecutive learning phases, separated by pauses of varying length, the mice were allowed to explore the maze and find their reward. “Mice that were trained with the longer intervals between learning phases were not able to remember the position of the chocolate as quickly,” explains Annet Glas. “But on the next day, the longer the pauses, the better was the mice’s memory.”

During the maze test, the researchers also measured the activity of neurons in the prefrontal cortex. This brain region is of particular interest for learning processes, as it is known for its role in complex thinking tasks. Consistent with this, the scientists showed that inactivating the prefrontal cortex impaired the mice’s performance in the maze.

“If three learning phases follow each other very quickly, we intuitively expected the same neurons to be activated,” Pieter Goltstein says. “After all, it is the same experiment with the same information. However, after a long break, it would be conceivable that the brain interprets the following learning phase as a new event and processes it with different neurons.” Yet the researchers found exactly the opposite when they compared the neuronal activity during different learning phases. After short pauses, the activation pattern in the brain fluctuated more than after long pauses: in rapidly successive learning phases, the mice activated mostly different neurons. When taking longer breaks, the same neurons active during the first learning phase were used again later.
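The comparison above can be illustrated with a toy overlap measure. The article does not say how the team quantified neuron reuse, so this sketch assumes a hypothetical simplification: each learning phase is summarized as the set of neuron IDs classed as active, and reuse is the fraction of first-phase neurons that are active again in a later phase.

```python
def reuse_fraction(first_phase, later_phase):
    """Fraction of neurons active in the first phase that are active again later.

    Hypothetical measure for illustration; not the study's analysis method.
    """
    first = set(first_phase)
    if not first:
        return 0.0
    return len(first & set(later_phase)) / len(first)


# Hypothetical neuron IDs, for illustration only.
phase1 = {1, 2, 3, 4, 5}
after_short_pause = {4, 5, 6, 7, 8}  # mostly different neurons
after_long_pause = {1, 2, 3, 4, 9}   # mostly the same neurons
print(reuse_fraction(phase1, after_short_pause))  # 0.4
print(reuse_fraction(phase1, after_long_pause))   # 0.8
```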

Memory benefits from longer breaks

Reactivating the same neurons could allow the brain to strengthen the connections between these cells in each learning phase – there is no need to start from scratch and establish the contacts first. “That’s why we believe that memory benefits from longer breaks,” says Pieter Goltstein.

Thus, after more than a century, the study provides the first insights into the neuronal processes that explain the positive effect of learning breaks. With spaced learning, we may reach our goal more slowly, but we benefit from our knowledge for much longer. Hopefully, we won’t have forgotten this by the time we take our next exam!

How to make up your mind when the glass seems half empty?

Is a new high-income job offer worth accepting if it means commuting an extra hour to work? People often have to make tough choices about whether to endure some level of discomfort to take advantage of an opportunity or instead walk away from the reward. It turns out that, in making such choices, the brain weighs our desire to go for the reward against our desire to avoid the related hardship.

Previous research has shown that negative mental states can tip this balance between payoff and hardship toward more ‘pessimistic’ decision-making and avoidance. For example, scientists know that people experiencing anxiety have a stronger-than-normal desire to avoid negative consequences, and people with depression have a weaker desire to approach the reward in the first place. But much remains unknown about how the brain incorporates feelings into decision-making.
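The balance described above can be sketched with a simple, hypothetical value model: accept an offer if the reward times an approach weight exceeds the punishment times an avoidance weight. Raising the avoidance weight (in the spirit of the anxiety findings) or lowering the approach weight (in the spirit of the depression findings) both shrink the share of offers worth accepting. The linear form and the weights are illustrative assumptions, not a model from the review.

```python
def accepts(reward, punishment, approach_w=1.0, avoid_w=1.0):
    """Hypothetical linear approach-avoidance rule: accept if net value > 0."""
    return approach_w * reward - avoid_w * punishment > 0


# Every combination of reward and punishment size from 1 to 5 (25 offers).
offers = [(r, p) for r in range(1, 6) for p in range(1, 6)]


def acceptance_rate(approach_w=1.0, avoid_w=1.0):
    """Share of the 25 offers that the rule accepts."""
    accepted = sum(accepts(r, p, approach_w, avoid_w) for r, p in offers)
    return accepted / len(offers)


print(acceptance_rate())                # baseline: 0.4
print(acceptance_rate(avoid_w=1.5))     # stronger avoidance: 0.28
print(acceptance_rate(approach_w=0.5))  # weaker approach: 0.16
```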

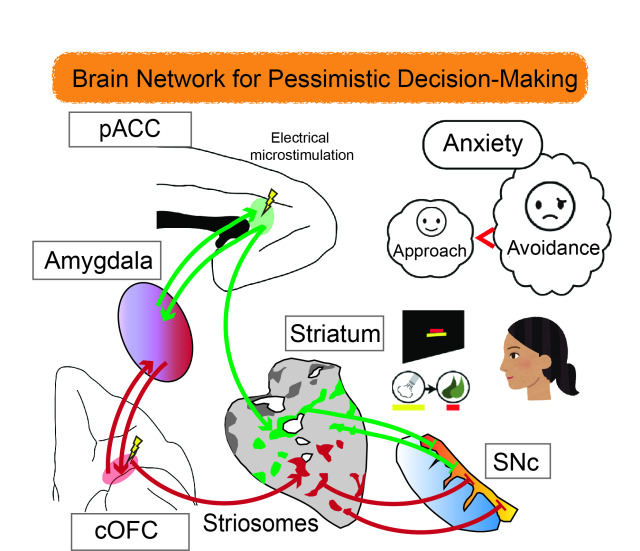

Neuroscientists at Kyoto University’s Institute for Advanced Study of Human Biology (WPI-ASHBi) have connected some of the dots to reveal the brain networks that give anxiety influence over decisions. Writing in the journal Frontiers in Neuroscience, the group has published a review that synthesizes results from years of brain measurements in rats and primates and relates these findings to the human brain.

“We are facing a new epidemic of anxiety, and it is important that we understand how our anxiety influences our decision making,” says Ken-ichi Amemori, associate professor in neuroscience at Kyoto University, ASHBi. “There is a real need for a better understanding of what is happening in the brain here. It is very difficult for us to see exactly where and how anxiety manifests in humans, but studies in primate brains have pointed to neurons in the ACC [anterior cingulate cortex] as being important in these decision-making processes.”

If we think of the brain as an onion, the ACC lies in a middle layer, wrapping around the tough ‘heart’, or corpus callosum, which joins the two hemispheres. The ACC is also well connected with many other parts of the brain that control higher and lower functions, and it plays a role in integrating feelings with rational thinking.

The team started by measuring brain activity in rhesus macaques while the monkeys performed a task in which they chose to accept or reject a food reward paired with different levels of ‘punishment’, an annoying blast of air to the face. The options were shown on a screen, and the monkeys made their selection with a joystick, revealing how much discomfort they were willing to accept.

When the team probed the ACC of the monkeys, they identified groups of neurons that activated or deactivated in line with the sizes of the reward or punishment on offer. The neurons associated with avoidance and pessimistic decision-making were particularly concentrated in a part of the ACC called the pregenual ACC (pACC). This region has been previously linked to major depressive disorder and generalized anxiety disorder in humans.

Microstimulation of the pACC with a low-level electrical pulse caused the monkeys to avoid the reward, simulating the effects of anxiety. Remarkably, this artificially induced pessimism could be reversed by treatment with the antianxiety drug diazepam.

With knowledge of the pACC’s involvement in anxiety-related decision-making, the team next searched for its connections to other parts of the brain. They injected viruses into specific sites; the viruses instructed nerve cells to start making fluorescent proteins that light up under the microscope. The virus then spread to connected nerve cells, revealing the pathways that link other areas of the brain to this center of ‘pessimistic’ thought.