#portmanteaux

Also the “snake X, or snX” and “snX (snail X)” memes are an interesting example of a blending process becoming a fully productive morphological process- people don’t form a new blend of snake/snail with whatever the second word is every time, they’ve simplified it to “replace the first onset with /sn/” and it can now be applied freely!

Similar things have happened in the past- consider the “-gate” morpheme, from Watergate, that creates words for scandals. (E.g.- god help us- “Gamergate.”) “-gate” is a totally normal suffix now, even though it began life as a pattern of blending Watergate with other words.

The interesting thing about the /sn/ memes is that this process is subtractive- it usually deletes material from the original word, and replaces it with the /sn/ morpheme. Subtractive morphology is cool!

blends is the same

I gathered some experimental data asking people to form novel blends, and am resuming work analyzing that data. Here’s a Google Drive folder with the basic questionnaire I used, the five questionnaires I collected data on, and a spreadsheet of the data cleaned up. Feel free to examine if interested!

I have misguided all of you. The number of blends you can produce using the method I described in the last post is not N!; that’s the number of blends you can produce if you use every object in the set. The real number is much, much larger, and looks like this:

The number of ways you can take k objects from a set with N objects in it and order those combinations is equal to N!/(N-k)!, or the expression inside the big parentheses above. The weird shit on the left just says take that expression for every value of k from 1 to N and add the results all together. This is A Big Number. According to this helpful website, that number for 7 (like in mint plus sage) is 13,699. Compare 7!’s value of 5,040- more than double. 13! was equal to 6,227,020,800; the value of this function for N = 13 is 16,927,797,485. If I thought this method was a terrible way to make blends because it produced too many before, it’s much worse in reality.

Another of the of the ways in which ‘blends as permutations’ (well, k-permutations) model sucks is that there’s no guarantee that any given 'possible blend’ will contain material from both words. From mintand sage, both mintand sage are considered possible blends in this model! It’s a pretty solid generalization about blends that they contain elements from both words. How do we capture this in our model?

Well, let’s say that, instead of one bag, you have two- one for each word- and every time you have to draw a letter, you flip a coin. Heads means you draw from word A, tails means you draw from word B. You can only stop once you’ve drawn at least one sound from both bags (although you need not stop then), and for convenience’s sake let’s just say that once you’ve emptied one bag you stop flipping the coin and just draw from the other one. (An unintended consequence of introducing the flipping coin is that most of the time, the blends produced this way will contain roughly equal amounts of sounds from words A and B, but let’s ignore this for a second and just say that all possible blends are equally likely.)

How many blends can you produce in this way? Given what we learned above, this is pretty simple.

Scrapping the requirement that sounds from both words must end up in the blend, what about what sounds begin a blend? Seems like most blends start with a sound that starts one of the donor words, so let’s constrain the output to blends starting with a1 or b1. How many blends can we make in this way?

Well, let’s start with the set {1, 2, 3, 4, 5, 6, 7}, and say that the k-permutations all have to start with 7. There’s one permutation for k = 1 that fits this criterion- 7. After that, it’s 7 followed by every k-permutation of the set containing all the same members, except 7, as the set before- that is, every k-permutation of the set {1, 2, 3, 4, 5, 6}. So 1, for 7, plus every k-permutation of a set with 7-1 members.

So, if two members, a1 and b1, of a set of size N can start the k-permutations, the number is just double the above.

Let’s say you want to combine this requirement with the one above that both words must contribute to the blend!

This is getting ugly fast.

So here’s the golden question: Why am I counting all of these possible blends? Who on Earth cares how many of them exist? Here’s where I lay out my evil research plan:

I’m going to ask research subjects to form blends from various pairs of words, then compare the set of blends they collectively produce to the set of all possible blends under each of these models. And if models with certain constraints on them overgenerate less than the worst imaginable case to a statistically significant degree, we will have rigorously shown that those constraints meaningfully capture something about blend-formation in English.

Yeah, beat that!

So what, exactly, is a blend? How do you make one? What kinds of blends are permissible? And… drumroll please… how can we categorize this in a mathematical way?

[cymbal clash]

Okay, maybe that question doesn’t excite anyone else but me. If you’re not super jazzed about that as a problem to be solved, well, sucks for both of us, I’m thinking it’s going to be pretty important to my research. More importantly in the short term, this blog post might be even more unreadable than the ones about academic sources. But if that question does tickle your brain cells, even a little bit, do read on- I’ll try to keep it fun.

One hypothetical way to make a blend of two words, let’s say mintand sage, is to write all the sounds in both words on little pieces of paper, put the pieces of paper in a hat, and pull as many as you like (or until you run out). There are very few rules to this game, and you can probably figure them out yourself, but I’ll say them just to be complete.

- You wrote each word (in a phonemic transcription) in big symbols on a piece of paper and tore each sound out individually. You didn’t put any other sounds in the hat. That means that, because mintand sage don’t repeat any sounds, you can only use each sound once. (If you wanted to blend lettuceand lemon, you could use /l/, /ɛ/, and /ə/ twice each, since there are two of each between lettuceand lemon: /‘lɛ.təs/, /'lɛ.mən/.)

- More subtly, you didn’t tear any of the sounds in two- you’re stuck with how you chopped up the words when you started writing. You may be thinking, 'Why would that matter? What would you even do with half of an /m/?’ You’re right, that wouldn’t make much sense, but consider sage /sedʒ/: that last sound, the J-sound, is sort of one sound and sort of two. Phonetically, that is in physical reality, it’s a [d] followed very quickly by a [ʒ] (the middle sound in vision). The International Phonetic Alphabet reflects this phonetic reality by telling us to write [dʒ] with two letters, even though a ligature- a combination of the two letters- exists, and looks like this: <ʤ> But most linguists believe that, in the mind and in the language, the [d] and the [ʒ] together form a unit /dʒ/ that can’t be split apart. If you agree with them, you can’t cheat and tear the /dʒ/ piece in two to make words with /d/ and /ʒ/- neither of which are in either mintor sage on their own.

- You’re making a word with these sounds, so you have to remember what order you pulled them out in. If you pulled /m/, /e/, and /s/ but didn’t remember what order they came in, you haven’t really made a word. That may seem obvious, but it matters for the math.

If you have a collection of objects and you’re taking some to all of them and putting them in an order, that’s called a permutation. Permutations, among other things, are studied in the field of math known as combinatorics. For a set of N (whatever non-negative integer, or whole number, you want) objects, you can make N! permutations of those objects. N! is read Nfactorial, which means N times N-1 times N-2 times… and so on, until you get to 'times 3 times 2 times 1’.

The nice thing about this way of making blends is that there probably aren’t any or many blends that can’t be made this way, with enough time and patience. That is, this method or model (probably) doesn't undergenerate: there are no real-life examples that the model can’t account for.

The problem is, this method sucks. N! is a really big number- find a calculator that can graph and calculate factorials and use it to graph Y = X!. That’s a steep upwards curve if I’ve ever seen one. For example, between mintand sage there are seven sounds. 7! is 5,040. What if you wanted to blend, say, skeletonwith titties? That’s thirteen sounds, and 13! is 6,227,020,800- over six billion 'possible’ blends in this model.

Why is this a problem? I don’t know about you all, but when I sit down to make a blend- like you do- I usually come up with maybe three, five max, blends that seem reasonable, and usually I settle on one that really works. And when I mentioned the bone titties meme on an imageboard, the person who responded to me took no time at all to coin the word skeletittiesfrom skeletonand titties- not the response time of someone who’s sifting painstakingly through six billion options trying to find the best one.

So, while treating blends as permutations of the handful of sounds that make up the words they’re made of doesn't undergenerate, it does overgenerate wildly- it labels 'possible’ blends that wouldn’t actually be made in the real world. It’s like a floodlight, it’ll illuminate the target… and everything around it, too. We want a spotlight that will only light up the star onstage- the real-world blends. How can we manage that?

There’s one simple way to narrow the beam that’s probably a good idea anyway. Consider: nstm /nstm/ is a possible blend in the permutation model, but it’s not a possible word in the English language. If someone tried to tell you about this new word nstm, your first response would probably be 'Gesundheit.' nstm violates the phonotactics of English, the rules about what sounds can be next to what other sounds and, as a result, what novel combinations of sounds can qualify as English words. For instance, words in English always contain some kind of vowel sound*, which nstm conspicuously lacks. So what if we just crossed off the list of permutations any 'blend’ that couldn’t be a word in the English language?

This is a start, but there are plenty of permutations that could be words but are really terrible blends. For mintand sage,mastin /'me.stɪn/ comes to mind. It’s definitely a possible word, but I don’t think I would produce that if I was trying to blend those words. So there must be some greater regularity to blends that our model just doesn’t capture. What might some of those regularities be? We’ll find out next time.

*This is both true and not-true, and I can hear some of you wincing right now as I’m typing it. On the phonetic level, it’s false: some syllables in English, instead of being built around a vowel, are built around a nasal, /ɹ/ (English’s R-sound), or /l/. See, for instance, button['bʌ.ʔn̩],rhythm [ˈɹɪðm̩], batter ['bæ.ɾɹ̩] (or ['bæ.ɾɚ]), and bottle ['bɑ.ɾɫ̩]. The undertick marks these syllabic consonants that, despite not being vowels, take the nucleus or core position usually associated with vowels. However, this can only occur in unstressed syllables (where vowel reduction, in which vowels are produced with less energy and often less distinctly, also occurs) and a pronunciation with [ə] in front of the consonant in question is always possible in addition to the syllabic consonant pronunciation. This leads most linguists to say that underlyingly, that is on the phonemic level, the words have a schwa in the nucleus that gets reduced further to zero, moving the /m/, /n/, /ɹ/, or /l/ into the nucleus. So phonologically, in English anyway, there really is always a vowel in there somewhere.

Hello! It’s been more than a month, I bet some of you forgot that you followed this blog. If all goes well- cross your fingers for me, everybody- you should see a relative flurry of activity on this blog in the near future as I catch up with my backblog. (As you can tell, I chose the subject of blends for solely dry, academic reasons. … ‘Backblog.’ Hee hee.)

Today’s source is only the second I read for my independent study, whose midterm is fast approaching. Oops. It’s Louise Pound’s 1914 work, “Blends: Their relation to English word formation.” Like the Bergström source I looked at earlier, this one is out of copyright and is available for free and legal download here.

Pound’s work doesn’t say very much that Bergström didn’t, but she says it a lot better, and leaves a lot of silly things out. Even more excitingly for my purposes, Pound’s work is entirely about morphological blends, rather than being dedicated mostly to syntactic blends. If you’re only going to read one of these two works, read Pound.

The biggest new thing in Pound is that Pound introduces a source taxonomy- a taxonomy based on who made the blend and for what purpose. Pound divides blends into the following categories:

- “Clever Literary Coinages” (e.g., galumphing, fidgitated)

- “Political Terms,” also including anything the press invents (e.g., gerrymander, Prohiblican, Popocrat)

- “Nonce Blends” (e.g., sweedlefrom swindle+ wheedle), which is how Pound categorizes error blends, although she notes that error blends that prove popular may persist in the context in which they were accidentally coined. Apparently this label only applies to erroneous adults, because the next heading is

- “Children’s Coinages” (e.g., snuddlefrom snuggle+ cuddle) which she notes as also largely accidental. (Why the split?)

- “Conscious Folk Coinages” (e.g., solemncholy,sweatspiration) and

- “Unconscious Folk Coinages” (e.g., diptherobiafrom diptheria+ hydrophobia, insinuendo), which are distinct from the conscious sort and from normal errors in that they are believed to be 'real’ words and have persisted in a wider context

- “Coined Place Names; also Coined Personal Names” (e.g., Texarkana, Maybeth)

- “Scientific Names” (e.g., chloroform, formaldehyde)

- “Names for Articles of Merchandise” (e.g., Locomobile, electrolierfrom electro- and chandelier)

She notes that some of these aren’t mutually exclusive, and suggests that the source of a blend may affect its success; however, it’s not clear which she believes would be more successful. To me, this sort of taxonomy doesn’t seem very useful. I’m more interested in a taxonomy that references more linguistic characteristics of the blends and source words, but Pound essentially throws her hands up in defeat at taxonomizing blends in this way. Blends are too varied, she argues, for a “definite grouping [to seem] advisable.]”

She does mention that, as a hard rule, the primary stress in a blend is on one of the primarily stressed syllables from the source words, and she mentions haplology, which she describes as the deletion of one of two (or possibly more) similar syllables in sequence. A blend she describes as haplologic is swellegant, from swell+ elegant. The sequence /ɛl/, pronounced like the letter L, that would be repeated if you just said swell-elegant, is preserved only once. I wouldn’t necessarily describe the /ɛl/ in elegant as a syllable- I’d say that the /l/ starts the next syllable. In fact, many of the haplologic blends she describes delete similar sequences, and not properly syllables. Does this matter? You’re free to discuss among yourselves.

Pound, like Bergström, brings up onomatopoetic blends, and doesn’t conclusively place them in or out of the category of blends. I tend to say 'out’. Onomatopoetic 'blends’ generally have as their sources one of two classes of words that have sounds and maybe a general meaning in common and combines those sounds together; for instance, chump (with the original meaning 'a short segment of wood’) is suggested to have arisen from chop, chunk, or chuband lump (but equally plausible, and having similar meanings, are bumpand hump).

With no definite single-word source for either element, it seems more likely that a word like this arose from non-word, non-morpheme (it’s not like you can add -ump onto anything and make words referring to small, smooth raised areas on a surface) associations people have with certain sounds or sequences, so that words made 'out of the blue’ have suggestive forms. This, or ideas like it, is called sound symbolism- the apparent meaning individual sounds sometimes appear to have when the sounds themselves don’t behave like morphemes.

That’s about it for Pound (1914)- next up is MacKay (1972, 1973), a jump of nearly sixty years!

I hope you were not misled by my last posting; most of this blog is going to be me explicating and responding to scholarship on blends, and not fun examples of blends in pop culture, although I’ll try to post any of those I find! I do hope, however, to keep these posts as digestible and accessible as possible.

Today’s post concerns the chronologically earliest source I found in my initial research, “On blendings of synonymous or cognate expressions in English,” a 1906 dissertation by one Gustav Adolf Bergström, which is out of copyright- you can download it freely and legally here. (By the way, you will find a full citation of any academic source I mention here on the References page, which can be found on the sidebar of my blog.)

This text has a couple of issues. One, it’s one of the worst-organized pieces of scholarship I’ve ever read- sorry, Gustav. (In his defense, he says in his preface that his work got derailed by illness, which I totally get.) Two, Gustav considers the primary focus of his work to be what he calls ‘syntactic blending,’ where a speaker appears to have started saying one sentence or phrase and then meandered into another, and while he considers lexical blends to be related it’s a secondary focus of the work.

I don’t like this idea that 'syntactic blends’ are related to morphological blends at all! Current theories of linguistics that I’m familiar with say that there is a two-way split, roughly, in the representation of language in the mind. On the one hand there’s the lexicon, which is like a mental dictionary that stores everything you know about all the words you know- their form(s), like the fact that to be has the present-tense indicative forms am,is, and are, their syntactic category, such as verb, noun, adjective, etc., and their meaning, although it’s controversial exactly how much information about a word’s meaning is stored in the lexicon. On the other hand there’s the syntactic component, which strings words together according to certain rules. (You can go into finer detail about how the syntactic component is divided, but I won’t here.)

The point being, words are stored in the lexicon, but phrases and sentences are built in the syntactic component from words in the lexicon, and not 'stored’ in the same sense as words are stored (with the exception of idioms, phrases or sentences whose meanings are memorized rather than being possible to figure out just from the words they’re made up of- for instance, letting the cat out of the bag means 'telling a secret,’ which isn’t obvious at first glance and which language learners have to memorize). So words and phrases/sentences are very different things, structurally- one memorized, one constructed on the fly.

Bergström’s theory about how error blends, blends formed on accident as a speech error, are formed is that the speaker considers two related words at once for a given thought and tries to articulate both- or, more precisely, switches over at some point from saying one word to trying to say the other. As far as psycholinguistic theories from before the cognitive revolution go, this isn’t bad! It’s hard to say, though, that the same process applies to 'syntactic blendings’ when our theories say that phrases aren’t stored in the brain in the same way that words are- even if people do consider two different phrases at once and accidentally switch between them, they’re constructing them in the moment instead of simply remembering them.

Since I’m studying morphological blends specifically, and I don’t agree that 'syntactic blendings’ are another instance of the same phenomenon, I didn’t make use of the parts of the text that were about these 'syntactic blendings’.

Despite his focus not being on morphological blends specifically, he does have some interesting insights to offer. He suggests that blends are asymmetric, that is, that the two words that contribute to a blend may contribute to it in different ways. While he doesn’t go into much detail about how their roles may differ, this basic insight will, as we’ll see later in the semester, be borne out by more modern research. He notes, as other researchers have, that blends occur in many (perhaps all) languages- that is, they are cross-linguistic. And he theorizes that error blends result from pairs of words that are related in meaning through synonymy, similarity or equivalence of meaning, or antonymy, opposition of meaning. Intentional blends, which are what they sound like- blends made on purpose- he suggests are in imitation of naturally-occurring error blends, and don’t have to be synonyms or antonyms like error blends do. He specifically mentions that two words that are frequently right next to each other may be blended intentionally, such as GritainfromGreat+Britain.

He notes that some blends seem 'better’ than others, a concept for which I often use the term felicity- literally meaning 'happiness’ or the quality of being pleasing. This is a difficult-to-define concept, but basically it means how 'successful’ a linguistic form is in some measurable way- how easy it is to understand, how likely it is to be adopted into the mainstream, or simply how much speakers prefer it to other, similar forms. I did a pilot study a while back on what factors make unfamiliar blends more easily intelligible to speakers, which I might make the subject of another post.

The qualities that Bergström suggests may influence the 'success’ of a blend include usefulness (does the new form encapsulate some common concept that is otherwise complicated to express?), whether the blend fits the 'character’ of the language (which I’d translate into, does it sound like other commonly-used words?), and the frequency of the parent words separately and together.

It is because of lack of this 'success’ in making it into the mainstream that blends are such a comparatively rare source for new words in the general knowledge; he suggests that blends are invented all the time, but rarely make it out of the small circle in which they were innovated, so that they die out almost as fast as they’re invented.

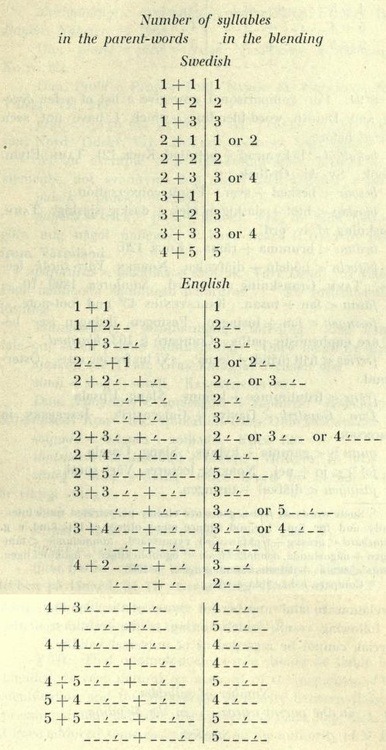

Bergström also notes some commonalities in form among blends- that they preserve stress on one of the stressed vowels in the parent words. For instance, in the blend Brangelina, it's Brangelina, and not Brangelinaor Brangelina; it takes its stress from the source word Angelina. He further notes that both beginnings of words are rarely preserved, but does not note that, to my knowledge, it is never the case that neither beginning is kept. Finally, he makes a chart that I’d be better off inserting as an image than trying to describe:

Fig. 1: Bergström takes the blends he has available and compares the syllable number and stress position of the parent words to the syllable number and stress position of the blend. In the English chart, each underscore represents a syllable and the tick mark represents the syllable that takes primary stress.

Finally, Bergström divides blends up in several ways- that is, he forms a taxonomy, or a system that divides things (here, blends) into groups based on characteristics they all share. The first major taxonomy he introduces is three-way: 'subtractive compromises,’ where both parent words lose material in the blend, like brunch; 'partial subtraction compromises,’ where one word is preserved but the other loses material, like slithy; and 'addition compromises’, where neither parent word loses material. For the last category, he uses the example forbecause, which just sounds to me like a compound; for my purposes, I’d say this would apply to a word like slanguage.

He additionally divides blends into one category that switches from one word to the other only once- like brunch, where br- is from breakfast and then it switches to -unchfromlunch- and another category that switches between the words more than once- like slithy, with the sl- from slimy, -lith- from lithe, and the -yfromslimy once more.

The last division Bergström makes is between error blends and intentional blends, which we’ve talked about already, and onomatopoeia blends. Onomatopoeia are 'sound-imitative’ words like bangandclick, and frequently words of this type that mean similar things have similar sounds in them. Here Bergström classifies some of these as blends, as will the next source I examine- Pound (1914)- but I incline towards a different analysis which I will expand on after writing about that source.

Whew! That’s a lot of words! Buckle your seatbelts, kiddoes, because posts like this are gonna make up the meat of this blog.

Lemme Smang It- Yung Humma ft. Flynt Flossy

Let’s start the blog with something fun! Don’t listen to this song at work- Rated R for Frank Sexuality and Bemused Backup Dancer. Anyway, the reason I’m posting this on my serious-business morphological blends research blog is right in the title, the nonce word smang. As Yung Humma states in the chorus, smang is a blend of smashand bang- in the sexual sense, if that wasn’t clear enough. The blend is used, here as elsewhere, for humorous wordplay purposes.

And smang actually brings up an important theoretical problem. Because words are a spoken phenomenon before they’re a written one, we don’t want to analyze words in terms of letters, but in terms of the units of sound that make them up- phonemes. A phoneme is, in general terms, the smallest segment of sound that can distinguish two words. While English uses 26 letters in writing, it has somewhere in the neighborhood of forty phonemes, the precise number depending on your dialect. To represent these speech sounds, we usually use the International Phonetic Alphabet, and we usually put phonemic transcriptions between slashes /like this/.

So that’s simple enough, right? But it turns out that figuring out what phonemes make up a word isn’t always obvious, and different dialects along with theoretical differences of opinion can lead to very different transcriptions for one and the same word. Smash is pretty easy- /smæʃ/. /s/ and /m/ mean what you think they do, /æ/ is the vowel in cat, and /ʃ/ is the sh sound. But when it comes to the vowel of bang things get complicated. Historically, it would have been the same vowel as in smashand cat- /æ/. However, in many dialects (including mine), that vowel has shifted to something more like the vowel in say when it comes in front of /ŋ/, the ng sound. We can represent that vowel with [e]. (Brackets go around transcriptions of the sounds themselves, rather than just the phonemic identity, of speech.) So is it /bæŋ/ or /beŋ/? Certainly it sounds more like the latter, but psychologically it could be the former!

So why does this matter? Well, what if you were trying to count how many phonemes from smash made its way into smang? Does the vowel ‘count’ as part of both smashand bang, or does it only count as the vowel from bang? I don’t have an answer right now, which is okay! If I need to find out the answer I’ll just have to do an experiment or gather more data to come to a more conclusive analysis.

Or should I say, introbligatory? Obligatroduction? Perhaps I shouldn’t.

Hello all! My name is Matthew Aston Seaver, and I am a senior linguistics student at the University of Mary Washington. This semester I’m undertaking an independent study on morphological blends.

What’s a morphological blend?

Well, it can also be called a portmanteau, a blend-word, or just a blend- but fundamentally, a blend is a word like brunch- from breakfastand lunch- that is formed by fusing together two other words. What’s interesting about blends is that they are not a simple case of compoundingoraffixation. (An example of compounding is icehouse, from iceand house; an example of affixation is affixation, from affix, -ate, and -ion.) Both of those processes act on morphemes, the smallest units in language that can carry their own meanings. Morphemes can be bound, like affixes, and be unable to stand on their own, or they can be free, like independent words.

The thing to notice here is that neither *br- nor *-unch is a meaningful unit on its own. (In this context, the asterixes mean something like unattested, ungrammatical, or just plain wrong.) Because the units aren’t morphemes, traditional approaches to morphology- here, the inner structure of words- have trouble analyzing blends. Breakfast is meaningful, and so is lunch, but some of the material from both words is missing; what happened to ‘eakfast’ and 'l’?

For that matter, what about a word like slanguage (Irwin by way of Wood by way of Pound 1914), where slangand language both make it into the word intact? Or Lewis Carrol’s coinage slithyfrom slimyand lithe, where the words don’t even have the decency to occur linearly? Slimy wraps right around lithe- is that even allowed?

For my purposes- and this definition is subject to revision!- a blend is formed in such a way that there are at least two parent words, and in the output, there is no place where the end of one word is followed by the beginning of the other. You’ll notice in slanguage that the end of slang occurs well after the beginning of language, and that in slithy, lithe starts and ends entirely within the bounds of slimy.

Well, now that we’ve got that all cleared up, what’s left for me to talk about for this whole semester? I have a sneaking suspicion that there’s gonna be a whole lot.

~Follow for more soft morphology~