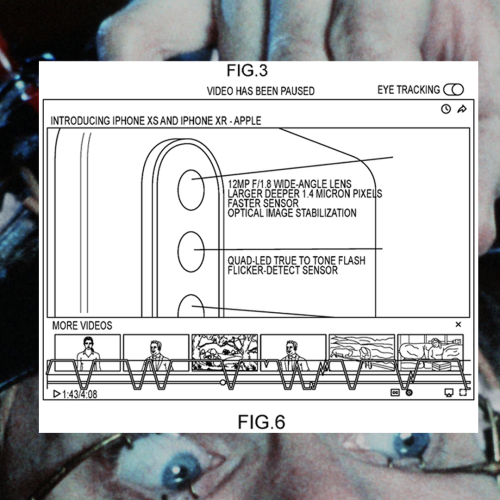

#eye tracking

Eye tracking in the HTC Vive Pro

Short video from Cubic Motion presents a debug-view of eye-tracking from within a VR headset. Why is eye-tracking important? There are several useful possibilities - graphics are optimized through the lenses based on where you look, you can make selections in menus, and your avatar can present your gaze to other players:

First attempt at a simple tracker from a Pupil Labs camera inside the HTC Vive Pro. Watch this space for further density, coverage, and precision over the coming weeks. Model was trained on a desktop CPU in a few minutes. No GPU in either training or runtime. No neural networks in fact.

IMAGINATIVE

Telepresence art installation by H.O features two rooms, one with a display with eye-tracking interface to control a ceramic cup, the other a chroma-key-suited robotic arm to carry out instructions from the former room:

What if our thoughts materialized as tangible actions in remote locations?

IMAGINATIVE is an installation work consisting of two rooms.

In the first, there is a monitor displaying a table with a cup in an empty room. Using a specialized (eye tracking / brain computer) interface, participants can telekinetically control the cup in the remote space. The shifting of the cup back and forth, poised in the air, expresses someone’s imagination in real-time.

In the second room, the audience will encounter an extraordinary spectacle. Sitting on the table is a green robot arm enabling the cup to move, while the floor surrounding the table is covered in broken cups.

Given that brain computer interfaces directly connect our “consciousness” with the external world, what does “imagination” mean? What is the “imagination” that we present to the world?

An interesting question found its way into our inbox recently, asking about relative clauses in Swedish, and wondering whether their unique characteristics might pose a problem for some of the linguistic theories we’ve talked about on our channel. So if you want a discussion of syntax, Swedish, and subjacency (with some eye-tracking thrown in), this is for you!

So yes, there is a hypothesis that Swedish relative clauses break one of the basic principles by which language is thought to work. In particular, it’s been claimed that one of the governing principles of language is Subjacency, which basically says that when words move around in a sentence, like when a statement gets turned into a question, those words can’t move around without limit. Instead, they have to hop around in small skips and jumps to get to their destination. To make this more concrete, consider the sentence in (1).

(1) Where did Nick think Carol was from?

The idea goes that a sentence like this isn’t formed by moving the word “where” directly from the end to the beginning, as in (2). Instead, we suppose that it happens in steps, by moving it to the beginning of the embedded clause first, and then moving it all the way to the front of the sentence as a whole, shown in (3).

(2a) Did Nick think Carol was from where?

(2b) Where did Nick think Carol was from _?

(3a) Did Nick think Carol was from where?

(3b) Did Nick think where Carol was from _?

(3c) Where did Nick think _ Carol was from _?

One of the advantages of supposing that this is how questions are formed is that it’s easy to explain why some questions just don’t work. The question in (4) sounds pretty weird — so weird that it’s hard to know what it’s even supposed to mean. (The asterisk marks it as unacceptable.)

(4) *Where did Nick ask who was from _?

The explanation behind this is that the intermediate step that “where” normally would have made on its way to the front is rendered impossible because the “who” in the middle gets in its way. It’s sitting in exactly the spot inside the structure of the sentence that “where” would have used to make its pit stop.

More generally, Subjacency is used as an explanation for ‘islands,’ which are the parts of sentences where words like “where” and “when” often seem to get stranded. And one of the most robust kinds of island found across the world’s languages is the relative clause, which is why we can’t ever turn (5) into (6).

(5) Nick is friends with a hero who lives on another planet

(6) *Where is Nick friends with a hero who lives _?

Surprisingly, Swedish — alongside other mainland Scandinavian languages like Norwegian — seems to break this rule into pieces. The sentence in (7) doesn’t have a direct translation into English that sounds very natural.

(7a) Såna blommor såg jag en man som sålde på torget

(7b) Those kinds of flowers saw I a man that sold in square-the (gloss)

(7c) *Those kinds of flowers, I saw a man that sold in the square

So does that mean we have to toss all our progress out the window, and start from scratch? Well, let’s not be too hasty. For one, it’s worth noting that even the English version of the sentence can be ‘rescued’ using what’s called a resumptive pronoun, filling the gap left behind by the fronted noun phrase “those kinds of flowers.”

(8) Those kinds of flowers, I saw a man that sold them in the square

For many speakers, the sentence in (8) actually sounds pretty good, as long as the pronoun “them” is available to plug the leak, so to speak. At the very least, these kinds of sentences do find their way into conversational speech a whole lot. So, whether a supposedly inviolable rule gets broken or not isn’t as black-and-white as it might appear. What’s maybe a more compelling line of thinking is that what look like violations of these rules on the surface can turn out not to be, once we dig a little deeper. For instance, the sentence in (9), found in Quebec French, might seem surprising. It looks like there’s a missing piece after “exploser” (“blow up”), inside of a relative clause, that corresponds directly to “l'édifice” (“the building”) — so, right where a gap shouldn’t be possible.

(9a) V'là l'édifice qu'y a un gars qui a fait exploser _

(9b) *This is the building that there is a man who blew up

But that embedded clause has some very strange properties that have given linguists reasons to think it’s something more exotic. For one, the sentence in (9) above only functions with what’s known as a stage-level predicate — so, a verb that describes an action that takes place over a relatively short period of time, like an explosion. This is in contrast to an individual-level predicate, which can apply over someone’s whole lifetime. When we replace one kind of predicate with another, what comes out as garbage in English now sounds equally terrible in French.

(10a) *V’là l'édifice qu'y a un employé qui connaît _

(10b) *This is the building that there is an employee who knows

Interestingly, stage-level predicates seem to fundamentally change the underlying structures of these sentences, so that other apparently inviolable rules completely break down. For instance, with a stage-level predicate, we can now fit a proper name in there, which is something that English (and many other languages) simply forbid.

(11a) Y a Jean qui est venu

(11b) *There is John who came (cannot say out-of-the-blue to mean “John came”)

For this reason, along with some other unusual syntactic properties that come hand-in-hand, it’s supposed that these aren’t really relative clauses at all. And not being relative clauses, the “who” in (9) isn’t actually occupying a spot that any other words have to pass through on their way up the tree. That is, movement isn’t blocked like how it normally would be in a genuine relative clause.

Still, Swedish has famously resisted any good analysis. Some researchers have tried to explain the problem away by claiming that what look like relative clauses are actually small clauses — the “Carol a friend” part of the sentence below — since small clauses are happy to have words move out of them.

(12a) Nick considers Carol a friend

(12b) Who does Nick consider _ a friend?

But the structures that words can move out of in Swedish clearly have more in common with noun phrases containing relative clauses, than clauses in and of themselves. In (13), it just doesn’t make sense to think of the verb “träffat” (“meet”) as being followed by a clause, in the same way it did for “consider.”

(13a) Det har jag inte träffat någon som gjort

(13b) that have I not met someone that done

(13c) *That, I haven’t met anyone who has done

So what’s next? Here, it’s important not to miss the forest for the trees. Languages show amazing variation, but given all the ways it could have been, language as a whole also shows incredible uniformity. It’s truly remarkable that almost all the languages we’ve studied carefully so far, regardless of how distant they are from each other in time and space, show similar island effects. Even if Swedish turns out to be a true exception after all is said and done, there’s such an overwhelming tendency in the opposite direction, it begs for some kind of explanation. If our theory is wrong, it means we need to build an even better one, not that we need no theory at all.

And yet the situation isn’t so dire. A recent eye tracking study — the first of its kind to address this specific question — suggests a more nuanced set of facts. Generally, when experimental subjects read through sentences, looking for open spots where a dislocated word might have come from as they process what they’re seeing, they spend relatively less time fixated on the parts of sentences that are syntactic islands, vs. those that aren’t. In other words, by default, readers in these experiments tend to ignore the possibility of finding gaps inside syntactic islands, since our linguistic knowledge rules that out. And in this study, it was found that sentences like the ones in (7) and (13), which seem to show that Swedish can move words out from inside a relative clause, tend to fall somewhere between full-on syntactic islands and structures that typically allow for movement, in terms of where readers look, and for how long. This suggests that Swedish relative clauses are what you might call ‘weak islands,’ letting you move words out of them in some circumstances, but not in others. And this is in line with the fact that not all kinds of constituents (in this case, “why”) can be moved out of these relative clauses, as the unacceptability of the sentence in (14) shows. (In English, the sentence cannot be used to ask why people were late.)

(14a) *Varför känner du många som blev sena till festen _?

(14b) Why know you many who were late to party-the

(14c) *Why do you know many people who were late to the party?

For reasons we don’t yet fully understand, relative clauses in Swedish don’t obviously pattern with relative clauses in English. At the same time, the variation between them isn’t so deep that we’re forced to throw out everything we know about how language works. The search for understanding is an ongoing process, and sometimes the challenges can seem impossible, but sooner or later we usually find a way to puzzle out the problem. And that can only ever serve to shed more light on what we already know!

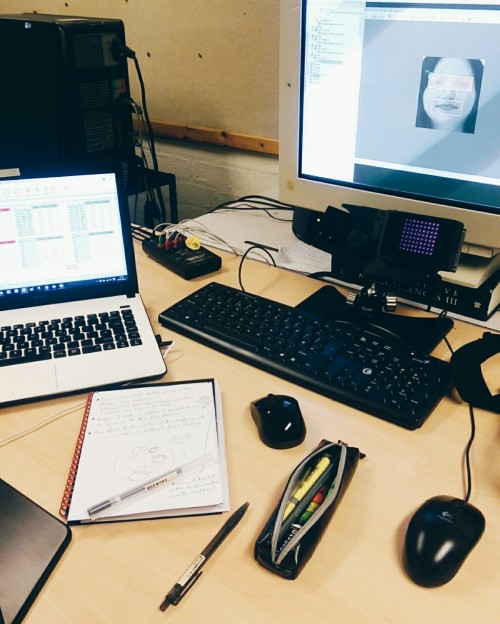

7am // Data analysis requires patience.

Working with the eye-tracker again and I’ve been inputting data for what feels like an eternity; I have >5,000 units to input.

Here’s something cool though: The eye-tracking camera on the right has an infrared illuminator (the square with the purple dots). I can’t actually see those dots with my naked eye (it’s pitch black, I’m looking at it right now and there’s no dots there). Turns out phone camera sensors respond to near-infrared light that human eyes can’t see, so the phone is able to pick up the infrared light that is ‘invisible’ to us. Am-a-zing!

Nope, not kidding. I have actually been writing computer programs with my eyes (and a few voice commands thrown in here and there).

More specifically, I’m using an eye tracking device called the Tobii EyeX. It’s a consumer-level eye tracker which mounts to the bottom of my computer monitor and connects to my computer via USB 3.0. (I’ve written about this type of eye tracking device before, and you can also look at Tobii’s website.)

Okay, so how?…

Good question! And I’m going to answer it with the rest of this post. I’m going to write this in the general style of a how-to guide, in case any of my readers actually want to copy my set up. (To my knowledge, no one else has documented how to do this.) However, even if you have no desire to write Python with your eyes, you might still want to skim the rest of this. Several of the tools that I’m using have potentially much broader applications.

Hardware requirements:

-Tobii EyeX sensor bar (link, < $150)

-external monitor, preferably at least 20 inches but no more than 24 inches (You probably could make this work with a laptop, but having a larger screen area makes the eye tracker much easier to use. 24 inches is the size limit for the EyeX sensor.)

-Modern computer with USB 3.0 support (I have a quad core processor at 3.6 GHz, 8 GB of RAM. I wouldn’t recommend much less than that, unless you want to eliminate the speech commands from the project and use keystrokes instead.)

Software requirements:

-Windows 7 64-bit (should work on Windows 8 or 10, as well. But you may have to make some adjustments.)

-EyeX drivers and associated software (included with the device)

-Dasher (free and open source)

-FreePIE (free and open source)

-Compiler and editor for the language you wish to program in (for this example, I’ll be using Sage and its browser-based interface)

You will also need some sample code from the language you want to program in (so that Dasher can build an appropriate language model). You can ignore this if you only want to write in English (or another natural language).

Got all that? Good, let’s get started.

1. Install the EyeX drivers and mount the sensor according to the manufacturer’s instructions. Run the configuration to get EyeX to work properly with your monitor, and calibrate it to recognize your eyes. All of this is standard for any user of the EyeX, and you should be able to follow instructions from the manufacturer. When you’re finished, you can test your calibration using the “test calibration” option in the EyeX software.

2. Download and install Dasher. We will make some configuration changes to Dasher in the next step, but for now just take a minute to familiarize yourself with Dasher if you haven’t used it before. With the default settings, you can click the mouse inside of Dasher’s window to start writing, and drag the mouse towards the letters you want in order to write. Your text will appear in the box at the top. For more details, see Dasher’s website. (Obviously, skip this experimentation/exploration step if you aren’t physically able to use the mouse. You can experiment with Dasher once we get it working with the eye tracker. I just included this step so that, as much as possible, new users can get used to one new technology at a time.)

3. (Skip this step if you just want to write in English, not computer code.) Close Dasher, and locate its program files on your hard drive. On Windows 7, I have a Dasher folder in Program Files (x86). Inside of that is another folder for my specific version, in this case Dasher 4.11. In your Dasher folder, find the folder labeled system.rc. We are going to add two new plaintext files to this folder. First, an alphabet file. This defines all of the valid symbols for your language. I would recommend starting with an existing file, so make a copy of alphabet.englishC.xml and put it in the same folder. Name your copy alphabet.<yourlanguage>.xml, and open the file in a plain text editor. Make the following changes:

-Change line 5 so that the text in double quotes is the name of your language (this will be shown in the user interface).

-Change line 9 by replacing 'training_english_GB.txt' with the filename for your training text. (I used 'training_sage.txt')

-Remove the section labeled “Combining accents” (lines 13 through 27)

-(optional and language-dependent) I additionally removed line 127 (the tab character) because my editor does auto-formatting with tab characters, and unexpected results were generated if Dasher inserted a tab character and then attempted to delete that character with the backspace key (which it regularly does if you take a “shortcut” to the character you want through another box). I decided that it was simpler to leave the autoformatting on in my editor (I really didn’t want to manually insert six or seven tab characters before a line of code, as can happen in Python!) and insert my tabs manually with voice commands. You will have to decide how to handle these sorts of formatting and autoformatting issues yourself, based on the language and editor that you choose.

It is also at this point that you can reorder or regroup any of the characters if you want, in a way that makes sense for the language you are writing.

Save your changes, and now you have an alphabet file. Now, let’s specify the training text. Create a plain text file titled training_<yourlanguage>.txt (or whatever you called it in the alphabet file). Paste all of your sample code into that file. (More is better! But Dasher will also learn from what you write over time.) Save your training text file in the system.rc folder, just like the alphabet file.

Now, open Dasher and make sure that you can access your new language. Click on the Prefs button, and look in the alphabet selection box. You should be able to locate the language that you just specified. Click on it, click okay, and try writing in your programming language. If everything is working, proceed to the next step. Otherwise, go back and check that you got all of the syntax and file names/locations correct. (A few troubleshooting details: If it works, but Dasher warns you that you have no training text, then your alphabet file is correct but you need to check the training text file (or file specified for training in the alphabet file). If it works, but the predictions seem bad, then you probably need more training text. If it works, but Dasher takes too long to load, try deleting some of your training text.)
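The file handling in this step can also be scripted. What follows is only a rough sketch, not part of my actual setup: the function name `prepare_dasher_files` and its arguments are made up for illustration, it assumes the folder you pass in is the system.rc folder containing alphabet.englishC.xml, and it assumes UTF-8 is an acceptable encoding for the training text. It copies the English alphabet file under the new name and concatenates your sample code into the training file. The manual edits to the alphabet file listed above still have to be done by hand.

```python
import shutil
from pathlib import Path


def prepare_dasher_files(dasher_dir, language, sample_files):
    """Copy the English alphabet file and build a training text from sample code.

    Assumption: dasher_dir is the system.rc folder and already contains
    alphabet.englishC.xml. The copied alphabet file is NOT edited here.
    """
    dasher_dir = Path(dasher_dir)
    alphabet = dasher_dir / f"alphabet.{language}.xml"
    training = dasher_dir / f"training_{language}.txt"

    # Start from the existing English alphabet file.
    shutil.copyfile(dasher_dir / "alphabet.englishC.xml", alphabet)

    # Concatenate every sample file into one plain-text training file.
    with training.open("w", encoding="utf-8") as out:
        for sample in sample_files:
            out.write(Path(sample).read_text(encoding="utf-8"))
            out.write("\n")

    return alphabet, training
```

You would call it with something like `prepare_dasher_files(folder, "sage", list_of_sample_files)` and then open the new alphabet file to make the edits by hand.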

4. Okay, now let’s configure the Dasher user interface. Click on the Prefs button. Under alphabet selection, make sure your desired language is selected. On the control tab, select 'Eyetracker Mode' for 'Control Style'. Then, select 'Mouse Input' for the 'Input Device'. You can select the speed and choose whether or not you want Dasher to automatically adjust its speed. (I like this feature, but it’s up to you.) Finally, choose the method(s) for starting and stopping. Personally, I like the “Start with mouse position – Centre circle” option for use with the eye tracker. You might also want to enable the mouse button control and/or spacebar, especially until you get comfortable with the eye tracker (obviously only useful if you have a way to push the spacebar or click the mouse button). I would not recommend using the “stop outside of canvas” option, because it makes the motion feel extremely jerky when the eye tracker briefly leaves the active area. All of that is personal preference, though. There’s no harm in experimenting to see what you like best.

5. More Dasher configuration. In the preferences window, click on the Application tab. Under “Application style:” select “Direct entry”. This means that Dasher will automatically send keystrokes to the currently active window. This lets you write directly into your code editor of choice.

Let’s test again. With Dasher open, open Microsoft Notepad. Click in Notepad so that your cursor is in the text entry field. Then go to Dasher and (without clicking on anything besides the canvas – you don’t want to change focus) start writing with the mouse. Your text should appear in Notepad. Once that’s working, give it a try with your desired code editor.

6. Download and install FreePIE.

7. Let’s actually use this eyetracker! At the end of this post, I have pasted the code for a simple mouse control script. Take this script and paste it into a new script in FreePIE. Click on script menu and then run script (or press F5). (Tumblr doesn’t support any sort of code environment, so, if you have trouble getting the script to work, let me know and I can email you the actual file.) Then press the Z key to start the eye-controlled mouse movements. If everything is working correctly the mouse cursor should now follow your gaze around the screen. I’ve also set up this script so that you can start or stop the eye mouse using speech. If you have a decent microphone (preferably headset), make sure that that device is selected as your default recording device in Windows. Then, with the script running, you can say “start eye mouse” or “stop eye mouse” to start or stop the mouse movement. (If the computer has a hard time recognizing your voice, you can improve this by doing some of the training in Windows speech recognition. You can find it in the control panel.)

8. Now all that’s left to do is chain all the pieces together. Get FreePIE open with your mouse script running. Make sure that Dasher is running, and also open your code editor. Make sure that the focus is in the text entry field of the code editor, press the Z key or use speech to start the eye mouse, and finally start Dasher (by whatever method you selected in Dasher’s configuration). You should now be able to enter code with only your eyes.

9. (Optional) Depending on your needs, you may want to add some additional commands to the FreePIE script. For example, I have written some code that lets me enter tab characters by voice, and also navigate within text by voice (e.g. “go to end of line”, “go to beginning of line”, “go up line”, “go down line”, etc.). Each of these is just a couple of lines in FreePIE. Feel free to reach out to me if you need some help with adding these sorts of additional commands.
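To give a feel for how the voice navigation commands in step 9 can be organized, here is a minimal sketch in plain Python rather than my actual FreePIE code. Everything in it is an assumption for illustration: the table `VOICE_COMMANDS`, the phrase “insert tab”, the key names (plain strings, not FreePIE’s own key constants), and the `send_key` callback, which stands in for whatever call actually presses a key in your environment.

```python
# Hypothetical phrase-to-key table. The key names are plain strings chosen
# for this sketch, not FreePIE's key constants.
VOICE_COMMANDS = {
    "go to end of line": "End",
    "go to beginning of line": "Home",
    "go up line": "Up",
    "go down line": "Down",
    "insert tab": "Tab",
}


def keys_for_phrases(heard, send_key):
    """Call send_key(name) for each recognized phrase and return the names sent.

    `heard` is a list of recognized phrases; unknown phrases are ignored.
    `send_key` is a stand-in for the real key-press call.
    """
    sent = []
    for phrase in heard:
        key = VOICE_COMMANDS.get(phrase)
        if key is not None:
            send_key(key)
            sent.append(key)
    return sent
```

Keeping the phrases in one table means adding a command is a one-line change. Inside FreePIE you would check each phrase with speech.said, the same way the mouse script below does for “start eye mouse”.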

That’s a lot of text, so let’s finish off with a screenshot. Here’s what the whole thing looks like when it’s up and running. On the left-hand side of the screen you see Dasher. On the right-hand side is Firefox showing Sage. FreePIE is running but minimized.

[Image description: the image shows a screenshot of a Windows 7 desktop with two visible applications. On the left-hand side of the screen is Dasher. The majority of that window is filled with a collection of different colored squares and typable characters. There is a cross in the center of the window, and a red line shows where the user is currently pointing. On the right-hand side of the screen is a Firefox web browser window. The active tab is labeled Palindromes, and most of the window is occupied by text fields showing Sage code and output.]

The script for FreePIE:

#Use Z or speech to toggle on/off
import ctypes
import math

def update():
    #Called on every new sample from the eye tracker
    global prevX
    global prevY

    #Settings
    smoothingConstant = 0.2 #between 0 and 1; 0 means no cursor movement, 1 means use raw data

    rawxcord = tobiiEyeX.gazePointInPixelsX
    rawycord = tobiiEyeX.gazePointInPixelsY
    diagnostics.watch(rawxcord)
    diagnostics.watch(rawycord)

    #Move a fraction of the way from the previous position toward the raw gaze point
    xcord = prevX + (rawxcord - prevX) * smoothingConstant
    ycord = prevY + (rawycord - prevY) * smoothingConstant

    if enabled:
        ctypes.windll.user32.SetCursorPos(int(xcord), int(ycord))

    prevX = xcord
    prevY = ycord

if starting:
    #Runs once, when the script starts
    enabled = False
    prevX = 0
    prevY = 0
    tobiiEyeX.update += update

toggle = keyboard.getPressed(Key.Z)
start = speech.said("start eye mouse")
stop = speech.said("stop eye mouse")

if toggle:
    enabled = not enabled

if start:
    enabled = True

if stop:
    enabled = False
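If you want to get a feel for the smoothing constant without the eye tracker attached, the smoothing step from the script can be pulled out into plain Python. This is only a sketch for experimenting: the function names `smooth` and `smooth_track` are mine, and the gaze samples you feed it are made up rather than read from the EyeX.

```python
def smooth(prev, raw, smoothing_constant=0.2):
    """One smoothing step: move a fraction of the way from prev toward raw."""
    return prev + (raw - prev) * smoothing_constant


def smooth_track(samples, smoothing_constant=0.2, start=(0.0, 0.0)):
    """Apply the smoothing step to a list of (x, y) gaze samples.

    Returns the list of smoothed cursor positions, one per sample.
    """
    x, y = start
    positions = []
    for raw_x, raw_y in samples:
        x = smooth(x, raw_x, smoothing_constant)
        y = smooth(y, raw_y, smoothing_constant)
        positions.append((x, y))
    return positions
```

With a constant of 0.2, the cursor covers 20% of the remaining distance to the gaze point on each update. A steady gaze pulls the cursor in quickly, while a single jittery sample barely moves it. Raising the constant makes the cursor more responsive but shakier.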

Within the past year, multiple companies have released eyetrackers costing hundreds, instead of (tens of) thousands, of dollars. These new devices are aimed at gaming and multimedia applications, but they are still amazingly useful as assistive technology. The two main competitors at this point seem to be Eye Tribe and Tobii EyeX.

I purchased an EyeX unit this March, and it has significantly expanded what I can do with my computer. I will write later about the specifics of my setup, but today I just want to survey some of the development happening on the EyeX and other low-cost eye tracking devices (much of the software works across multiple devices) that is relevant to disability. (There is also significant gaming development on the platform, but it largely relies on multiple input devices and would not be accessible to many physically disabled people. Plus, Tobii will tell you all about that.)

To be clear, I’m saying that these devices have great accessibility potential, not that they are currently ready made computer access solutions. At present, using these devices as an accessibility tool requires at least moderate technical skills.

Okay, without further ado, here are some software projects I find interesting:

- OptiKey is an on-screen keyboard and mouse emulator specifically designed for eye tracking input. Free and open source. Windows only.

- Project Iris uses eye tracking to control the mouse. It also allows you to define active screen regions and triggers actions when you look at them. Many potential uses for accessible gaming. Paid. Windows only.

- Gaze Speaker is a self contained environment for speech generation by eye movements. Also includes other tools like an email client, a simple web browser, and a file browser, all designed to be accessed by eye alone. Free and open source.

- FreePIE lets you write Python scripts that take input from many, many different devices, including the EyeX. You can use that input to trigger just about any action you can imagine, provided you can figure out how to write the code. Probably best for geeks, but extremely powerful for meeting custom needs. Free and open source.

Why you might be counting in the wrong language

Learning numbers in a European language has probably affected your early maths ability.

(…) And in English, words like “twelve” or “eleven” don’t give many clues as to the structure of the number itself (these names actually come from the Old Saxon words ellevan and twelif, meaning “one left” and “two left”, after 10 has been subtracted).