#neuroscience

How the brain navigates cities

Everyone knows the shortest distance between two points is a straight line. However, when you’re walking along city streets, a straight line may not be possible. How do you decide which way to go?

A new MIT study suggests that our brains are actually not optimized to calculate the so-called “shortest path” when navigating on foot. Based on a dataset of more than 14,000 people going about their daily lives, the MIT team found that instead, pedestrians appear to choose paths that seem to point most directly toward their destination, even if those routes end up being longer. They call this the “pointiest path.”

This strategy, known as vector-based navigation, has also been seen in studies of animals, from insects to primates. The MIT team suggests vector-based navigation, which requires less brainpower than actually calculating the shortest route, may have evolved to let the brain devote more power to other tasks.

“There appears to be a tradeoff that allows computational power in our brain to be used for other things: 30,000 years ago, to avoid a lion, or now, to avoid a perilous SUV,” says Carlo Ratti, a professor of urban technologies in MIT’s Department of Urban Studies and Planning and director of the Senseable City Laboratory. “Vector-based navigation does not produce the shortest path, but it’s close enough to the shortest path, and it’s very simple to compute it.”

Ratti is the senior author of the study, which appeared in Nature Computational Science. Christian Bongiorno, an associate professor at Université Paris-Saclay and a member of MIT’s Senseable City Laboratory, is the study’s lead author. Joshua Tenenbaum, a professor of computational cognitive science at MIT and a member of the Center for Brains, Minds, and Machines and the Computer Science and Artificial Intelligence Laboratory (CSAIL), is also an author of the paper.

Vector-based navigation

Twenty years ago, while a graduate student at Cambridge University, Ratti walked the route between his residential college and his departmental office nearly every day. One day, he realized that he was actually taking two different routes: one on the way to the office and a slightly different one on the way back.

“Surely one route was more efficient than the other, but I had drifted into adopting two, one for each direction,” Ratti says. “I was consistently inconsistent, a small but frustrating realization for a student devoting his life to rational thinking.”

At the Senseable City Laboratory, one of Ratti’s research interests is using large datasets from mobile devices to study how people behave in urban environments. Several years ago, the lab acquired a dataset of anonymized GPS signals from cell phones of pedestrians as they walked through Boston and Cambridge, Massachusetts, over a period of one year. Ratti thought that these data, which included more than 550,000 paths taken by more than 14,000 people, could help to answer the question of how people choose their routes when navigating a city on foot.

The research team’s analysis of the data showed that instead of choosing the shortest routes, pedestrians chose routes that were slightly longer but minimized their angular deviation from the destination. That is, they chose paths that let them face their endpoint more directly as they started the route, even if a path that began by heading more to the left or right might actually have ended up being shorter.

“Instead of calculating minimal distances, we found that the most predictive model was not one that found the shortest path, but instead one that tried to minimize angular displacement — pointing directly toward the destination as much as possible, even if traveling at larger angles would actually be more efficient,” says Paolo Santi, a principal research scientist in the Senseable City Lab and at the Italian National Research Council, and a corresponding author of the paper. “We have proposed to call this the pointiest path.”

This was true for pedestrians in Boston and Cambridge, which have a convoluted network of streets, and in San Francisco, which has a grid-style street layout. In both cities, the researchers also observed that people tended to choose different routes when making a round trip between two destinations, just as Ratti did back in his graduate school days.

“When we make decisions based on angle to destination, the street network will lead you to an asymmetrical path,” Ratti says. “Based on thousands of walkers, it is very clear that I am not the only one: Human beings are not optimal navigators.”
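The contrast between the two strategies can be illustrated on a toy street network. The sketch below is a deliberately simplified stand-in and not the authors' model. It assumes a hypothetical five-intersection map (`COORDS`, `EDGES`) and a greedy rule: at every corner, the walker takes the unvisited street that points most directly at the destination. Dijkstra's algorithm supplies the true shortest path for comparison.

```python
import heapq
import math

# Hypothetical toy street network (not data from the study):
# intersection name -> (x, y) position in arbitrary distance units.
COORDS = {
    "A": (0.0, 0.0),    # origin
    "E1": (6.0, 0.0),   # street that starts out aimed straight at B...
    "E2": (7.0, -4.0),  # ...then bends away before reaching it
    "N1": (5.0, 5.0),   # diagonal route that starts 45 degrees off target
    "B": (10.0, 0.0),   # destination
}
EDGES = [("A", "E1"), ("E1", "E2"), ("E2", "B"), ("A", "N1"), ("N1", "B")]


def build_graph(edges):
    """Undirected adjacency list: every street can be walked both ways."""
    graph = {node: [] for node in COORDS}
    for u, v in edges:
        graph[u].append(v)
        graph[v].append(u)
    return graph


def dist(u, v):
    """Straight-line length of the street between two intersections."""
    (x1, y1), (x2, y2) = COORDS[u], COORDS[v]
    return math.hypot(x2 - x1, y2 - y1)


def path_length(path):
    return sum(dist(u, v) for u, v in zip(path, path[1:]))


def shortest_path(graph, start, goal):
    """Dijkstra's algorithm: the route a GPS router would return."""
    queue = [(0.0, start, [start])]
    settled = set()
    while queue:
        d, node, path = heapq.heappop(queue)
        if node == goal:
            return path
        if node in settled:
            continue
        settled.add(node)
        for nxt in graph[node]:
            if nxt not in settled:
                heapq.heappush(queue, (d + dist(node, nxt), nxt, path + [nxt]))
    return None


def angle_off_target(node, nxt, goal):
    """Angle (radians) between the street node->nxt and the beeline node->goal."""
    (x0, y0), (x1, y1), (xg, yg) = COORDS[node], COORDS[nxt], COORDS[goal]
    street = math.atan2(y1 - y0, x1 - x0)
    beeline = math.atan2(yg - y0, xg - x0)
    diff = abs(street - beeline) % (2 * math.pi)
    return min(diff, 2 * math.pi - diff)


def greedy_pointiest_path(graph, start, goal):
    """Simplified vector-based rule (an assumption, not the paper's model):
    at each corner, take the unvisited street pointing most directly at the
    goal. Returns None if the walker hits a dead end."""
    path, visited = [start], {start}
    while path[-1] != goal:
        node = path[-1]
        options = [n for n in graph[node] if n not in visited]
        if not options:
            return None
        nxt = min(options, key=lambda n: angle_off_target(node, n, goal))
        path.append(nxt)
        visited.add(nxt)
    return path


if __name__ == "__main__":
    graph = build_graph(EDGES)
    out = greedy_pointiest_path(graph, "A", "B")
    back = greedy_pointiest_path(graph, "B", "A")
    best = shortest_path(graph, "A", "B")
    print(out, round(path_length(out), 2))    # ['A', 'E1', 'E2', 'B'] 15.12
    print(back, round(path_length(back), 2))  # ['B', 'N1', 'A'] 14.14
    print(best, round(path_length(best), 2))  # ['A', 'N1', 'B'] 14.14
```

On this made-up map, the greedy rule leaves A along the street aimed straight at B. That street later bends away, so the outbound route (about 15.12 units) ends up longer than the shortest one (about 14.14). Walking back from B, the same rule picks the diagonal street. This reproduces, on a small scale, the round-trip asymmetry Ratti describes.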

Moving around in the world

Studies of animal behavior and brain activity, particularly in the hippocampus, have also suggested that the brain’s navigation strategies are based on calculating vectors. This type of navigation is very different from the computer algorithms used by your smartphone or GPS device, which can calculate the shortest route between any two points nearly flawlessly, based on the maps stored in their memory.

Without access to those kinds of maps, the animal brain has had to come up with alternative strategies to navigate between locations, Tenenbaum says.

“You can’t have a detailed, distance-based map downloaded into the brain, so how else are you going to do it? The more natural thing might be to use information that’s more available to us from our experience,” he says. “Thinking in terms of points of reference, landmarks, and angles is a very natural way to build algorithms for mapping and navigating space based on what you learn from your own experience moving around in the world.”

“As smartphones and portable electronics increasingly couple human and artificial intelligence, it is becoming increasingly important to better understand the computational mechanisms used by our brain and how they relate to those used by machines,” Ratti says.

Our brains have a “fingerprint” too

An EPFL scientist has pinpointed the signs of brain activity that make up our brain fingerprint, which – like our regular fingerprint – is unique.

“I think about it every day and dream about it at night. It’s been my whole life for five years now,” says Enrico Amico, a scientist and SNSF Ambizione Fellow at EPFL’s Medical Image Processing Laboratory and the EPFL Center for Neuroprosthetics. He’s talking about his research on the human brain in general, and on brain fingerprints in particular. He learned that every one of us has a brain “fingerprint” and that this fingerprint constantly changes in time. His findings have just been published in Science Advances.

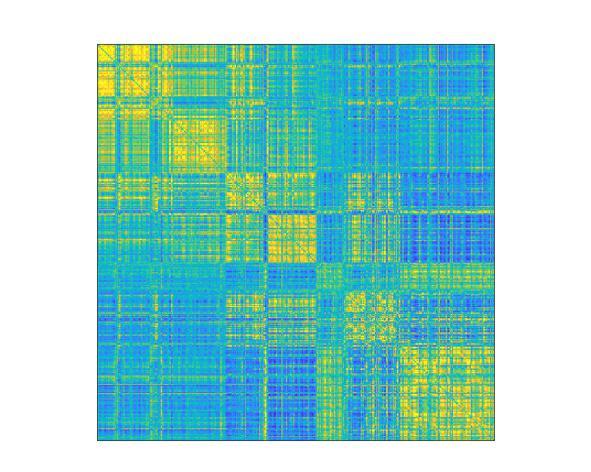

“My research examines networks and connections within the brain, and especially the links between the different areas, in order to gain greater insight into how things work,” says Amico. “We do this largely using MRI scans, which measure brain activity over a given time period.” His research group processes the scans to generate graphs, represented as colorful matrices, that summarize a subject’s brain activity. This type of modeling technique is known in scientific circles as network neuroscience or brain connectomics. “All the information we need is in these graphs, which are commonly known as ‘functional brain connectomes.’ The connectome is a map of the neural network. These connectomes tell us what subjects were doing during their MRI scan: whether they were resting or performing some other task, for example. Our connectomes change based on what activity was being carried out and what parts of the brain were being used,” says Amico.

Two scans are all it takes

A few years ago, neuroscientists at Yale University studying these connectomes found that every one of us has a unique brain fingerprint. Comparing the graphs generated from MRI scans of the same subjects taken a few days apart, they were able to correctly match up the two scans of a given subject nearly 95% of the time. In other words, they could accurately identify an individual based on their brain fingerprint. “That’s really impressive because the identification was made using only functional connectomes, which are essentially sets of correlation scores,” says Amico.
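The matching step can be sketched in a few lines of Python with NumPy. This is a minimal illustration under stated assumptions and not the Yale or EPFL pipeline. Each connectome is taken to be the matrix of Pearson correlations between regional time series. Subjects are matched by correlating the edges of their two connectomes and picking the best match. The data come from a hypothetical simulator, `simulate_subject_scans`, in which each subject has a fixed random mixing matrix.

```python
import numpy as np


def functional_connectome(timeseries):
    """Pearson correlation between every pair of regions.

    timeseries: array of shape (n_frames, n_regions)."""
    return np.corrcoef(timeseries, rowvar=False)


def edge_vector(connectome):
    """Flatten the upper triangle (diagonal excluded) into one vector of edges."""
    rows, cols = np.triu_indices_from(connectome, k=1)
    return connectome[rows, cols]


def identification_accuracy(session1, session2):
    """Fraction of subjects whose session-2 connectome correlates best with
    their own session-1 connectome (subject i is index i in both lists)."""
    a = np.array([edge_vector(c) for c in session1])
    b = np.array([edge_vector(c) for c in session2])
    n = len(a)
    # rows: session-2 subjects, columns: session-1 subjects
    similarity = np.corrcoef(b, a)[:n, n:]
    best_match = similarity.argmax(axis=1)
    return float(np.mean(best_match == np.arange(n)))


def simulate_subject_scans(n_subjects=10, n_regions=20, n_frames=1200, seed=0):
    """Hypothetical data: each subject has a fixed random mixing matrix, so
    their regional signals keep the same correlation structure across scans,
    while the moment-to-moment activity differs between the two sessions."""
    rng = np.random.default_rng(seed)
    session1, session2 = [], []
    for _ in range(n_subjects):
        mixing = rng.normal(size=(n_regions, n_regions))
        for session in (session1, session2):
            latent = rng.normal(size=(n_frames, n_regions))
            session.append(functional_connectome(latent @ mixing))
    return session1, session2


if __name__ == "__main__":
    s1, s2 = simulate_subject_scans()
    print("long scans: ", identification_accuracy(s1, s2))
    s1, s2 = simulate_subject_scans(n_frames=15)
    print("short scans:", identification_accuracy(s1, s2))
```

Lowering `n_frames` mimics a shorter scan, which is the question Amico went on to ask. How many frames correspond to a given duration, such as 1 minute and 40 seconds, depends on the scanner's repetition time, which the article does not state.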

(Image caption: Map of functional brain connectomes. Credit: © 2021 EPFL)

He decided to take this finding one step further. In previous studies, brain fingerprints were identified using MRI scans that lasted several minutes. But he wondered whether these prints could be identified after just a few seconds, or whether there was a specific point in time when they appear and, if so, how long that moment would last. “Until now, neuroscientists have identified brain fingerprints using two MRI scans taken over a fairly long period. But do the fingerprints actually appear after just five seconds, for example, or do they need longer? And what if fingerprints of different brain areas appeared at different moments in time? Nobody knew the answer. So, we tested different time scales to see what would happen,” says Amico.

A brain fingerprint in just 1 minute and 40 seconds

His research group found that seven seconds wasn’t long enough to capture useful data, but that around 1 minute and 40 seconds was. “We realized that the information needed for a brain fingerprint to unfold could be obtained over very short time periods,” says Amico. “There’s no need for an MRI that measures brain activity for five minutes, for example. Shorter time scales could work too.” His study also showed that the first brain fingerprints to appear come from the sensory areas of the brain, particularly the areas related to eye movement, visual perception and visual attention. As time goes by, regions of the frontal cortex, which are associated with more complex cognitive functions, also start to reveal information unique to each of us.

The next step will be to compare the brain fingerprints of healthy people with those of patients suffering from Alzheimer’s disease. “Based on my initial findings, it seems that the features that make a brain fingerprint unique steadily disappear as the disease progresses,” says Amico. “It gets harder to identify people based on their connectomes. It’s as if a person with Alzheimer’s loses his or her brain identity.”

Along these lines, potential applications might include early detection of neurological conditions in which brain fingerprints disappear. Amico’s technique can also be used in patients affected by autism or stroke, or even in subjects with drug addictions. “This is just another little step towards understanding what makes our brains unique: the opportunities that this insight might create are limitless,” says Amico.

Sense of smell is our most rapid warning system

The ability to detect and react to the smell of a potential threat is a precondition of our and other mammals’ survival. Using a novel technique, researchers at Karolinska Institutet have been able to study what happens in the brain when the central nervous system judges a smell to represent danger. The study, which is published in PNAS, indicates that negative smells associated with unpleasantness or unease are processed earlier than positive smells and trigger a physical avoidance response.

“The human avoidance response to unpleasant smells associated with danger has long been seen as a conscious cognitive process, but our study shows for the first time that it’s unconscious and extremely rapid,” says the study’s first author Behzad Iravani, researcher at the Department of Clinical Neuroscience, Karolinska Institutet.

The olfactory organ takes up about five per cent of the human brain and enables us to distinguish between many millions of different smells. A large proportion of these smells are associated with a threat to our health and survival, such as the smells of chemicals and rotten food. Odour signals reach the brain within 100 to 150 milliseconds after being inhaled through the nose.

Measuring the olfactory response

The survival of all living organisms depends on their ability to avoid danger and seek rewards. In humans, the olfactory sense seems particularly important for detecting and reacting to potentially harmful stimuli.

It has long been a mystery just which neural mechanisms are involved in the conversion of an unpleasant smell into avoidance behaviour in humans.

One reason for this is the lack of non-invasive methods of measuring signals from the olfactory bulb. The bulb is the first part of the rhinencephalon (literally “nose brain”) and has direct (monosynaptic) connections to the important central parts of the nervous system that help us detect and remember threatening and dangerous situations and substances.

Researchers at Karolinska Institutet have now developed a method that, for the first time, makes it possible to measure signals from the human olfactory bulb, which processes smells and can in turn transmit signals to parts of the brain that control movement and avoidance behaviour.

The fastest warning system

Their results are based on three experiments in which participants were asked to rate their experience of six different smells, some positive, some negative. Meanwhile, the researchers measured the electrophysiological activity of the participants’ olfactory bulbs in response to each smell.

“It was clear that the bulb reacts specifically and rapidly to negative smells and sends a direct signal to the motor cortex within about 300 ms,” says the study’s last author Johan Lundström, associate professor at the Department of Clinical Neuroscience, Karolinska Institutet. “The signal causes the person to unconsciously lean back and away from the source of the smell.”

He continues:

“The results suggest that our sense of smell is important to our ability to detect dangers in our vicinity, and much of this ability is more unconscious than our response to danger mediated by our senses of vision and hearing.”

In Neurodegenerative Diseases, Brain Immune Cells Have a “Ravenous Appetite” for Sugar

At the beginning of neurodegenerative disease, the immune cells of the brain – the “microglia” – take up glucose, a sugar molecule, to a much greater extent than hitherto assumed. Studies by the DZNE, the LMU München and the LMU Klinikum München, published in the journal “Science Translational Medicine”, come to this conclusion. These results are of great significance for the interpretation of brain scans depicting the distribution of glucose in the brain. Furthermore, such image-based data could potentially serve as a biomarker to non-invasively capture the response of microglia to therapeutic interventions in people with dementia.

In humans, the brain is one of the organs with the highest energy consumption, which can change with age and also due to disease, e.g. as a result of Alzheimer’s disease. “Energy metabolism can be recorded indirectly via the distribution of glucose in the brain. Glucose is an energy carrier. It is therefore assumed that where glucose accumulates in the brain, energy demand and consequently brain activity are particularly high,” says Dr. Matthias Brendel, deputy director of the Department of Nuclear Medicine at LMU Klinikum München.

The measuring technique commonly used for this purpose is a special variant of positron emission tomography (PET), known as “FDG-PET” in technical jargon. Examined individuals are administered an aqueous solution containing radioactive glucose that distributes in the brain. Radiation emitted by the sugar molecules is then measured by a scanner and visualized. “However, the spatial resolution is insufficient to determine in which cells the glucose accumulates. Ultimately, you get a mixed signal that stems not only from neurons, but also from microglia and other cell types found in the brain,” says Brendel.

Cellular Precision

“The textbook view is that the signal from FDG-PET comes mainly from neurons, because they are considered the largest consumers of energy in the brain,” says Christian Haass, research group leader at DZNE and professor of biochemistry at LMU Munich. “We wanted to put this concept to the test and found that the signal actually comes predominantly from the microglia. This applies at least in the early stages of neurodegenerative disease, when nerve damage is not yet so advanced. In this case, we see that the microglia take up large amounts of sugar. This appears to be necessary to allow them to mount an acute, highly energy-consuming immune response. This can be directed, for example, against disease-related protein aggregates. Only in the later course of the disease does the PET signal appear to be dominated by neurons.”
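A simple linear mixing model helps picture this mixed signal. It is offered here as an illustrative assumption and is not the model used in the study. Each voxel's FDG uptake is treated as the sum of contributions from the cell types it contains. If voxel $v$ holds $n_c$ cells of type $c$, and each takes up glucose at an average rate $u_c$, then

$$
S_v = \sum_{c} n_c\,u_c,
\qquad
\text{share}_c = \frac{n_c\,u_c}{\sum_{c'} n_{c'}\,u_{c'}},
\qquad
c \in \{\text{neurons},\ \text{microglia},\ \text{other cells}\}.
$$

PET measures only $S_v$, so the scan alone cannot reveal which term dominates. In this picture, an outnumbered cell type can still supply most of the signal if its per-cell uptake $u_c$ rises enough. That is the situation Haass describes for microglia early in disease.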

The findings of the Munich researchers are based on laboratory investigations as well as PET studies in about 30 patients with dementia – either Alzheimer’s disease or so-called four-repeat tauopathy. The findings are supported, for instance, by studies on mice whose microglia were either largely removed from the brain or, so to speak, deactivated. In addition, a newly developed technique was used that allowed cells derived from the brains of mice to be sorted according to cell type and their sugar uptake to be measured separately.

Consequences for Research and Practice

“FDG-PET is used in dementia research as well as in the context of clinical care,” Brendel says. “In this respect, our results are relevant for the correct interpretation of such brain images. They also shed new light on some hitherto puzzling observations. However, this does not call into question existing diagnoses. Rather, it is about a better understanding of the disease mechanisms.”

Haass draws further conclusions from the current results: “In recent years, it has become evident that microglia play a crucial, protective role in Alzheimer’s and other neurodegenerative diseases. It would be very helpful to be able to monitor the activity of these cells non-invasively, for example their response to drugs, in particular to determine whether a therapy is working. Our findings suggest that this may be possible by PET.”

Stress on mothers can influence biology of future generations

Biologists at the University of Iowa found that, under certain conditions, roundworm mothers subjected to heat stress passed the legacy of that exposure, through modifications to their genes, not only to their offspring but even to their offspring’s children.

The researchers, led by Veena Prahlad, associate professor in the Department of Biology and the Aging Mind and Brain Initiative, looked at how a mother roundworm reacts when she senses danger, such as a change in temperature, which can be harmful or even fatal to the animal. In a study published last year, the biologists discovered the mother roundworm releases serotonin when she senses danger. The serotonin travels from her central nervous system to warn her unfertilized eggs, where the warning is stored, so to speak, and then passed to offspring after conception.

Examples of such cascades abound, even in humans. Studies have shown that pregnant women affected by famine in the Netherlands from 1944 to 1945, known as the Dutch Hunger Winter, gave birth to children who were influenced by that episode as adults, with higher-than-average rates of obesity, diabetes, and schizophrenia.

In this study, the biologists wanted to find out how the memory of stress exposure was stored in the egg cell.

“Genes have ‘memories’ of past environmental conditions that, in turn, affect their expression even after these conditions have changed,” Prahlad explains. “How this ‘memory’ is established and how it persists past fertilization, embryogenesis, and after the embryo develops into an adult is not clear. This is because during embryogenesis, most organisms typically reset any changes that have been made to genes because of the genes’ past activity.”

Prahlad and her team turned to the roundworm, a creature regularly studied by scientists, for clues. They exposed mother roundworms to unexpected stresses and found the stress memory was ingrained in the mother’s eggs through the actions of a protein called the heat shock transcription factor, or HSF1. The HSF1 protein is present in all plants and animals and is activated by changes in temperature, salinity, and other stressors.

The team found that HSF1 recruits another protein, an enzyme called a histone 3 lysine 9 (H3K9) methyltransferase. The latter normally acts during embryogenesis to silence genes and erase the memory of their prior activity.

However, Prahlad’s team observed something else entirely.

“We found that HSF1 collaborates with the mechanisms that normally act to ‘reset’ the memory of gene expression during embryogenesis to, instead, establish this stress memory,” Prahlad says.

One of these newly silenced genes encodes the insulin receptor, which is central to metabolic changes associated with diabetes in humans and which, when silenced, alters an animal’s physiology, metabolism, and stress resilience. Because these silencing marks persisted in offspring, their stress-response strategy was switched from one that depended on the ability to be highly responsive to stress to one relying instead on mechanisms that decreased stress responsiveness but provided long-term protection from stressful environments.

“What we found all the more remarkable was that if the mother was exposed to stress for a short period of time, only progeny that developed from her germ cells that were subjected to this stress in utero had this memory,” Prahlad says. “The progeny of these progeny (the mother’s grandchildren) had lost this memory. However, if the mother was subjected to a longer period of stress, the grandchildren generation retained this memory. Somehow the ‘dose’ of maternal stress exposure is recorded in the population.”

The researchers plan to investigate these changes further. HSF1 is required for stress resistance, but increased levels of both HSF1 and the silencing mark are also associated with cancer and metastasis. Because HSF1 exists in many organisms, its newly discovered interaction with H3K9 methyltransferase to drive gene silencing is likely to have larger repercussions.

Warm milk makes you sleepy — peptides could explain why

According to time-honored advice, drinking a glass of warm milk at bedtime will encourage a good night’s rest. Milk’s sleep-enhancing properties are commonly ascribed to tryptophan, but scientists have also discovered a mixture of milk peptides, called casein tryptic hydrolysate (CTH), that relieves stress and enhances sleep. Now, researchers reporting in ACS’ Journal of Agricultural and Food Chemistry have identified specific peptides in CTH that might someday be used in new, natural sleep remedies.

According to the U.S. Centers for Disease Control and Prevention, one-third of U.S. adults don’t get enough sleep. Sedatives, such as benzodiazepines and zolpidem, are commonly prescribed for insomnia, but they can cause side effects, and people can become addicted to them. Many sedatives work by activating the GABA receptor, a protein in the brain that suppresses nerve signaling. Scientists have also discovered several natural peptides, or small pieces of proteins, that bind the GABA receptor and have anti-anxiety and sleep-enhancing effects. For example, treating a protein in cow’s milk, called casein, with the digestive enzyme trypsin produces the mixture of sleep-enhancing peptides known as CTH. Within this mixture, a specific peptide known as α-casozepine (α-CZP) has been identified that could be responsible for some of these effects. Lin Zheng, Mouming Zhao and colleagues wondered if they could find other, perhaps more powerful, sleep-enhancing peptides in CTH.

The researchers first compared the effects of CTH and α-CZP in mouse sleep tests, finding that CTH showed better sleep-enhancing properties than α-CZP alone. This result suggested that other sleep-promoting peptides besides α-CZP exist in CTH. The team then used mass spectrometry to identify bioactive peptides released from CTH during simulated gastric digestion, and they virtually screened these peptides for binding to the GABA receptor and for the ability to cross the blood-brain barrier. When the strongest candidates were tested in mice, the best one (called YPVEPF) increased the number of mice that fell asleep quickly by about 25% and the sleep duration by more than 400% compared to a control group. In addition to this promising peptide, others in CTH should be explored that might enhance sleep through other pathways, the researchers say.
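Percentages of this size are easy to misread. Suppose the sleep-duration figure is read as a relative increase over the control group. That reading is an interpretation, since the article gives no absolute sleep times. On that reading, an increase of more than 400% means more than five times the control duration, not four times:

$$
t_{\text{YPVEPF}} > \left(1 + \frac{400}{100}\right) t_{\text{control}} = 5\,t_{\text{control}}.
$$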

Unraveling the mystery of why we overeat

Eating is one of life’s greatest pleasures, and overeating is one of life’s growing problems.

In 2019, researchers from The Stuber Lab at the University of Washington School of Medicine discovered that certain cells light up in obese mice and prevent signals that indicate satiety, or feeling full. Now comes a deeper dive into what role these cells play.

A study published in the journal Neuron reports on the function of glutamatergic neurons in mice. These cells are located in the lateral hypothalamic area of the brain, a hub that regulates motivated behaviors, including feeding.

The researchers found that these neurons communicate with two different brain regions: the lateral habenula, a key brain region in the pathophysiology of depression, and the ventral tegmental area, best known for the major role it plays in motivation, reward and addiction.

“We found these cells are not a monolithic group, and that different flavors of these cells do different things,” said Stuber, a joint UW professor of anesthesiology and pain medicine and pharmacology. He works at the UW Center for the Neurobiology of Addiction, Pain, and Emotion, and was the paper’s senior author. Mark Rossi, acting instructor of anesthesiology and pain medicine, is the lead author.

The study is another step in understanding the brain circuits involved in eating disorders.

The Stuber Lab studies the function of major cell groups in the brain’s reward circuit, and characterizes their role in addiction and mental illness – in hopes of finding treatments. One question is whether these cells can be targeted by drugs without harming other parts of the brain.

Their recent study systematically analyzed the lateral hypothalamic glutamate neurons. Researchers found that, when mice are being fed, the neurons projecting to the lateral habenula are more responsive than those projecting to the ventral tegmental area. This suggests that the habenula-projecting neurons may play a greater role in guiding feeding.

Researchers also looked at the influence of the hormones leptin and ghrelin on how we eat. Both leptin and ghrelin are thought to regulate behavior through their influence on the mesolimbic dopamine system, a key component of the reward pathway in the brain. But little has been known about how these hormones influence neurons in the lateral hypothalamic area of the brain. The investigators found that leptin blunts the activity of neurons that project to the lateral habenula and increases the activity of neurons that project to the ventral tegmental area. But ghrelin does the opposite.

This study indicated that brain circuits that control feeding at least partially overlap with brain circuitry involved in drug addiction.

The study adds to the growing body of research on the role of the brain in obesity, which the World Health Organization calls a global epidemic. New data from the Centers for Disease Control and Prevention showed 16 states now have obesity rates of 35% or higher. That’s an increase of four states – Delaware, Iowa, Ohio and Texas – in just a year.

“Caramel receptor” identified—New insights from the world of chemical senses

Who doesn’t like the smell of caramel? However, the olfactory receptor that contributes decisively to this sensory impression was unknown until now. Researchers at the Leibniz Institute for Food Systems Biology at the Technical University of Munich (LSB) have now solved the mystery and identified the “caramel receptor”. The new knowledge contributes to a better understanding of the molecular coding of food flavors.

Furaneol is a natural odorant that gives numerous fruits such as strawberries, but also coffee or bread, a caramel-like scent. Likewise, the substance has long played an important role as a flavoring agent in food production. Nevertheless, until now it was unknown which of the approximately 400 different types of olfactory receptors humans use to perceive this odorant.

Odorant receptors put to the test

This is not an isolated case. Despite intensive research, the odorant spectrum is still known for only about 20 percent of human olfactory receptors. To help elucidate the recognition spectra, the team led by Dietmar Krautwurst at LSB is using a collection of all human olfactory receptor genes and their most common genetic variants to decipher their function using a test cell system.

“The test system we developed is unique in the world. We have genetically modified the test cells so that they act like small biosensors for odorants. In doing so, we specify exactly which type of odorant receptor they present on their cell surface. In this way, we can specifically investigate which receptor reacts how strongly to which odorant,” explains Dietmar Krautwurst. In the present study, the researchers examined a total of 391 human odorant receptor types and 225 of their most common variants.

Only two odorants for one receptor

“As our results show, furaneol activated only the OR5M3 odorant receptor. Even one thousandth of a gram of the odorant per liter is sufficient to generate a signal,” says the study’s first author, Franziska Haag. In addition, the team investigated whether the receptor also reacts to other odorants. To this end, the team examined 186 other substances that are key odorants and therefore play a major role in shaping the aroma of food. Of these, however, only homofuraneol was able to significantly activate the receptor.

This odorant is structurally closely related to furaneol. As shown by previous LSB studies, it imparts a caramel-like aroma to fruits such as durian. “We hypothesize that the receptor we identified, OR5M3, has a very specific recognition spectrum for food ingredients that smell caramel-like. In the future, this knowledge could be used to develop new biotechnologies that can be used to quickly and easily check the sensory quality of foods along the entire value chain,” says Dietmar Krautwurst. Although there is still a long way to go to understand the complex interplay between the approximately 230 key food-related odorants and human olfactory receptors, a start has been made, the molecular biologist adds.

Veronika Somoza, Director of the Leibniz Institute, adds: “In the future, we will continue to use our extensive odorant and receptor collections at the Institute to help elucidate the molecular basis of human olfactory perception. After all, this significantly influences our food choices and thus our health.”

(Image credit: G. Olias / LSB)

Teaching Ancient Brains New Tricks

The science of physics has strived to find the best possible explanations for understanding matter and energy in the physical world across all scales of space and time. Modern physics is filled with complex concepts and ideas that have revolutionized the way we see (and don’t see) the universe. The mysteries of the physical world are increasingly being revealed by physicists who delve into non-intuitive, unseen worlds, involving the subatomic, quantum and cosmological realms. But how do the brains of advanced physicists manage this feat, of thinking about worlds that can’t be experienced?

In a recently published paper in npj Science of Learning, researchers at Carnegie Mellon University have found a way to decode the brain activity associated with individual abstract scientific concepts pertaining to matter and energy, such as fermion or dark matter.

Robert Mason, senior research associate; Reinhard Schumacher, professor of physics; and Marcel Just, the D.O. Hebb University Professor of Psychology, at CMU investigated the thought processes of their fellow CMU physics faculty concerning advanced physics concepts by recording their brain activity using functional Magnetic Resonance Imaging (fMRI).

Unlike many other neuroscience studies that use brain imaging, this one was not out to find “the place in the brain” where advanced scientific concepts reside. Instead, this study’s goal was to discover how the brain organizes highly abstract scientific concepts. An encyclopedia organizes knowledge alphabetically, a library organizes it according to something like the Dewey Decimal System, but how does the brain of a physicist do it?

The study examined whether the activation patterns evoked by the different physics concepts could be grouped in terms of concept properties. One of the most novel findings was that the physicists’ brains organized the concepts into those with measurable versus immeasurable size. Here on Earth, for most of us mortals, everything physical is measurable, given the right ruler, scale or radar gun. But for a physicist, the magnitude of some concepts, such as dark matter, neutrinos or the multiverse, is not measurable. And in the physicists’ brains, the measurable and immeasurable concepts are organized separately.

Of course, some parts of the brain organization of the physics professors resembled the organization in physics students’ brains, such as the grouping of concepts that have a periodic nature. Light, radio waves and gamma rays have a periodic nature, but concepts like buoyancy and the multiverse do not.

But how can this interpretation of the brain activation findings be assessed? The study team found a way to generate predictions of the activation patterns of each of the concepts. But how can the activation evoked by dark matter be predicted? The team recruited an independent group of physics faculty to rate each concept on each of the hypothesized organizing dimensions on a 1-7 scale. For example, a concept like “duality” would tend to be rated as immeasurable (i.e., low on the measurable magnitude scale). A computational model then determined the relation between the ratings and the activation patterns for all of the concepts except one, and then used that relation to predict the activation of the left-out concept. The accuracy of this model was 70% on average, well above the 50% expected by chance. This result indicates that the underlying organization is well understood. This procedure is demonstrated for the activation associated with the concept of dark matter in the accompanying figure.
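The leave-one-out logic described above can be illustrated with a small sketch. This is not the CMU team's model: it assumes a plain linear mapping from each concept's 1-7 ratings to its activation pattern, fit by least squares, and scores each held-out prediction by normalized rank accuracy (the fraction of other concepts whose observed pattern the prediction resembles less than the correct one), a metric whose chance level is 0.5. The function name `loo_rank_accuracy` and the synthetic data are hypothetical.

```python
import numpy as np


def loo_rank_accuracy(ratings: np.ndarray, activations: np.ndarray) -> float:
    """Leave-one-out prediction of each concept's activation pattern (a sketch).

    ratings:     (n_concepts, n_dims) mean 1-7 ratings per concept (hypothetical input)
    activations: (n_concepts, n_voxels) observed pattern per concept (hypothetical input)
    Returns the mean normalized rank accuracy; chance is 0.5.
    """
    n = ratings.shape[0]
    # Intercept column lets the linear map absorb a baseline activation level
    X = np.hstack([ratings, np.ones((n, 1))])
    scores = []
    for left_out in range(n):
        train = np.arange(n) != left_out
        # Fit ratings -> activations on every concept except the held-out one
        W, *_ = np.linalg.lstsq(X[train], activations[train], rcond=None)
        predicted = X[left_out] @ W
        # Correlate the prediction with every concept's observed pattern
        sims = np.array([np.corrcoef(predicted, activations[j])[0, 1] for j in range(n)])
        # Fraction of the other concepts that the true concept outranks
        others = np.delete(sims, left_out)
        scores.append(np.mean(sims[left_out] > others))
    return float(np.mean(scores))


if __name__ == "__main__":
    # Synthetic data in which activations really are a linear function of ratings
    rng = np.random.default_rng(0)
    ratings = rng.uniform(1, 7, size=(20, 3))
    true_map = rng.normal(size=(3, 50))
    activations = ratings @ true_map + rng.normal(scale=0.5, size=(20, 50))
    print(round(loo_rank_accuracy(ratings, activations), 3))
```

Because the held-out concept never contributes to the fit, a score well above 0.5 in a setup like this means the rating dimensions carry information that generalizes to unseen concepts, which is the kind of inference the study draws from its 70% result.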

(Image caption: Based on how other physics concepts are represented in terms of their underlying dimensions, a mathematical model can accurately predict the brain activation pattern of a new concept like dark matter)

Creativity of Thought

Near the beginning of the 20th century, post-Newtonian physicists radically advanced the understanding of space, time, matter, energy and subatomic particles. The new concepts arose not from their perceptual experience, but from the generative capabilities of the human brain. How was this possible?

The neurons in the human brain have a large number of computational capabilities with various characteristics, and experience determines which of those capabilities are put to use in various possible ways in combination with other brain regions to perform particular thinking tasks. For example, every healthy brain is prepared to learn the sounds of spoken language, but an infant’s experience in a particular language environment shapes which phonemes of which language are learned.

The genius of civilization has been to use these brain capabilities to develop new skills and knowledge. What makes all of this possible is the adaptability of the human brain. We can use our ancient brains to think of new concepts, which are organized along new, underlying dimensions. An example of a “new” physics dimension significant in 20th-century, post-Newtonian physics is “immeasurability” (a property of dark matter, for example), which stands in contrast to the “measurability” of classical physics concepts (such as torque or velocity). This new dimension is present in the brains of all university physics faculty tested. The scientific advances in physics were built with the new capabilities of human brains.

Another striking finding was the large degree of commonality across physicists in how their brains represented the concepts. Even though the physicists were trained in different universities, languages and cultures, there was a similarity in brain representations. This commonality in conceptual representations arises because the brain system that automatically comes into play for processing a given type of information is the one that is inherently best suited to that processing. As an analogy, consider that the parts of one’s body that come into play to perform a given task are the best suited ones: to catch a tennis ball, a closing hand automatically comes into play, rather than a pair of knees or a mouth or an armpit. Similarly, when physicists are processing information about oscillation, the brain system that comes into play is the one that would normally process rhythmic events, such as dance movements or ripples in a pond. And that is the source of the commonality across people. It is the same brain regions in everyone that are recruited to process a given concept.

So the secret of teaching ancient brains new tricks, as the advance of civilization has repeatedly done, is to empower creative thinkers to develop new understandings and inventions, by building on or repurposing the inherent information processing capabilities of the human brain. When these newly developed concepts are communicated to others, they root themselves in the same information processing capabilities of the recipients’ brains as the original developers used. Mass communication and education can propagate the advances to entire populations. Thus the march of science, technology and civilization continues to be driven by the most powerful entity on Earth, the human brain.

Unraveling the Mystery of Touch

Some parts of the body—our hands and lips, for example—are more sensitive than others, making them essential tools in our ability to discern the most intricate details of the world around us.

This ability is key to our survival, enabling us to safely navigate our surroundings and quickly understand and respond to new situations.

It is perhaps unsurprising that the brain devotes considerable space to these sensitive skin surfaces that are specialized for fine, discriminative touch and which are continually gathering detailed information via the sensory neurons that innervate them.

But how does the connection between sensory neurons and the brain result in such exquisitely sensitive skin?

A new study led by researchers at Harvard Medical School has unveiled a mechanism that may underlie the greater sensitivity of certain skin regions. The research, conducted in mice and published Oct. 11 in Cell, shows that the overrepresentation of sensitive skin surfaces in the brain develops in early adolescence and can be pinpointed to the brain stem.

Moreover, the sensory neurons that populate the more sensitive parts of the skin and relay information to the brain stem form more connections and stronger ones than neurons in less sensitive parts of the body.

Sensitive skin regions

“This study provides a mechanistic understanding of why more brain real estate is devoted to surfaces of the skin with high touch acuity,” said senior author David Ginty, the Edward R. and Anne G. Lefler Professor of Neurobiology at Harvard Medical School. “Basically, it’s a mechanism that helps explain why one has greater sensory acuity in the parts of the body that require it.”

While the study was done in mice, the overrepresentation of sensitive skin regions in the brain is seen across mammals—suggesting that the mechanism may be generalizable to other species.

From an evolutionary perspective, mammals have dramatically varied body forms, which translates into sensitivity in different skin surfaces. For example, humans have highly sensitive hands and lips, while pigs explore the world using highly sensitive snouts. Thus, Ginty said this mechanism could provide the developmental flexibility for different species to develop sensitivity in different areas.

Moreover, the findings, while fundamental, could someday help illuminate the touch abnormalities seen in certain neurodevelopmental disorders in humans.

Scientists have long known that certain body parts are overrepresented in the brain—as depicted by the brain’s sensory map, called the somatosensory homunculus, a schematic of human body parts and the corresponding areas in the brain where signals from these body parts are processed. The striking illustration includes cartoonishly oversized hands and lips.

Previously, it was thought that the overrepresentation of sensitive skin regions in the brain could be attributed to a higher density of neurons innervating those skin areas. However, earlier work by the Ginty lab revealed that while sensitive skin does contain more neurons, these extra neurons are not sufficient to account for the additional brain space.

“We noticed that there was a rather meager number of neurons that were innervating the sensitive skin compared to what we’d expect,” said co-first author Brendan Lehnert, a research fellow in neurobiology, who led the study with Celine Santiago, also a research fellow in the Ginty lab.

“It just wasn’t adding up,” Ginty added.

To investigate this contradiction, the researchers conducted a series of experiments in mice that involved imaging the brain and neurons as neurons were stimulated in different ways.

First, they examined how different skin regions were represented in the brain throughout development. Early in development, the sensitive, hairless skin on a mouse’s paw was represented in proportion to the density of sensory neurons.

However, between adolescence and adulthood, this sensitive skin became increasingly overrepresented in the brain, even though the density of neurons remained stable—a shift that was not seen in less sensitive, hairy paw skin.

“This immediately told us that there’s something more going on than just the density of innervation of nerve cells in the skin to account for this overrepresentation in the brain,” Ginty said.

“It was really unexpected to see changes over these postnatal developmental timepoints,” Lehnert added. “This might be just one of many changes over postnatal development that are important for allowing us to represent the tactile world around us, and helping us gain the ability to manipulate objects in the world through the sensory motor loop that touch is such a special part of.”

Sensory and brain stem neurons

Next, the team determined that the brain stem—the region at the base of the brain that relays information from sensory neurons to more sophisticated, higher-order brain regions—is the location where the enlarged representation of sensitive skin surfaces occurs.

This finding led the researchers to a realization: The overrepresentation of sensitive skin must emerge from the connections between sensory neurons and brain stem neurons.

To probe even further, the scientists compared the connections between sensory neurons and brain stem neurons for different types of paw skin. They found that these connections between neurons were stronger and more numerous for sensitive, hairless skin than for less sensitive, hairy skin. Thus, the team concluded, the strength and number of connections between neurons play a key role in driving overrepresentation of sensitive skin in the brain.

Finally, even when sensory neurons in sensitive skin weren’t stimulated, mice still developed expanded representation in the brain—suggesting that skin type, rather than stimulation by touch over time, causes these brain changes.

“We think we’ve uncovered a component of this magnification that accounts for the disproportionate central representation of sensory space,” Ginty said. “This is a new way of thinking about how this magnification comes about.”

Next, the researchers want to investigate how different skin regions tell the neurons that innervate them to take on different properties, such as forming more and stronger connections when they innervate sensitive skin.

“What are the signals?” Ginty asked. “That’s a big, big mechanistic question.”

And while Lehnert described the study as purely curiosity-driven, he noted that there is a prevalent class of neurodevelopmental disorders in humans called developmental coordination disorders that affect the connection between touch receptors and the brain—and thus might benefit from elucidating further the interplay between the two.

“This is one of what I hope will be many studies that explore on a mechanistic level changes in how the body is represented over development,” Lehnert said. “Celine and I both think this might lead, at some point in the future, to a better understanding of certain neurodevelopmental disorders.”

Research Shows Promising Results for Parkinson’s Disease Treatment

Researchers from Carnegie Mellon University have found a way to make deep brain stimulation (DBS) more precise, resulting in therapeutic effects that outlast what is currently available. The work, led by Aryn Gittis and colleagues in CMU’s Gittis Lab and published in Science, will significantly advance the study of Parkinson’s disease.

DBS allows researchers and doctors to use thin electrodes implanted in the brain to send electrical signals to the part of the brain that controls movement. It is a proven way to help control unwanted movement in the body, but patients must receive continuous electrical stimulation to get relief from their symptoms. If the stimulator is turned off, the symptoms return immediately.

Gittis, an associate professor of biological sciences in the Mellon College of Science and a faculty member in the Neuroscience Institute, said that the new research could change that.

“By finding a way to intervene that has long-lasting effects, our hope is to greatly reduce stimulation time, therefore minimizing side effects and prolonging battery life of implants.”

Gittis set the foundation for this therapeutic approach in 2017, when her lab identified specific classes of neurons within the brain’s motor circuitry that could be targeted to provide long-lasting relief of motor symptoms in Parkinson’s models. In that work, the lab used optogenetics, a technique that uses light to control genetically modified neurons. Optogenetics, however, cannot currently be used on humans.

Since then, she has been trying to find a strategy that is more readily translated to patients suffering from Parkinson’s disease. Her team found success in mice with a new DBS protocol that uses short bursts of electrical stimulation.

“This is a big advance over other existing treatments,” Gittis said. “In other DBS protocols, as soon as you turn the stimulation off, the symptoms come back. This seems to provide longer lasting benefits — at least four times longer than conventional DBS.”

In the new protocol, the researchers target specific neuronal subpopulations in the globus pallidus, an area of the brain in the basal ganglia, with short bursts of electrical stimulation. Gittis said that researchers have been trying for years to find ways to deliver stimulation in such a cell-type specific manner.

“That concept is not new. We used a ‘bottom up’ approach to drive cell type specificity. We studied the biology of these cells and identified the inputs that drive them. We found a sweet spot that allowed us to utilize the underlying biology,” she said.

Teresa Spix, the first author of the paper, said that while there are many strong theories, scientists do not yet fully understand why DBS works.

“We’re sort of playing with the black box. We don’t yet understand every single piece of what’s going on in there, but our short burst approach seems to provide greater symptom relief. The change in pattern lets us differentially affect the cell types,” she said.

Spix, who defended her Ph.D. in July, is excited about the direct connection this research has to clinical studies.

“A lot of times those of us that work in basic science research labs don’t necessarily have a lot of contact with actual patients. This research started with very basic circuitry questions but led to something that could help patients in the near future,” Spix said.

Next, neurosurgeons at Pittsburgh’s Allegheny Health Network (AHN) will use Gittis’ research in a safety and tolerability study in humans. Nestor Tomycz, a neurological surgeon at AHN, said that researchers will soon begin a randomized, double-blind crossover study of patients with idiopathic Parkinson’s disease. The patients will be followed for 12 months to assess improvements in their Parkinson’s disease motor symptoms and the frequency of adverse events.

“Aryn Gittis continues to do spectacular research which is elucidating our understanding of basal ganglia pathology in movement disorders. We are excited that her research on burst stimulation shows a potential to improve upon DBS which is already a well-established and effective therapy for Parkinson’s disease,” Tomycz said.

Donald Whiting, the chief medical officer at AHN and one of the nation’s foremost experts in the use of DBS, said the new protocol could open doors for experimental treatments.

“Aryn is helping us highlight in the animal model things that are going to change the future of what we do for our patients. She’s actually helping evolve the care treatment of Parkinson’s patients for decades to come with her research,” Whiting said.

Tomycz agreed. “This work is really going to help design the future technology that we’re using in the brain and will help us to get better outcomes for these patients.”

Nerve repair, with help from stem cells

A new approach to repairing peripheral nerves marries the regenerating power of gingiva-derived mesenchymal stem cells with a biological scaffold to enable the functional recovery of nerves following a facial injury, according to a study by a cross-disciplinary team from the University of Pennsylvania School of Dental Medicine and Perelman School of Medicine.

Faced with repairing a major nerve injury to the face or mouth, skilled surgeons can take a nerve from an arm or leg and use it to restore movement or sensation to the original site of trauma. This approach, known as a nerve autograft, is the standard of care for nerve repair, but it has its shortcomings. Besides taking a toll on a previously uninjured body part, the procedure doesn’t always result in complete and functional nerve regrowth, especially for larger injuries.

Scientists and clinicians have recently been employing a different strategy for regrowing functional nerves, which uses commercially available scaffolds to guide nerve growth. In experimental approaches, these scaffolds are infused with growth factors and cells to support regeneration. But to date, these efforts have not been completely successful. Recovery can fall short due to a failure to coax large numbers of regenerating axons to cross the graft and then adequately mature and regrow myelin, the insulating material around peripheral nerves that allows them to fire quickly and efficiently.

In an innovative approach to guided nerve repair, shared in the journal npj Regenerative Medicine, the Penn team coaxed human gingiva-derived mesenchymal stem cells (GMSCs) to grow Schwann-like cells, the pro-regenerative cells of the peripheral nervous system that make myelin and neural growth factors. The current work demonstrated that infusing a scaffold with these cells and using them to guide the repair of facial nerve injuries in an animal model had the same effectiveness as an autograft procedure.

“Instead of an autograft, which causes unnecessary morbidity, we wanted to create a biological approach and use the regenerating ability of stem cells,” says Anh Le, senior author on the study and chair and a professor in the Department of Oral and Maxillofacial Surgery/Pharmacology in Penn’s School of Dental Medicine. “To be able to recreate nerve cells in this way is really a new paradigm.”

For more than a decade, Le’s lab has pioneered the use of GMSCs to treat several inflammatory diseases and to regrow a variety of types of craniofacial tissue. Gingival tissue is easily extracted and heals rapidly, offering an accessible source of GMSCs. In fact, gingival tissue is often discarded from routine dental procedures. Le says the potential of GMSCs to help in nerve regrowth also owes in part to the cells’ common lineage. “Embryologically, we know that craniofacial tissue is derived from the same neural crest progenitor cells as nerves,” Le says. “That’s part of the beauty of this system.”

Le and colleagues led by Qunzhou Zhang, now a faculty member at Penn Dental Medicine, were able to apply their previous understanding of GMSCs to grow them in a collagen matrix under specific conditions that encouraged the cells to grow more like Schwann cells; the cells’ identity was confirmed with a variety of genetic markers.

“We observed this very interesting phenomenon,” says Le, “that when we changed that matrix density and suspended the cells three-dimensionally, they changed to have more neural crest properties, like Schwann cells.”

To move the work forward, Le reached out to the Perelman School of Medicine’s D. Kacy Cullen, a bioengineer who has worked on nerve repair for 15 years. Cullen and colleagues have expertise in creating and testing nerve scaffold materials.

Using commercially available scaffolds for nerve growth, the researchers introduced the cells into collagen hydrogel. “The cells migrate into the nerve graft and create a sheet of Schwann cells,” Le says. “By doing so they are forming the functionalized nerve guidance to guide axon generation in the gap left by an injury.”

“To get host Schwann cells all throughout a bioscaffold, you’re basically approximating natural nerve repair,” Cullen says. Indeed, when Le and Cullen’s groups collaborated to implant these grafts into rodents with a facial nerve injury and then tested the results, they saw evidence of a functional repair. The animals had less facial droop than those that received an “empty” graft and nerve conduction was restored. The implanted stem cells also survived in the animals for months following the transplant.

“The animals that received nerve conduits laden with the infused cells had a performance that matched the group that received an autograft for their repair,” he says. “When you’re able to match the performance of the gold-standard procedure without a second surgery to acquire the autograft, that is definitely a technology to pursue further.”

While the current study worked at repairing a small gap in a nerve, the researchers aim to continue refining the method to try to repair larger gaps, as often arise when oral cancer necessitates surgical removal of a tumor. “The field desperately needs what have been dubbed ‘living scaffolds’ to direct the regrowth,” Cullen says.

Le notes that this approach would give patients with oral cancer or facial trauma the opportunity to use their own tissue to recover motor function and sensation and to have cosmetic improvements following a repair.

And while Le’s group focuses on the head and neck, further work on this model could translate to nerve repair in other areas of the body as well. “I’m hopeful we can continue moving this forward towards clinical application,” she says.

Neuroscientists roll out first comprehensive atlas of brain cells

When you clicked to read this story, a band of cells across the top of your brain sent signals down your spine and out to your hand to tell the muscles in your index finger to press down with just the right amount of pressure to activate your mouse or track pad.

A slew of new studies now shows that the area of the brain responsible for initiating this action — the primary motor cortex, which controls movement — has as many as 116 different types of cells that work together to make this happen.

The 17 studies, appearing online in the journal Nature, are the result of five years of work by a huge consortium of researchers supported by the National Institutes of Health’s Brain Research Through Advancing Innovative Neurotechnologies (BRAIN) Initiative to identify the myriad of different cell types in one portion of the brain. It is the first step in a long-term project to generate an atlas of the entire brain to help understand how the neural networks in our head control our body and mind and how they are disrupted in cases of mental and physical problems.

“If you think of the brain as an extremely complex machine, how could we understand it without first breaking it down and knowing the parts?” asked cellular neuroscientist Helen Bateup, a University of California, Berkeley, associate professor of molecular and cell biology and co-author of the flagship paper that synthesizes the results of the other papers. “The first page of any manual of how the brain works should read: Here are all the cellular components, this is how many of them there are, here is where they are located and who they connect to.”

Individual researchers have previously identified dozens of cell types based on their shape, size, electrical properties and which genes are expressed in them. The new studies identify about five times more cell types, though many are subtypes of well-known cell types. For example, cells that release specific neurotransmitters, like gamma-aminobutyric acid (GABA) or glutamate, each have more than a dozen subtypes distinguishable from one another by their gene expression and electrical firing patterns.

While the current papers address only the motor cortex, the BRAIN Initiative Cell Census Network (BICCN) — created in 2017 — endeavors to map all the different cell types throughout the brain, which consists of more than 160 billion individual cells, both neurons and support cells called glia. The BRAIN Initiative was launched in 2013 by then-President Barack Obama.

“Once we have all those parts defined, we can then go up a level and start to understand how those parts work together, how they form a functional circuit, how that ultimately gives rise to perceptions and behavior and much more complex things,” Bateup said.

Knock-in mice

Together with former UC Berkeley professor John Ngai, Bateup and UC Berkeley colleague Dick Hockemeyer have already used CRISPR-Cas9 to create mice in which a specific cell type is labeled with a fluorescent marker, allowing them to track the connections these cells make throughout the brain. For the flagship journal paper, the Berkeley team created two strains of “knock-in” reporter mice that provided novel tools for illuminating the connections of the newly identified cell types, she said.

“One of our many limitations in developing effective therapies for human brain disorders is that we just don’t know enough about which cells and connections are being affected by a particular disease and therefore can’t pinpoint with precision what and where we need to target,” said Ngai, who led UC Berkeley’s Brain Initiative efforts before being tapped last year to direct the entire national initiative. “Detailed information about the types of cells that make up the brain and their properties will ultimately enable the development of new therapies for neurologic and neuropsychiatric diseases.”

Ngai is one of 13 corresponding authors of the flagship paper, which has more than 250 co-authors in all.

Bateup, Hockemeyer and Ngai collaborated on an earlier study to profile all the active genes in single dopamine-producing cells in the mouse’s midbrain, which has structures similar to human brains. This same profiling technique, which involves identifying all the specific messenger RNA molecules and their levels in each cell, was employed by other BICCN researchers to profile cells in the motor cortex. This type of analysis, using a technique called single-cell RNA sequencing, or scRNA-seq, is referred to as transcriptomics.

The scRNA-seq technique was one of nearly a dozen separate experimental methods used by the BICCN team to characterize the different cell types in three different mammals: mice, marmosets and humans. Four of these involved different ways of identifying gene expression levels and determining the genome’s chromatin architecture and DNA methylation status, which is called the epigenome. Other techniques included classical electrophysiological patch clamp recordings to distinguish cells by how they fire action potentials, categorizing cells by shape, determining their connectivity, and looking at where the cells are spatially located within the brain. Several of these used machine learning or artificial intelligence to distinguish cell types.

“This was the most comprehensive description of these cell types, and with high resolution and different methodologies,” Hockemeyer said. “The conclusion of the paper is that there’s remarkable overlap and consistency in determining cell types with these different methods.”

A team of statisticians combined data from all these experimental methods to determine how best to classify or cluster cells into different types and, presumably, different functions based on the observed differences in expression and epigenetic profiles among these cells. While there are many statistical algorithms for analyzing such data and identifying clusters, the challenge was to determine which clusters were truly different from one another — truly different cell types — said Sandrine Dudoit, a UC Berkeley professor and chair of the Department of Statistics. She and biostatistician Elizabeth Purdom, UC Berkeley associate professor of statistics, were key members of the statistical team and co-authors of the flagship paper.

“The idea is not to create yet another new clustering method, but to find ways of leveraging the strengths of different methods and combining methods and to assess the stability of the results, the reproducibility of the clusters you get,” Dudoit said. “That’s really a key message about all these studies that look for novel cell types or novel categories of cells: No matter what algorithm you try, you’ll get clusters, so it is key to really have confidence in your results.”
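Dudoit's point that any algorithm will return clusters, so their stability must be checked, can be made concrete with a small sketch. This is a generic illustration, not the BICCN statistical pipeline: it assumes k-means (with k-means++ seeding) as the clustering method and the adjusted Rand index (ARI) as the agreement measure, reruns the clustering with different random seeds, and reports the mean pairwise ARI between runs. A value near 1 means the runs keep recovering the same partition; values well below 1 suggest the clusters depend on arbitrary choices. All function names and the synthetic data are hypothetical.

```python
import numpy as np
from math import comb


def kmeans_pp_init(X: np.ndarray, k: int, rng: np.random.Generator) -> np.ndarray:
    """k-means++ seeding: later centers favor points far from existing ones."""
    centers = [X[rng.integers(len(X))]]
    for _ in range(1, k):
        # Squared distance from each point to its nearest already-chosen center
        d2 = ((X[:, None, :] - np.array(centers)[None, :, :]) ** 2).sum(axis=2).min(axis=1)
        centers.append(X[rng.choice(len(X), p=d2 / d2.sum())])
    return np.array(centers)


def kmeans(X, k, rng, n_init=5, n_iter=100):
    """Lloyd's algorithm; returns labels from the lowest-inertia of n_init restarts."""
    best_labels, best_inertia = None, np.inf
    for _ in range(n_init):
        centers = kmeans_pp_init(X, k, rng)
        for _step in range(n_iter):
            labels = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2).argmin(axis=1)
            # Move each center to its cluster mean (keep it if the cluster emptied)
            new_centers = np.array([
                X[labels == c].mean(axis=0) if np.any(labels == c) else centers[c]
                for c in range(k)
            ])
            if np.allclose(new_centers, centers):
                break
            centers = new_centers
        labels = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2).argmin(axis=1)
        inertia = ((X - centers[labels]) ** 2).sum()
        if inertia < best_inertia:
            best_labels, best_inertia = labels, inertia
    return best_labels


def adjusted_rand_index(a, b) -> float:
    """Agreement between two labelings of the same cells, corrected for chance."""
    _, a_idx = np.unique(a, return_inverse=True)
    _, b_idx = np.unique(b, return_inverse=True)
    table = np.zeros((a_idx.max() + 1, b_idx.max() + 1), dtype=int)
    np.add.at(table, (a_idx, b_idx), 1)  # contingency table of co-assignments
    sum_cells = sum(comb(int(n), 2) for n in table.ravel())
    sum_rows = sum(comb(int(n), 2) for n in table.sum(axis=1))
    sum_cols = sum(comb(int(n), 2) for n in table.sum(axis=0))
    expected = sum_rows * sum_cols / comb(len(a), 2)
    max_index = (sum_rows + sum_cols) / 2
    if max_index == expected:  # both labelings trivial; treat as perfect agreement
        return 1.0
    return (sum_cells - expected) / (max_index - expected)


def stability(X, k, n_runs=10, seed=0) -> float:
    """Mean pairwise ARI across clusterings that differ only in random seed."""
    rng = np.random.default_rng(seed)
    runs = [kmeans(X, k, rng) for _ in range(n_runs)]
    pairs = [(i, j) for i in range(n_runs) for j in range(i + 1, n_runs)]
    return float(np.mean([adjusted_rand_index(runs[i], runs[j]) for i, j in pairs]))


if __name__ == "__main__":
    # Three well-separated synthetic "cell types" in a 2-D feature space
    rng = np.random.default_rng(1)
    X = np.vstack([rng.normal(loc=c, scale=0.5, size=(30, 2))
                   for c in [(0, 0), (10, 0), (0, 10)]])
    for k in (3, 6):
        print(k, round(stability(X, k), 3))
```

In a sketch like this, the score can be compared across several values of k: a sharp drop as k grows hints that the finer clusters are not reproducible. A fuller analysis would also resample the cells and compare different algorithms, in the spirit of combining methods that Dudoit describes.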

Bateup noted that the number of individual cell types identified in the new study depended on the technique used and ranged from dozens to 116. One finding, for example, was that humans have about twice as many different types of inhibitory neurons as excitatory neurons in this region of the brain, while mice have five times as many.

“Before, we had something like 10 or 20 different cell types that had been defined, but we had no idea if the cells we were defining by their patterns of gene expression were the same ones as those defined based on their electrophysiological properties, or the same as the neuron types defined by their morphology,” Bateup said.

“The big advance by the BICCN is that we combined many different ways of defining a cell type and integrated them to come up with a consensus taxonomy that’s not just based on gene expression or on physiology or morphology, but takes all of those properties into account,” Hockemeyer said. “So, now we can say this particular cell type expresses these genes, has this morphology, has these physiological properties, and is located in this particular region of the cortex. So, you have a much deeper, granular understanding of what that cell type is and its basic properties.”

Dudoit cautioned that future studies could show that the number of cell types identified in the motor cortex is an overestimate, but the current studies are a good start in assembling a cell atlas of the whole brain.

“Even among biologists, there are vastly different opinions as to how much resolution you should have for these systems, whether there is this very, very fine clustering structure or whether you really have higher level cell types that are more stable,” she said. “Nevertheless, these results show the power of collaboration and pulling together efforts across different groups. We’re starting with a biological question, but a biologist alone could not have solved that problem. To address a big challenging problem like that, you want a team of experts in a bunch of different disciplines that are able to communicate well and work well with each other.”

(Image caption: Brain slice from a transgenic mouse, in which genetically defined neurons in the cerebral cortex are labeled with a red fluorescent reporter gene. Credit: Tanya Daigle, courtesy of the Allen Institute)

New study uncovers brain circuits that control fear responses

Researchers at the Sainsbury Wellcome Centre, University College London, have discovered a brain mechanism that enables mice to override their instincts based on previous experience.

The study, published in Neuron, identifies a new brain circuit in the ventral lateral geniculate nucleus (vLGN), an inhibitory structure in the brain. The neuroscientists found that when activity in this brain region was suppressed, animals were more likely to seek safety and escape from perceived danger, whereas activation of vLGN neurons completely abolished escape responses to imminent threats.

While it is normal to experience fear or anxiety in certain situations, we can adjust our fear responses depending on our knowledge or circumstances. For example, being woken up by loud blasts and bright lights nearby might evoke a fear reaction. But if you have experienced fireworks before, your knowledge will likely prevent such reactions and allow you to watch without fear. On the other hand, if you happen to be in a war zone, your fear reaction might be strongly increased.

While many brain regions have previously been shown to be involved in processing perceived danger and mediating fear reactions, the mechanisms that control these reactions remain unclear. Such control is crucial because its impairment can lead to anxiety disorders such as phobias or post-traumatic stress disorder (PTSD), in which the brain circuits associated with fear and anxiety are thought to become overactive, leading to pathologically heightened fear responses.

The new study from the research group of Professor Sonja Hofer at the Sainsbury Wellcome Centre at University College London took advantage of an established experimental paradigm in which mice escape to a shelter in response to an expanding dark shadow overhead. This looming stimulus simulates a predator approaching the animal from above.

The researchers found that the vLGN could control escape behaviour depending on the animal’s knowledge gained through previous experience, and on its assessment of risk in its current environment. When mice were not expecting a threat and felt safe, the activity of a subset of inhibitory neurons in the vLGN was high, which in turn could inhibit threat reactions. In contrast, when mice expected danger, activity in these neurons was low, which made the animals more likely to escape and seek safety.

“We think the vLGN may be acting as an inhibitory gate that sets a threshold for the sensitivity to a potentially threatening stimulus depending on the animal’s knowledge,” said Alex Fratzl, PhD student in the Hofer lab and first author of the paper.

The next piece of the puzzle the researchers are focusing on is determining which other brain regions the vLGN interacts with to achieve this inhibitory control of defensive reactions. They have already identified one such brain region, the superior colliculus in the midbrain.

“We found that the vLGN specifically inhibits neurons in the superior colliculus that respond to visual threats and thereby specifically blocks the pathway in the brain that mediates reactions to such threats – something the animal sees that could pose a danger like an approaching predator,” said Sonja Hofer, Professor at the Sainsbury Wellcome Centre and corresponding author on the paper.

Although humans rarely need to worry about predators, they still have instinctive fear reactions in certain situations. The hope, therefore, is that clinical scientists may one day be able to determine whether the corresponding brain circuits in humans have a similar function, which could have implications for treating PTSD and other anxiety-related disorders in the future.

(Image caption: Image of the ventral lateral geniculate nucleus (vLGN) with axonal projections (inputs) from different positions in the visual part of the cerebral cortex labelled in different colours. These inputs might give the cerebral cortex the ability to regulate instinctive threat reactions through the vLGN, depending on the animal’s knowledge)

Treating Severe Depression with On-Demand Brain Stimulation

UCSF Health physicians have successfully treated a patient with severe depression by tapping into the specific brain circuit involved in depressive brain patterns and resetting them using the equivalent of a pacemaker for the brain.

The study, which appears in Nature Medicine, represents a landmark success in the years-long effort to apply advances in neuroscience to the treatment of psychiatric disorders.

“This study points the way to a new paradigm that is desperately needed in psychiatry,” said Andrew Krystal, PhD, professor of psychiatry and member of the UCSF Weill Institute for Neurosciences. “We’ve developed a precision-medicine approach that has successfully managed our patient’s treatment-resistant depression by identifying and modulating the circuit in her brain that’s uniquely associated with her symptoms.”

Previous clinical trials have shown limited success for treating depression with traditional deep brain stimulation (DBS), in part because most devices can only deliver constant electrical stimulation, usually only in one area of the brain. A major challenge for the field is that depression may involve different brain areas in different people.

What made this proof-of-principle trial successful was the discovery of a neural biomarker – a specific pattern of brain activity that indicates the onset of symptoms – and the team’s ability to customize a new DBS device to respond only when it recognizes that pattern. The device then stimulates a different area of the brain circuit, creating on-demand, immediate therapy that is unique to both the patient’s brain and the neural circuit causing her illness.

This customized approach alleviated the patient’s depression symptoms almost immediately, Krystal said, in contrast to the four- to eight-week delay typical of standard treatments, and the improvement has lasted throughout the 15 months she has had the implanted device. For patients with long-term, treatment-resistant depression, that result could be transformative.

“I was at the end of the line,” said the patient, who asked to be known by her first name, Sarah. “I was severely depressed. I could not see myself continuing if this was all I’d be able to do, if I could never move beyond this. It was not a life worth living.”

Applying Proven Advances in Neuroscience to Mental Health

The path to this project at UC San Francisco began with a large, multicenter effort sponsored under President Obama’s BRAIN (Brain Research through Advancing Innovative Neurotechnologies) Initiative in 2014.

Through that initiative, UCSF neurosurgeon Edward Chang, MD, and colleagues conducted studies to understand depression and anxiety in patients undergoing surgical treatment for epilepsy, for whom mood disorders are also common. The research team discovered patterns of electrical brain activity that correlated with mood states and identified new brain regions that could be stimulated to relieve depressed mood.

With results from the previous research as a guide, Chang, Krystal, and first author Katherine Scangos, MD, PhD, all members of the Weill Institute, developed a strategy relying on two steps that had never been used in psychiatric research: mapping a patient’s depression circuit and characterizing her neural biomarker.

“This new study puts nearly all the critical findings of our previous research together into one complete treatment aimed at alleviating depression,” said Chang, who is co-senior author with Krystal on the paper and the Joan and Sanford Weill Chair of Neurological Surgery.

The team evaluated the new approach in June 2020 under an FDA investigational device exemption, when Chang implanted a responsive neurostimulation device that he has successfully used in treating epilepsy.

“We were able to deliver this customized treatment to a patient with depression, and it alleviated her symptoms,” said Scangos. “We haven’t been able to do this kind of personalized therapy previously in psychiatry.”

To personalize the therapy, Chang put one of the device’s electrode leads in the brain area where the team had found the biomarker and the other lead in the region of Sarah’s depression circuit where stimulation best relieved her mood symptoms. The first lead constantly monitored activity; when it detected the biomarker, the device signaled the other lead to deliver a tiny (1 mA) dose of electricity for 6 seconds, which caused the neural activity to change.
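The detect-then-stimulate loop described above can be illustrated with a short Python sketch. This is a minimal simulation under stated assumptions, not the device's actual firmware or the team's detection method: the real biomarker detector is not described here, so the sketch assumes a hypothetical scalar "biomarker feature" that counts as detected when it crosses a hypothetical threshold, and it assumes detections are ignored while a dose is already running. Only the 1 mA amplitude and the 6-second duration come from the study description; the names `closed_loop` and `StimEvent` are illustrative.

```python
from dataclasses import dataclass
from typing import List, Tuple

# From the article: each detection triggers a 1 mA dose lasting 6 seconds.
STIM_AMPLITUDE_MA = 1.0
STIM_DURATION_S = 6.0


@dataclass
class StimEvent:
    start_s: float       # when stimulation begins (seconds)
    amplitude_ma: float  # current delivered by the stimulating lead (mA)
    duration_s: float    # how long the dose lasts (seconds)


def closed_loop(samples: List[Tuple[float, float]], threshold: float) -> List[StimEvent]:
    """Simulate on-demand stimulation from monitoring-lead readings.

    samples: (time in seconds, biomarker feature) pairs, in time order.
    ASSUMPTION: the biomarker is "detected" whenever the hypothetical
    feature exceeds `threshold`; the real detection method is not given.
    """
    events: List[StimEvent] = []
    busy_until = float("-inf")  # end time of the dose currently in progress
    for t, feature in samples:
        if t < busy_until:
            # ASSUMPTION: detections during an ongoing dose are ignored.
            continue
        if feature > threshold:
            # Biomarker detected: signal the second lead to stimulate.
            events.append(StimEvent(t, STIM_AMPLITUDE_MA, STIM_DURATION_S))
            busy_until = t + STIM_DURATION_S
    return events


if __name__ == "__main__":
    readings = [(0.0, 0.2), (1.0, 0.9), (3.0, 0.95), (8.0, 0.1), (10.0, 0.8)]
    for ev in closed_loop(readings, threshold=0.5):
        print(ev)
```

With these made-up readings and a threshold of 0.5, the sketch stimulates at 1 s and 10 s; the reading at 3 s falls inside the first 6-second dose and is skipped under the refractory assumption. The point of the design is that stimulation is delivered only when the monitored pattern appears, rather than constantly as in traditional DBS.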

“The effectiveness of this therapy showed that not only did we identify the correct brain circuit and biomarker, but we were able to replicate it at an entirely different, later phase in the trial using the implanted device,” said Scangos. “This success in itself is an incredible advancement in our knowledge of the brain function that underlies mental illness.”

Translating Neural Circuits into New Insights

For Sarah, the past year has offered an opportunity for real progress after years of failed therapies.

“In the early few months, the lessening of the depression was so abrupt, and I wasn’t sure if it would last,” she said. “But it has lasted. And I’ve come to realize that the device really augments the therapy and self-care I’ve learned while being a patient here at UCSF.”

The combination has given her perspective on emotional triggers and irrational thoughts on which she used to obsess. “Now,” she said, “those thoughts still come up, but it’s just…poof…the cycle stops.”

While the approach appears promising, the team cautions that this is just the first patient in the first trial.

“There’s still a lot of work to do,” said Scangos, who has enrolled two other patients in the trial and hopes to add nine more. “We need to look at how these circuits vary across patients and repeat this work multiple times. And we need to see whether an individual’s biomarker or brain circuit changes over time as the treatment continues.”

FDA approval for this treatment is still far down the road, but the study points toward new paths for treating severe depression. Krystal said that understanding the brain circuits underlying depression is likely to guide future non-invasive treatments that can modulate those circuits.

Added Scangos, “The idea that we can treat symptoms in the moment, as they arise, is a whole new way of addressing the most difficult-to-treat cases of depression.”

Mid-pregnancy may be defining period for human brain

About four or five months after conception, a burst of synaptic growth begins in the prefrontal cortex (PFC) of the human fetus. And within this tangled mass of connections, the developing brain acquires the unique properties that make humans capable of abstract thought, language, and complex social interactions.

But what molecular ingredients are needed for this flowering of synapses, and what drives such profound changes in the brain? In two new papers published in the journal Nature, Yale researchers have identified key changes in gene expression and structure within the developing human brain that make it unique among all animal species.

These insights could have profound implications for understanding common developmental or brain disorders, researchers say.

“It is surprising and somewhat disappointing that we still don’t know what makes the human brain different from the brains of other closely related species,” said Nenad Sestan, the Harvey and Kate Cushing Professor of Neuroscience at Yale, professor of comparative medicine, of genetics and of psychiatry, and senior author of both papers. “Knowing this is not just an intellectual curiosity to explain who we are as a species. It may also help us better understand neuropsychiatric disorders such as schizophrenia and autism.”

For the studies, Sestan’s lab team conducted an extensive analysis of gene expression in the prefrontal cortex of humans, macaque monkeys, and mice midway through fetal development, identifying both similarities and differences between the species.

A critical factor in determining outcomes in the developing brain for all of these species, they found, is the concentration of retinoic acid, or RA, a metabolite of vitamin A. Retinoic acid, which is essential for the development of every organ, is tightly regulated in all animals. Too much or too little RA can lead to developmental abnormalities.

In the first paper, a research team led by Mikihito Shibata and Kartik Pattabiraman, both from Yale School of Medicine, found that RA is increased in the prefrontal cortex of humans, mice, and macaques alike during the second trimester, the most crucial time for formation of neural circuitry and connections.

When researchers blocked RA signals in the prefrontal cortex of mice, the animals failed to develop the specific circuits and connectivity in areas of the brain that in humans are essential for working memory and cognition. In humans, this same pathway is also disrupted during development in patients with schizophrenia and autism spectrum disorders, suggesting these disorders may share similar roots during development.

However, a close examination of the gene CYP26B1, which encodes an enzyme that breaks down RA and so limits its activity, revealed important differences between mice and primates. For instance, in mice the gene restricts RA activity in areas outside the animal’s tiny prefrontal cortex. When researchers blocked this gene in the mice, areas of their brains associated with sensory and motor skills came to resemble the synaptic wiring found in the prefrontal cortex. This finding further affirms the crucial role played by RA in the expansion of the prefrontal cortex, and in promoting ever greater brain complexity, in humans and other primates.