#sayitwithscience

Demons in the History of Science

Part one of two: Laplace’s Demon

Some might say that modern-day physicists have it easy: they can appeal to the public with stories of eleven-dimensional universes, time travel, and a quantum world that is stranger than fiction. But the basis of that appeal is the same as it always was and always will be for science: a greater understanding of our environment, ourselves, and knowledge itself.

Like Schrödinger’s cat, the famous thought experiment devised by Erwin Schrödinger, Laplace’s Demon and Maxwell’s Demon are thought experiments that matter for what they reveal about our understanding of the universe. Even if you only read about them for their philosophical interest, they show the kind of insight and beauty that science has always sought to provide.

Jim Al-Khalili, author of Quantum: A Guide for the Perplexed, asserts that fate as a scientific idea was disproved three-quarters of a century ago, citing the discoveries of quantum mechanics as proof. But what does he mean by this? Before those discoveries, it was reasonable to argue for a deterministic universe: a world in which one specific input must produce one specific output, so that the sum of all these actions and their consequences would “determine” the overall outcome, or fate, of that world.

Pierre-Simon Laplace, born on March 23, 1749, was a French mathematician and astronomer whose work did much to found the Bayesian interpretation of probability. He lived before Heisenberg’s Uncertainty Principle and chaos theory, and so he was free to imagine such a deterministic universe:

We may regard the present state of the universe as the effect of its past and the cause of its future. An intellect which at a certain moment would know all forces that set nature in motion, and all positions of all items of which nature is composed, if this intellect were also vast enough to submit these data to analysis, it would embrace in a single formula the movements of the greatest bodies of the universe and those of the tiniest atom; for such an intellect nothing would be uncertain and the future just like the past would be present before its eyes.

Laplace, A Philosophical Essay on Probabilities

Laplace considered what it would mean to know the positions, masses, and velocities of every atom in existence, and imagined a being, later called Laplace’s Demon, that could know all of this information and thus calculate all future events.

According to our current knowledge of physics, in particular the Heisenberg Uncertainty Principle and chaos theory, such a being could not exist: the positions and momenta of atoms cannot be known with enough precision to calculate and predict future events. (By the way, “enough” precision here means infinite precision, because in a chaotic system any initial error, however small, eventually grows until the prediction is useless.) This might be good news for those who believe in free will, since free will would have no place in a deterministic universe governed by Laplace’s Demon.
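To see why chaos demands infinite precision, here is a minimal sketch using the logistic map x → 4x(1 − x), a standard textbook chaotic system (our choice of example, not one from the post). Two starting values that differ by only 10⁻¹⁰ track each other for a while and then become completely unrelated.

```python
def logistic_orbit(x0, steps, r=4.0):
    """Iterate the logistic map x -> r * x * (1 - x) and return every value."""
    orbit = [x0]
    x = x0
    for _ in range(steps):
        x = r * x * (1.0 - x)
        orbit.append(x)
    return orbit

orbit_a = logistic_orbit(0.2, 60)
orbit_b = logistic_orbit(0.2 + 1e-10, 60)  # a "measurement error" of 1e-10

# Print how far apart the two predictions are every 10 steps
for n in range(0, 61, 10):
    print(n, abs(orbit_a[n] - orbit_b[n]))
```

For r = 4 the gap roughly doubles per step on average, so it becomes as large as the values themselves after roughly 30 to 35 steps. Measuring the starting value 1000 times more precisely would buy only about 10 more steps of useful prediction.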

Interestingly enough, The Heisenberg Uncertainty Principle and Chaos Theory are not the only restrictive challenges that scientists have faced in trying to understand the properties and bounds of our universe. The Second Law of Thermodynamics is also of concern to scientists and philosophers alike, as we will learn with the birth of another mind-boggling demon.


Hey everyone! The Say It With Science team invites you to “like” our new Facebook page.

Thanks for following!


Maximum Entropy Distributions

Entropy is an important topic in many fields; it has well-known uses in statistical mechanics, thermodynamics, and information theory. The classical formula for entropy is S = −Σᵢ pᵢ ln pᵢ, where pᵢ is the probability that the system is found in microstate i. But what is this probability distribution? How must the likelihoods of the microstates be configured so that we observe the appropriate macrostates?

In accordance with the second law of thermodynamics, we wish for the entropy to be maximized. If we take the entropy in the limit of large N, we can treat it with calculus as S[φ] = −∫dx φ ln φ, where φ(x) is now a probability density function. Here, S is called a functional (essentially, a function that takes another function as its argument). How can we maximize S? We will proceed using the methods of the calculus of variations and Lagrange multipliers.

First we introduce three constraints. We require normalization, so that ∫dx φ = 1. This is a condition that any probability distribution must satisfy, so that the total probability over the domain of possible values is unity (since we’re asking for the probability of any possible event occurring). We require symmetry, so that the expected value of x is zero (it is equally likely to be in microstates to the left of the mean as it is to be in microstates to the right — note that this derivation is treating the one-dimensional case for simplicity). Then our constraint is ∫dx x·φ = 0. Finally, we will explicitly declare our variance to be σ², so that ∫dx x²·φ = σ².

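Using Lagrange multipliers, we fold the constraints into a single functional. Maximizing S is the same as minimizing ∫dx φ ln φ, so we extremize the augmented functional J[φ] = ∫dx (φ ln φ + λ₀φ + λ₁xφ + λ₂x²φ). Here, the integrand is just the sum of the integrands above, weighted by Lagrange multipliers λₖ for which we’ll be solving. (The constants 1, 0, and σ² on the right-hand sides of the constraints do not affect the variation, so they drop out.)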

Applying the Euler-Lagrange equation gives ln φ + 1 + λ₀ + λ₁x + λ₂x² = 0, so φ = 1/exp(1 + λ₀ + λ₁x + λ₂x²). From here, our symmetry condition forces λ₁ = 0, and the variance and normalization conditions give λ₂ = 1/(2σ²) and e^(−1−λ₀) = 1/√(2πσ²), so that φ(x) = exp(−x²/(2σ²)) / √(2πσ²), which is just the normal (or Gaussian) distribution with mean 0 and variance σ². This remarkable distribution appears in many descriptions of nature, in no small part due to the Central Limit Theorem.
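As a numerical sanity check of this result (our own sketch, not part of the original derivation), we can compare the entropy −∫φ ln φ dx of three densities that all have mean 0 and variance 1: the Gaussian, a uniform density on [−√3, √3], and a Laplace density with scale 1/√2. If the derivation is right, the Gaussian should come out on top, at ½ ln(2πe) ≈ 1.419.

```python
import math

def differential_entropy(pdf, lo, hi, n=100_000):
    """Approximate S[phi] = -∫ phi(x) ln phi(x) dx over [lo, hi] with the midpoint rule."""
    h = (hi - lo) / n
    total = 0.0
    for k in range(n):
        p = pdf(lo + (k + 0.5) * h)
        if p > 0.0:  # phi ln phi -> 0 as phi -> 0, so zero density contributes nothing
            total -= p * math.log(p)
    return total * h

SIGMA = 1.0  # all three densities below have mean 0 and variance SIGMA**2

def gaussian(x):
    return math.exp(-x * x / (2 * SIGMA**2)) / math.sqrt(2 * math.pi * SIGMA**2)

HALF_WIDTH = math.sqrt(3) * SIGMA  # uniform on [-w, w] has variance w**2 / 3
def uniform(x):
    return 1 / (2 * HALF_WIDTH) if abs(x) <= HALF_WIDTH else 0.0

SCALE = SIGMA / math.sqrt(2)  # Laplace with scale b has variance 2 * b**2
def laplace(x):
    return math.exp(-abs(x) / SCALE) / (2 * SCALE)

entropies = {
    "gaussian": differential_entropy(gaussian, -12, 12),
    "laplace": differential_entropy(laplace, -30, 30),
    "uniform": differential_entropy(uniform, -HALF_WIDTH, HALF_WIDTH),
}
for name, s in entropies.items():
    print(f"{name:8s} S = {s:.4f}")
```

This only compares three candidates, so it illustrates rather than proves the variational result; the integration limits are chosen wide enough that the tails are negligible.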


Charge, Parity and Time Reversal (CPT) Symmetry

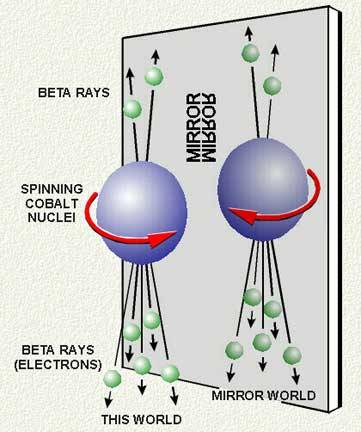

From our everyday experience, it is easy to conclude that nature obeys the laws of physics with absolute consistency. However, several experiments have revealed cases where the laws are not the same for particles and their antiparticles, or for a process and its mirror image. In physics, a symmetry means that the laws stay the same when a particular transformation is applied. Three important discrete symmetries are charge conjugation (C), parity (P), and time reversal (T), and violations of these symmetries cause nature to behave differently under the corresponding transformation. If C symmetry is violated, then the laws of physics are not the same for particles and their antiparticles. P violation means that the laws of physics are different for a process and its mirror image (for example, a particle spinning one way relative to its direction of motion versus one spinning the other way). T violation means that a process and its time-reversed version (the same film run backwards) do not obey the same laws.

In 1956, the Chinese-American physicists Tsung-Dao Lee and Chen Ning Yang suggested that the weak interaction violates P symmetry. This was confirmed in 1957 by an experiment, led by Chien-Shiung Wu, with radioactive cobalt-60 atoms whose nuclear spins were lined up by a magnetic field so that they all pointed in the same direction; the electrons from their beta decay came out preferentially in one direction relative to the spin. It was also found that the weak force does not obey C symmetry either. Oddly enough, the weak force did appear to obey the combined CP symmetry, so the laws of physics seemed to be the same for a particle and the mirror image of its antiparticle.

Surprise, surprise! The assumption of CP symmetry turned out to be wrong. In 1964, James Cronin and Val Fitch showed that the weak force actually violates CP symmetry. They studied the decay of neutral kaons, which are mesons made of a down quark and a strange antiquark (or a down antiquark and a strange quark). Neutral kaons come in two ‘species’ with nearly identical masses but very different lifetimes. The long-lived species can decay into three pions (π) but, if CP symmetry holds, never into two, while the short-lived species decays into two pions. Cronin and Fitch used a 57-foot beamline, expecting that only long-lived kaons would survive to the end of the beam tube. To their astonishment, about one out of every 500 decays there produced two pions, the signature of the short-lived species. Even accounting for time dilation at their relativistic speeds, short-lived kaons should have decayed long before reaching the end of the tube, so these two-pion decays had to come from long-lived kaons. Thus, the experiment showed that the weak force causes a small CP violation that can be seen in kaon decay.
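Why is a two-pion decay at the far end of the tube so telling? This rough sketch compares how many kaons of each species survive 57 feet, with time dilation included through the factor βγ = p/(mc). It assumes a single kaon momentum of 1.1 GeV/c, an illustrative value we chose rather than one stated in the post; the lifetimes and kaon mass are standard reference values.

```python
import math

C = 299_792_458.0         # speed of light, m/s
M_K0_GEV = 0.497611       # neutral kaon mass, GeV/c^2
TAU_SHORT = 8.954e-11     # short-lived kaon mean lifetime, s
TAU_LONG = 5.116e-8       # long-lived kaon mean lifetime, s
BEAMLINE_M = 57 * 0.3048  # the 57-foot beamline from the text, in metres

def survival_fraction(momentum_gev, tau, distance_m):
    """Fraction of kaons still undecayed after distance_m in the lab frame.

    The mean decay length is beta*gamma*c*tau with beta*gamma = p / (m c);
    the time-dilation factor gamma is hidden inside beta*gamma.
    """
    beta_gamma = momentum_gev / M_K0_GEV
    decay_length = beta_gamma * C * tau
    return math.exp(-distance_m / decay_length)

p = 1.1  # ASSUMED kaon momentum in GeV/c, for illustration only
print(f"short-lived: {survival_fraction(p, TAU_SHORT, BEAMLINE_M):.1e}")
print(f"long-lived:  {survival_fraction(p, TAU_LONG, BEAMLINE_M):.2f}")
```

With these inputs the short-lived survival fraction is smaller than 10⁻¹⁰⁰, while more than half of the long-lived kaons are still around, which is why the two-pion decays had to be CP-violating decays of long-lived kaons.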


The Virial Theorem

In the transition from classical to statistical mechanics, are there familiar quantities that remain constant? The Virial theorem defines a law for how the total kinetic energy of a system behaves under the right conditions, and is equally valid for a one particle system or a mole of particles.

Rudolf Clausius, the man responsible for the first mathematical treatment of entropy and for one of the classic statements of the second law of thermodynamics, defined a quantity G (now called the Virial of Clausius):

$$G \equiv \sum_i \mathbf{p}_i \cdot \mathbf{r}_i$$

Where the sum is taken over all the particles in a system. You may want to satisfy yourself (it’s a short derivation) that taking the time derivative gives:

$$\frac{dG}{dt} = 2T + \sum_i \mathbf{F}_i \cdot \mathbf{r}_i$$

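Where T is the total kinetic energy of the system (Σ ½mv²) and dp/dt = F. Now for the theorem: the Virial Theorem states that if the time average of dG/dt is zero (as it is, for example, whenever G stays bounded, since that average is just the change in G divided by the elapsed time), then the following holds (we use angle brackets ⟨·⟩ to denote time averages):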

$$2\langle T\rangle = -\left\langle \sum_i \mathbf{F}_i \cdot \mathbf{r}_i \right\rangle$$

Which may not be surprising. If, however, all the forces derive from a power-law potential V = arⁿ (with r the inter-particle separation), then

$$2\langle T\rangle = n\langle V\rangle$$

Which is pretty good to know! (Here, V is the total potential energy of the particles in the system, not the potential function V = arⁿ.) For an inverse-square force (like the gravitational or Coulomb forces), F ∝ 1/r² ⇒ V ∝ 1/r, so n = −1 and 2⟨T⟩ = −⟨V⟩.

Try it out on a simple harmonic oscillator (like a mass on a spring with no gravity) to see for yourself. The potential is V = ½kx², so n = 2 and the time average of the potential energy should equal the time average of the kinetic energy (the n = 2 matches the coefficient 2 in 2⟨T⟩). Indeed, if x = A sin(√(k/m) · t), then v = A√(k/m) cos(√(k/m) · t); then x² ∝ sin² and v² ∝ cos², and the time averages (over an integral number of periods) of sine squared and cosine squared are both ½. Thus the Virial theorem reduces to

$$2\cdot\tfrac{1}{2}m\cdot\frac{A^2 k}{2m} = 2\cdot\tfrac{1}{2}k\cdot\frac{A^2}{2}$$

Which is easily verified. This doesn’t tell us much about the simple harmonic oscillator; in fact, we had to find the equations of motion before we could even use the theorem! (Try plugging in the force term F=-kx in the first form of the Virial theorem, without assuming that the potential is polynomial, and verify that the result is the same). But the theorem scales to much larger systems where finding the equations of motion is impossible (unless you want to solve an Avogadro’s number of differential equations!), and just knowing the potential energy of particle interactions in such systems can tell us a lot about the total energy or temperature of the ensemble.
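Here is a quick numerical check of 2⟨T⟩ = n⟨V⟩ (our own sketch, with made-up initial conditions): integrate a unit-mass particle in the plane under V = arⁿ with the velocity Verlet method and compare long-time averages. We try n = 2 (a spring, with a = k/2 and k = 1) and n = −1 (an attractive inverse-square force, with a = −1). Both orbits stay bounded, so the time average of dG/dt goes to zero as the theorem requires.

```python
import math

def virial_averages(n, a, r0, v0, dt=1e-3, steps=200_000):
    """Integrate a unit-mass particle in the plane under V(r) = a * r**n using
    velocity Verlet, and return the time averages (<T>, <V>)."""
    def force(x, y):
        r = math.hypot(x, y)
        # F = -dV/dr * (r_vec / r) = -a * n * r**(n - 2) * r_vec
        coeff = -a * n * r ** (n - 2)
        return coeff * x, coeff * y

    (x, y), (vx, vy) = r0, v0
    fx, fy = force(x, y)
    t_sum = v_sum = 0.0
    for _ in range(steps):
        vx += 0.5 * dt * fx  # half kick
        vy += 0.5 * dt * fy
        x += dt * vx         # drift
        y += dt * vy
        fx, fy = force(x, y)
        vx += 0.5 * dt * fx  # half kick
        vy += 0.5 * dt * fy
        t_sum += 0.5 * (vx * vx + vy * vy)  # kinetic energy
        v_sum += a * math.hypot(x, y) ** n  # potential energy
    return t_sum / steps, v_sum / steps

cases = {
    "spring, n = 2": (2, 0.5, (1.0, 0.0), (0.0, 0.5)),
    "inverse square, n = -1": (-1, -1.0, (1.0, 0.0), (0.0, 0.8)),
}
results = {}
for name, (n, a, r0, v0) in cases.items():
    T_avg, V_avg = virial_averages(n, a, r0, v0)
    results[name] = (n, T_avg, V_avg)
    print(f"{name}: 2<T> = {2 * T_avg:.4f}, n<V> = {n * V_avg:.4f}")
```

Note that the code never solves for the orbit in closed form; it only needs the potential, which is the point of the theorem for large systems.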


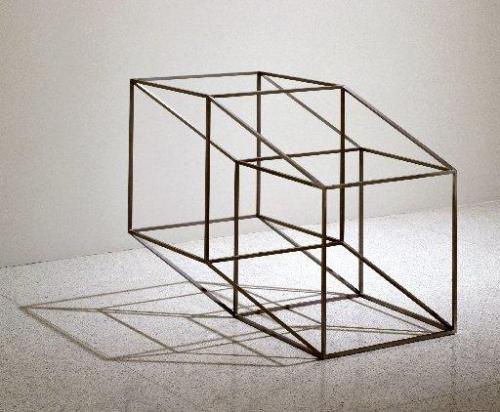

Hypercubes

What is a hypercube (also referred to as a tesseract), you ask? Well, let’s start with what you already know. We know what a cube is: it’s a box! But how else could you describe a cube? A cube is three-dimensional. Its two-dimensional cousin is a square.

A hypercube is to a cube what a cube is to a square: a hypercube is four-dimensional! (Actually, to clarify, “hypercube” can refer to the analogous shape in any number of dimensions. “Normal” cubes are 3-dimensional hypercubes, squares are 2-dimensional “cubes,” and so on. This is because a hypercube is an n-dimensional figure whose edges are aligned with each of the space’s dimensions, mutually perpendicular and all of the same length. A tesseract is specifically a 4-dimensional cube.)

[source]

Another way to think about this can be found here:

Start with a point. Make a copy of the point, and move it some distance away. Connect these points. We now have a segment. Make a copy of the segment, and move it away from the first segment in a new (orthogonal) direction. Connect corresponding points. We now have an ordinary square. Make a copy of the square, and move it in a new (orthogonal) direction. Connect corresponding points. We now have a cube. Make a copy and move it in a new (orthogonal, fourth) direction. Connect corresponding points. This is the tesseract.
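The copy-and-connect recipe translates almost word for word into code. In this sketch (our own, not from the quoted source), each vertex is a tuple of 0/1 coordinates and each edge is a pair of vertex indices; running it up to four dimensions gives the tesseract’s 16 vertices and 32 edges.

```python
def extrude(vertices, edges):
    """Copy the figure, move the copy one unit along a new orthogonal axis,
    and connect corresponding points."""
    k = len(vertices)
    original = [v + (0,) for v in vertices]  # the original, embedded one dimension up
    moved = [v + (1,) for v in vertices]     # the copy, moved along the new axis
    new_edges = (
        list(edges)                               # edges of the original
        + [(i + k, j + k) for i, j in edges]      # edges of the copy
        + [(i, i + k) for i in range(k)]          # connect corresponding points
    )
    return original + moved, new_edges

def hypercube(dim):
    vertices, edges = [()], []  # a point: one vertex with zero coordinates
    for _ in range(dim):
        vertices, edges = extrude(vertices, edges)
    return vertices, edges

for d in range(5):
    v, e = hypercube(d)
    print(d, len(v), len(e))  # dimension, vertices, edges
```

Each extrusion doubles the vertices and gives edges = 2 × (old edges) + (old vertices), which is how the counts 0, 1, 4, 12, 32 arise.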

If a tesseract were to pass through our world, we would only see it in our three dimensions: a cube-like solid that changes shape and seems to do funny things as the tesseract moves and rotates. What we would see is a cross-section of the tesseract. Similarly, if we as three-dimensional bodies were to enter a two-dimensional world, its two-dimensional citizens would “observe” us only as two-dimensional shapes: they could only ever see cross-sections of us.

Why would this be significant? In mathematics, we work with spaces of many dimensions all the time. That does not mean a mathematician has to picture them the way we picture three-dimensional space; the extra dimensions are handled purely mathematically, and they need not correspond to anything in physical reality. From this point of view, three dimensions are neither ordinary nor special.

Yet, through modern mathematics and physics, researchers consider the existence of other (spatial) dimensions. What might be an example of such a theory? String theory is a model of the universe which supposes there may be many more than the usual 4 spacetime dimensions (3 for space, 1 for time). Perhaps understanding these dimensions, though they seem impossible to visualize, will come in handy.

Carl Sagan also explains what a tesseract is.

Image: Peter Forakis, Hyper-Cube, 1967, Walker Art Center, Minneapolis


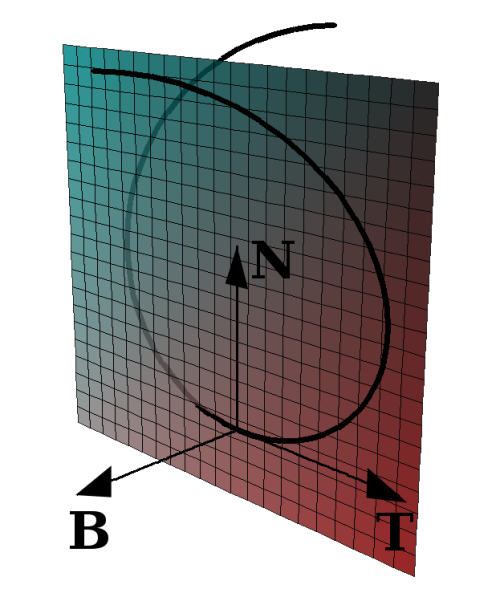

When describing the trajectory of a point particle in space, we can use simple kinematic physics to describe properties of the particle: force, energy, momentum, and so forth. But are there useful measures we can use to describe the qualities of the trajectory itself?

Enter the Frenet-Serret (or TNB) frame. In this post, we’ll show how to construct three (intuitively meaningful) orthonormal vectors that follow a particle in its trajectory. These vectors will be subject to the Frenet-Serret equations, and will also end up giving us a useful way to interpret curvature and torsion.

First, we define arc length: let s(t) = ∫₀ᵗ ||x′(τ)|| dτ. (We give a quick overview of integration in this post.) If you haven’t encountered this definition before, don’t fret: we’re simply multiplying the particle’s speed ||x′(τ)|| by the small time step dτ, which gives the distance traveled during that step, and summing over every infinitesimal time step from τ = 0 to τ = t = “current time”. The post linked to above also explains a short theorem that may illustrate this point more lucidly.
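Here is the “speed times a small time step, summed up” idea as a short midpoint-rule sketch. The two curves are hypothetical examples chosen because their arc lengths have closed forms to compare against. (It needs Python 3.8+ for the three-argument `math.hypot`.)

```python
import math

def arc_length(velocity, t, n=10_000):
    """s(t) = ∫_0^t ||x'(τ)|| dτ via the midpoint rule: speed times a small
    time step, summed over n steps."""
    h = t / n
    return sum(math.hypot(*velocity((k + 0.5) * h)) for k in range(n)) * h

# Hypothetical example 1: helix x(τ) = (cos τ, sin τ, 0.5 τ), constant speed √1.25
helix_velocity = lambda tau: (-math.sin(tau), math.cos(tau), 0.5)
# Hypothetical example 2: parabola x(τ) = (τ, τ², 0), speed √(1 + 4τ²)
parabola_velocity = lambda tau: (1.0, 2 * tau, 0.0)

print(arc_length(helix_velocity, 2 * math.pi), 2 * math.pi * math.sqrt(1.25))
print(arc_length(parabola_velocity, 1.0), math.sqrt(5) / 2 + math.asinh(2) / 4)
```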

Now, consider a particle’s trajectory x(t). What’s the velocity of this particle? Its speed, surely, is ds/dt: the change in arc length (distance traveled) over time. But velocity is a vector, and needs a direction. Thus we define the velocity v=(dx/ds)⋅(ds/dt). This simplifies to the more obvious definition dx/dt, but allows us to separate out the latter term as speed and the former term as direction. This first term, dx/ds, describes the change in the position given a change in distance traveled. As long as the trajectory of the particle has certain nice mathematical properties (like smoothness), this vector will always be tangent to the trajectory of the particle. Think of this vector like the hood of your car: even though the car can turn, the hood will always point in whatever direction you’re going towards. This vector T ≡ dx/ds is called the unit tangent vector.

We now define two other useful vectors. The normal vector N ≡ (dT/ds) / |dT/ds| is a vector of unit length that always points in whichever direction T is turning (it is defined wherever dT/ds ≠ 0). It is perpendicular to T: since T·T = 1, differentiating gives 2T·(dT/ds) = 0. The binormal vector B is normal to both T and N; it’s defined as B ≡ T × N. So T, N, and B all have unit length and are mutually orthogonal. Since T depends directly on the movement of the particle, N and B do as well; therefore, as the particle moves around, the coordinate system defined by T, N, and B moves with it. The frame is always orthonormal and always maintains certain relationships to the particle’s motion, so it can be useful to make some statements in the context of the TNB frame.

The Frenet-Serret equations, as promised:

- dT/ds = κN

- dN/ds = -κT + τB

- dB/ds = -τN

Here, κ is the curvature and τ is the torsion. Further reading (look up the Darboux vector) shows that κ represents the rate at which the entire TNB frame rotates about the binormal vector B, and τ represents the rate at which it rotates about T. This picture of the trajectory turning and rolling nicely matches what it might feel like to sit in the cockpit of one of these point particles, but it takes this depth of vector analysis to get there.
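For a curve given by an arbitrary parameter t, κ and τ can be computed from the first three derivatives with the standard formulas κ = |x′ × x″| / |x′|³ and τ = (x′ × x″) · x‴ / |x′ × x″|², which follow from the Frenet-Serret equations (they are not derived in this post). As a check, this sketch applies them to a hypothetical helix (a cos t, a sin t, bt), whose curvature a/(a² + b²) and torsion b/(a² + b²) are constant.

```python
import math

def cross(u, v):
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])

def dot(u, v):
    return sum(p * q for p, q in zip(u, v))

def curvature_torsion(d1, d2, d3):
    """Curvature and torsion from the first three derivatives of x(t)."""
    c = cross(d1, d2)
    speed = math.sqrt(dot(d1, d1))
    kappa = math.sqrt(dot(c, c)) / speed**3
    torsion = dot(c, d3) / dot(c, c)
    return kappa, torsion

# Hypothetical helix x(t) = (a cos t, a sin t, b t), evaluated at an arbitrary t
a, b, t = 2.0, 1.0, 0.7
d1 = (-a * math.sin(t), a * math.cos(t), b)
d2 = (-a * math.cos(t), -a * math.sin(t), 0.0)
d3 = (a * math.sin(t), -a * math.cos(t), 0.0)
kappa, tau = curvature_torsion(d1, d2, d3)
print(kappa, a / (a**2 + b**2))  # curvature: numeric vs closed form
print(tau, b / (a**2 + b**2))    # torsion: numeric vs closed form
```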

Bonus points: remember how the velocity is v = vT, where v = ds/dt is the speed? Differentiate this with respect to time, play around with some algebra, and see if you can arrive at the following result: the acceleration a = κv²N + (d²s/dt²)T. Thoughtful consideration will reveal the latter term as the tangential acceleration, and knowing that 1/κ ≡ ρ = “the radius of curvature” reveals that the first term, v²/ρ, is the centripetal acceleration.

—

Photo credit: Salix alba at en.wikipedia


Cosmic Rays

As you are reading this sentence, thousands of cosmic rays are passing through your body. They have been doing so your whole life… But what are they exactly?

The composition of cosmic rays is estimated as follows:

- 89% simple protons

- 10% alpha particles

- Most of the remaining 1% are electrons, positrons, antiprotons, and heavier nuclei (which are abundant end products of nucleosynthesis in stars)

(However, the precise composition of cosmic rays outside the Earth’s atmosphere can vary depending on which part of the energy spectrum is observed.)

There are two types of cosmic rays:

Primary cosmic rays

Primary cosmic rays are thought to form when particles are accelerated by the blast waves of supernova remnants. The particles gain energy by bouncing back and forth in the magnetic fields of the expanding clouds of gas, until they move through space at speeds very close to that of light. Once they reach these speeds (and energies of over 10¹⁸ eV), the supernova remnants are no longer able to contain them, so the cosmic rays escape into the galaxy. The maximum energy cosmic rays can gain depends on the size of the acceleration region and the strength of its magnetic field.
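That last sentence is often made quantitative with the Hillas criterion (a name not used in the post): a particle of charge Ze can only be accelerated while its orbit fits inside the region, which gives the order-of-magnitude limit E_max ≈ Z e β c B R. The inputs below (a proton, a 1 microgauss field, a region 1 parsec across) are illustrative values only, not figures from the post.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s
PARSEC = 3.0857e16              # m

def hillas_max_energy_ev(charge_number, b_tesla, size_m, beta=1.0):
    """Order-of-magnitude Hillas limit E_max ~ Z * e * beta * c * B * R, in eV.

    Converting joules to electronvolts divides by e, which cancels the
    elementary charge, so the result in eV is simply Z * beta * c * B * R.
    """
    return charge_number * beta * SPEED_OF_LIGHT * b_tesla * size_m

# Illustrative inputs only: a proton (Z = 1), 1 microgauss = 1e-10 T, 1 parsec
print(f"{hillas_max_energy_ev(1, 1e-10, PARSEC):.2e} eV")
```

For these inputs the limit is about 10¹⁵ eV; higher energies require a larger region, a stronger field, or more highly charged nuclei, exactly the dependence the paragraph describes.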

Secondary cosmic rays

Primary cosmic rays interact with interstellar matter to produce a second type of cosmic ray. Heavy nuclei (mainly carbon and oxygen), which make up less than 1% of cosmic rays, break up into lighter nuclei (mainly lithium, beryllium, and boron) when they collide with interstellar gas, and later with nuclei in the Earth’s atmosphere and surface, through a process called cosmic ray spallation.


Antiprotonic Helium

Antiprotonic helium consists of an electron and an antiproton orbiting a helium nucleus. The hyperfine structure of this exotic type of matter is studied very closely, using laser spectroscopy, by a CERN experiment called ASACUSA (Atomic Spectroscopy And Collisions Using Slow Antiprotons).

To create antiprotonic helium, antiprotons are mixed with helium gas, where an antiproton can spontaneously knock out one of the two electrons orbiting a helium atom and take its place. However, only about 3% of the antiprotons end up captured this way.

From the time that antiprotonic helium is created, the antiproton remains in orbit around the helium nucleus for only a few microseconds before it falls into the nucleus, causing a proton-antiproton annihilation. Surprisingly, this is the longest lifetime of any antiprotonic atom.

Laser Spectroscopy

ASACUSA physicists use a laser pulse which, if tuned correctly, lets an atom of antiprotonic helium absorb just enough energy for the antiproton to jump from one energy level (aka orbit) to another. This allows physicists to determine the energy difference between orbits. Currently, laser and microwave precision spectroscopy of antiprotonic helium atoms is ASACUSA’s top priority. (This basically means using two laser beams and pulsed microwave beams to further explore the ‘hyperfine energy levels’ of antiprotonic helium.)


What exactly is “redshift”?

Redshift is defined as:

a shift toward longer wavelengths of the spectral lines emitted by a celestial object that is caused by the object moving away from the earth.

If you can understand that, great! But for those of us who cannot, consider the celestial bodies which make up our night sky. Did you think they were still, unchanging, everlasting constants? They may seem to stick around forever, but…

Boy, you were wrong. I’ll have you know that stars are born and, at some point, they die. They move, they change. Have you heard about variable stars? Stars undergo changes, sometimes in their luminosity. (We are, indeed, made of the same stuff as stars).

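So, stars move. All celestial bodies do, actually. You might have heard about some mysterious, elusive thing called dark energy. Dark energy is the name given to whatever is causing the expansion of the universe to speed up. Redshift itself does not depend on dark energy: it happens whenever a light source is moving away from us, or when the expansion of space stretches light on its way to us. In fact, redshift measurements of distant supernovae are part of the evidence that the expansion is accelerating.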

Maybe you can understand redshift by studying a visual:

These are spectral lines from an object. What do you notice is different in the unshifted, “normal” emission lines from the redshifted and blueshifted lines?

The redshifted line is observed as if everything is “shifted” a bit to the right– towards the red end of the spectrum; whereas the blueshifted line is moved to the left towards the bluer end of the spectrum.

Imagine you are standing here on Earth and, many light-years away, a hypothetical “alien” is standing on their planet. With this image in mind, consider a galaxy between the two of you that is moving toward the alien (and therefore away from you). You would then observe redshift (stretched-out wavelengths) and the alien would observe blueshift (shortened wavelengths).
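For a source moving straight along the line of sight at speed v = βc, special relativity gives 1 + z = √((1 + β)/(1 − β)), where z = (λ_observed − λ_emitted)/λ_emitted. The sketch below applies this to the thought experiment with a hypothetical galaxy speed of 0.1c and the hydrogen-alpha line (about 656.3 nm). It covers only the Doppler part of the story: the redshift of very distant galaxies is mostly due to the expansion of space, which this formula does not model.

```python
import math

def relativistic_doppler_z(beta):
    """Redshift z for a source moving along the line of sight at beta = v/c.

    beta > 0: source receding (redshift, z > 0); beta < 0: approaching (blueshift, z < 0).
    """
    return math.sqrt((1 + beta) / (1 - beta)) - 1

def observed_wavelength(emitted_nm, z):
    return emitted_nm * (1 + z)

H_ALPHA_NM = 656.28  # hydrogen-alpha line, nm
beta = 0.1           # hypothetical galaxy speed: 10% of the speed of light

z_you = relativistic_doppler_z(+beta)    # the galaxy moves away from you...
z_alien = relativistic_doppler_z(-beta)  # ...and toward the alien
print(f"you:   z = {z_you:+.4f}, H-alpha seen at {observed_wavelength(H_ALPHA_NM, z_you):.1f} nm")
print(f"alien: z = {z_alien:+.4f}, H-alpha seen at {observed_wavelength(H_ALPHA_NM, z_alien):.1f} nm")
```

For small speeds this reduces to the familiar z ≈ v/c.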

Here, Symmetry Magazine explains redshift in their “Explain it in 60 seconds” series.

A simple, everyday example of this concept can be observed if you stand beside a road. As a loud car drives by, the pitch you hear changes. This is known as the Doppler effect. Watch this quick YouTube video titled “Example of Dopper Shift using car horn”:


Notice how the pitch drops as the car drives past the camera operator.
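The pitch change follows from the classic Doppler formula for a moving source and a stationary listener, f_heard = f · v_sound / (v_sound − v_source), with v_source positive while the car approaches. The horn pitch and car speed below are hypothetical values, and the speed of sound is taken as 343 m/s (air at about 20 °C).

```python
SPEED_OF_SOUND = 343.0  # m/s, air at about 20 °C

def doppler_moving_source(f_source, v_source):
    """Frequency heard by a stationary listener.

    v_source > 0: source approaching the listener; v_source < 0: receding.
    """
    return f_source * SPEED_OF_SOUND / (SPEED_OF_SOUND - v_source)

horn = 440.0  # hypothetical horn pitch, Hz
speed = 25.0  # hypothetical car speed, m/s (90 km/h)
print(f"approaching: {doppler_moving_source(horn, speed):.1f} Hz")
print(f"receding:    {doppler_moving_source(horn, -speed):.1f} Hz")
```

The sudden switch from the higher to the lower frequency as the car passes is the drop in pitch you hear in the video.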

Understanding redshift is important to scientists, especially astronomers and astrophysicists, who must account for it to draw the right conclusions from their observations. Redshift is one of the concepts which helped scientists determine that distant galaxies are moving away from us, and that the expansion of the universe is accelerating.


The Stern-Gerlach Experiment

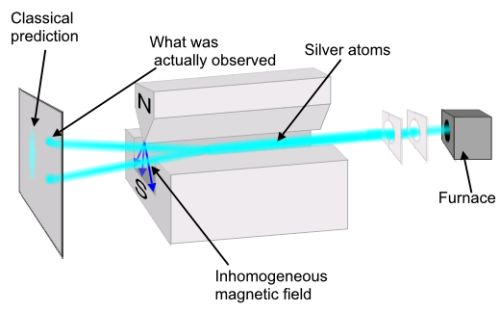

In 1922 at the University of Frankfurt in Frankfurt, Germany, Otto Stern and Walther Gerlach sent a beam of silver atoms through an inhomogeneous magnetic field in their experimental device. They were testing the new idea that angular momentum is quantized; their result was later understood in terms of the quantized spin angular momentum of the electron. If the spin associated with particles could take on only two, or some other discrete number of, states, then the atoms transmitted through the other end of their machine should come out as two (or more) concentrated beams. Meanwhile, if the quantum theory was wrong, classical physics predicted a single smeared-out beam on the detector screen, because the magnetic field would deflect each randomly oriented atom by a different amount on a continuous, rather than discrete, scale.

As you can see above, the beam split in two: the results of the Stern-Gerlach experiment confirmed the quantization of angular momentum, which we now understand as the quantization of spin.

Spin and quantum states

A spin-½ particle actually corresponds to a qubit

|ψ> = c1|ψ↑> + c2|ψ↓>

a wavefunction representing a particle whose quantum state can be seen as a superposition (or linear combination) of two pure states, one for each possible spin along a chosen axis (such as x, y, or z). The silver atoms of Stern and Gerlach’s experiment fit this description because all of a silver atom’s electrons are paired up except for a single outer electron, which has spin ½ and no orbital angular momentum; the atom as a whole therefore behaves like a spin-½ particle (the nucleus’s magnetic moment is far too small to matter here).

Significantly, the constant coefficients c1 and c2 are complex and can’t be directly measured. But their squared moduli |c1|² and |c2|² give the probabilities that a particle in state |ψ> will be observed as spin up or spin down at the detector.

|c1|² + |c2|² = 1: it is certain that the particle will be detected in one of the two spin states.

That means when we pass a large sample of particles in identical quantum states through a Stern-Gerlach (S-G) machine and detector, we are actually measuring the probabilities that the particle will adopt the spin up or spin down states along the particular axis of the S-G machine. This follows the relative-frequency interpretation of probability, where as the number of identical trials grows large the relative frequency of an event approaches the true probability that the event will occur in any one trial.
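A minimal simulation of this relative-frequency idea, assuming a hypothetical state with |c1|² = 0.3 and |c2|² = 0.7: each particle is recorded as “up” with probability |c1|², and the observed fraction settles toward 0.3 as the number of particles grows. Note that the complex phase of c2 has no effect on these counts.

```python
import cmath
import random

def born_probabilities(c1, c2):
    """Return (P(up), P(down)) = (|c1|^2, |c2|^2) for a normalized state."""
    p_up, p_down = abs(c1) ** 2, abs(c2) ** 2
    if abs(p_up + p_down - 1) > 1e-9:
        raise ValueError("state must be normalized: |c1|^2 + |c2|^2 = 1")
    return p_up, p_down

def run_stern_gerlach(c1, c2, n_particles, seed=0):
    """Send n identical particles through an S-G machine; return the relative
    frequency of 'spin up' detections."""
    p_up, _ = born_probabilities(c1, c2)
    rng = random.Random(seed)
    ups = sum(rng.random() < p_up for _ in range(n_particles))
    return ups / n_particles

# Hypothetical state: |c1|^2 = 0.3, |c2|^2 = 0.7, with an arbitrary relative phase
c1 = 0.3 ** 0.5
c2 = 0.7 ** 0.5 * cmath.exp(0.4j)
for n in (10, 1_000, 100_000):
    print(n, run_stern_gerlach(c1, c2, n))
```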

By placing a stop in front of the screen so that either the up or the down beam is allowed to pass while the other is blocked, we are “polarizing” the beam to a certain spin orientation along the S-G machine axis. We can then place one or more S-G machines with stops in the path of that beam and reproduce all the experiments analogous to linear polarization of light.
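The analogy can be made quantitative. For a beam polarized spin up along one axis, the probability of passing a second S-G machine whose axis is tilted by θ is cos²(θ/2), the spin-½ counterpart of Malus’s law cos²θ for polarizers (the angle is halved because “up” and “down” sit 180° apart, while crossed polarizers sit 90° apart). This sketch works through the classic three-machine sequence z, then x, then z; these particular orientations are our choice of example.

```python
import math

def pass_probability(angle_rad):
    """Probability that a spin-1/2 particle prepared 'up' along one axis is
    found 'up' along a second axis tilted by angle_rad: cos^2(angle / 2)."""
    return math.cos(angle_rad / 2) ** 2

# Beam prepared spin up along z (the spin-down beam has been stopped).
print(pass_probability(math.pi))       # a second z machine keeping only "down": ~0

# Insert an x machine in between: +z -> +x is 90°, and +x -> -z is another 90°.
p_x_up = pass_probability(math.pi / 2)
p_z_down_after_x = pass_probability(math.pi / 2)
print(p_x_up * p_z_down_after_x)       # a quarter of the beam now comes out "down"
```

As with three polarizers, inserting the middle machine lets through a state that the outer two alone would block completely.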


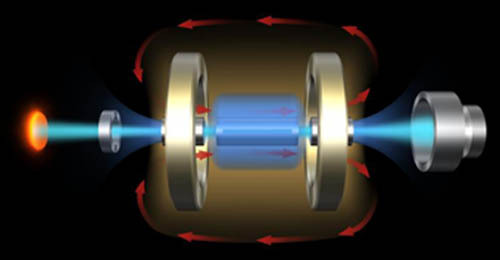

Refrigerated Electron Beam Ion Trap (REBIT)

An ion trap is an experimental physics device that captures ions for use in condensed matter and other experiments. A common design, pictured above, is the electron beam ion trap (EBIT), which uses extremely strong magnetic fields (on the order of teslas) to squeeze a central electron beam into a narrow, dense column. When a gas is released into the chamber, atoms near the beam have their outer electrons knocked off by collisions with the beam’s electrons. The denser and more energetic the electron beam, the more electrons you can strip from each atom.

Refrigerated EBITs (REBITs) use superconducting magnet coils, which allow the magnetic field to become absurdly strong. These devices are capable of producing highly charged ions (HCIs). A REBIT can take xenon gas, for example, and give back Xe³⁴⁺. That’s xenon, a noble gas that loves its electrons, with 34 of its 54 usual electrons ripped off! Ions like this carry an enormous amount of stored energy, and surface physics experiments often investigate how they interact with metallic surfaces. These very angry HCIs can create microscopic craters in a previously clean surface.

Besides surface physics, HCIs are found in several astrophysical systems, including powerful cosmic phenomena like stellar coronae and the accretion disks of quasars. This makes REBIT facilities one of the rare places where scientists can perform experimental astrophysics, by generating and experimenting with highly charged plasmas in the lab.


The Weak Interaction

Amongst the four fundamental forces in nature, the weak interaction (aka the weak force or weak nuclear force) is probably the least familiar.

The weak force is responsible for beta decay (a common form of radioactive decay) and for the first step of hydrogen fusion in stars like the Sun. In our current understanding of the Standard Model, the weak interaction itself is caused by the emission or absorption of W and Z bosons. Beta decay is the most familiar example.

The emission or absorption of W bosons changes the flavor of quarks (i.e. turns one type of quark into another) inside hadrons, and therefore transforms the hadrons themselves. For example, beta decay allows a neutron to transform into a proton. A neutron is made up of two down quarks and one up quark, so one down quark emits a W⁻ boson and turns into an up quark, giving a proton (two up quarks and one down quark). At the end of the process, the W⁻ boson decays into an electron and an electron antineutrino.
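The quark bookkeeping in this paragraph can be checked by adding up electric charges, using the standard quark charges +2/3 e (up) and −1/3 e (down). This is only a charge-conservation sketch with exact fractions, not a model of the decay itself; the particle labels are our own.

```python
from fractions import Fraction

# Electric charges in units of the proton charge e
CHARGE = {
    "u": Fraction(2, 3), "d": Fraction(-1, 3),
    "W-": Fraction(-1), "e-": Fraction(-1), "anti-nu_e": Fraction(0),
}

def total_charge(particles):
    return sum((CHARGE[p] for p in particles), Fraction(0))

neutron = ["u", "d", "d"]
proton = ["u", "u", "d"]

print("neutron:", total_charge(neutron), " proton:", total_charge(proton))
# Step 1: d -> u + W-    Step 2: W- -> e- + anti-nu_e
print("d -> u + W-:", total_charge(["d"]), "->", total_charge(["u", "W-"]))
print("W- -> e- + anti-nu_e:", total_charge(["W-"]), "->", total_charge(["e-", "anti-nu_e"]))
```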

It is called the weak force because its strength is several orders of magnitude less than that of both electromagnetism and the strong interaction (gravity, however, is the weakest of the four forces). The weak interaction is extremely short-ranged (≈ 2 × 10⁻³ fm) because the intermediate vector bosons (W and Z) are very massive: about 80 and 91 GeV/c² respectively, nearly a hundred times the mass of a neutron.
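The link between mass and range can be checked with a back-of-the-envelope estimate: a force carried by a boson of mass M reaches roughly its reduced Compton wavelength, ħ/(Mc) = ħc/(Mc²). This sketch plugs in standard reference values for ħc and the particle masses (not taken from the post).

```python
HBAR_C_MEV_FM = 197.327  # ħc in MeV·fm
MASSES_MEV = {"W": 80_379.0, "Z": 91_187.6, "neutron": 939.565}  # rest energies, MeV

def yukawa_range_fm(mass_mev):
    """Rough range ħ/(Mc) = ħc/(Mc²) of a force carried by a boson of mass M."""
    return HBAR_C_MEV_FM / mass_mev

for boson in ("W", "Z"):
    ratio = MASSES_MEV[boson] / MASSES_MEV["neutron"]
    print(f"{boson}: range ≈ {yukawa_range_fm(MASSES_MEV[boson]):.1e} fm, "
          f"mass ≈ {ratio:.0f} neutron masses")
```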

Unifying Fundamental Forces

Electromagnetism and the weak force are now considered to be two aspects of a single electroweak interaction. This was an important step toward the unification of the four fundamental forces.


Quark-Gluon Plasma

First of all… What are quarks and gluons?

Quarks are tiny subatomic particles that make up the nucleons (protons & neutrons) of everyday matter as well as other hadrons. Gluons are massless force-carrying particles which are necessary to bind quarks together (by the strong force/interaction) so that they can form hadrons.

QGP (Quark-Gluon Plasma)

A tiny fraction of a second after the Big Bang, the universe is thought to have consisted of an inconceivably hot and dense quark-gluon plasma. QGP exists at such high temperatures (about 4 trillion kelvin, roughly 250,000 times hotter than the Sun’s interior) that the quarks and gluons are almost free from colour confinement (in other words, they do not group themselves to form hadrons). QGP does not behave as an ideal gas of free quarks and gluons; instead, it acts like an almost perfect dense fluid.
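To get a feel for these numbers, this sketch converts 4 trillion kelvin into a thermal energy k_B·T and compares the temperature with the Sun’s core, which we assume to be about 15.7 million kelvin (a standard estimate, not a figure from the post). The result, a few hundred MeV, is about a third of a proton’s rest energy (938 MeV), which hints at why hadrons cannot hold together at such temperatures.

```python
BOLTZMANN_EV_PER_K = 8.617333e-5  # Boltzmann constant k_B in eV/K

qgp_temperature_k = 4e12  # "about 4 trillion kelvin" (from the text)
sun_core_k = 1.57e7       # ASSUMED Sun core temperature, about 15.7 million K

thermal_energy_mev = BOLTZMANN_EV_PER_K * qgp_temperature_k / 1e6
ratio = qgp_temperature_k / sun_core_k
print(f"k_B T ≈ {thermal_energy_mev:.0f} MeV")
print(f"ratio to the Sun's core ≈ {ratio:,.0f}")
```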

It then took only a few microseconds for the universe to cool enough that quarks and gluons could bind together into hadrons, including nucleons.

RHIC & ALICE

To study the properties of the early universe, physicists have created accelerators that essentially recreate quark-gluon plasma. The Relativistic Heavy Ion Collider (RHIC) at Brookhaven National Laboratory (in Upton, NY) collides heavy ions (mainly gold) at relativistic speeds. This accelerator has a circumference of about 2.4 miles in which two beams of gold ions travel (one travels clockwise, and the other anticlockwise) and collide. The resulting energy from the collision allows for the recreation of this mysterious primordial form of matter.

ALICE (A Large Ion Collider Experiment), one of the detectors at CERN’s Large Hadron Collider near Geneva, Switzerland, also studies QGP. However, instead of gold, these collisions use lead ions.


Fractal Geometry: An Artistic Side of Infinity

Fractal geometry is beautiful. Clothes are designed from it, and you can find fractal calendars for your house. There’s just something about that endlessly repeating pattern that intrigues the eye, and the brain.

Fractals are “geometric shapes which can be split into parts which are a reduced-size copy of the whole” (source: wikipedia). They demonstrate a property called self-similarity, in which parts of the figure are similar to the greater picture. In theory, a fractal can be magnified indefinitely and keep revealing self-similar detail.

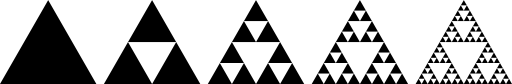

One simple fractal which can easily display self-similarity is the Sierpinski Triangle. You can look at the creation of such a fractal:

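What do you notice? Each new triangle is similar to the original: they are all equilateral triangles, each with half the side length of the triangle it came from. And what about the area? Each new triangle has a quarter of the area of the triangle it came from, and since only three of the four pieces are kept, the total area shrinks to three quarters at every step. This pattern repeats again, and again.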
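The halving and quartering can be checked by carrying out the construction directly. This sketch represents each triangle by its three corner points, replaces every triangle with its three corner sub-triangles (dropping the middle one), and tracks how the count and total area change; the starting triangle is an arbitrary choice.

```python
import math

def midpoint(p, q):
    return ((p[0] + q[0]) / 2, (p[1] + q[1]) / 2)

def subdivide(tri):
    """One Sierpinski step: keep the three corner triangles of half the side
    length and leave out the middle one."""
    a, b, c = tri
    ab, bc, ca = midpoint(a, b), midpoint(b, c), midpoint(c, a)
    return [(a, ab, ca), (ab, b, bc), (ca, bc, c)]

def area(tri):
    (x1, y1), (x2, y2), (x3, y3) = tri
    return abs((x2 - x1) * (y3 - y1) - (x3 - x1) * (y2 - y1)) / 2

start = ((0.0, 0.0), (1.0, 0.0), (0.5, math.sqrt(3) / 2))
triangles = [start]
for step in range(1, 7):
    triangles = [t for tri in triangles for t in subdivide(tri)]
    total = sum(area(t) for t in triangles)
    print(step, len(triangles), round(total / area(start), 6))  # count, area fraction
```

After n steps there are 3ⁿ triangles covering (3/4)ⁿ of the original area, so the area shrinks toward zero even as the pattern becomes infinitely detailed.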

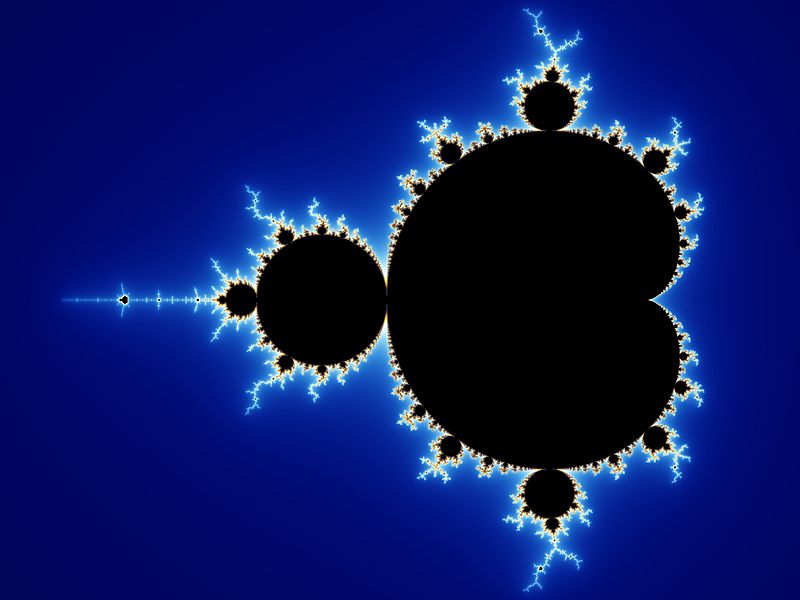

Two other famous fractals are the Koch Snowflake and the Mandelbrot Set.

The Koch Snowflake looks like:

(source: wikipedia)


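It is constructed by dividing each side of an equilateral triangle into thirds and replacing the middle third with the other two sides of a smaller, outward-pointing equilateral triangle; the same step is then repeated on every new side. You can determine the area of a Koch Snowflake by following this link.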
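Counting triangles gives the area and perimeter without any geometry software. At step k, every existing side sprouts one new triangle whose side is 1/3ᵏ of the original, so its area is 1/9ᵏ of the original triangle’s. This sketch (our own, using exact fractions) shows the perimeter growing without bound while the area approaches 8/5 of the starting triangle.

```python
from fractions import Fraction

def koch_ratios(iterations):
    """Return (area, perimeter) of the snowflake after `iterations` steps,
    each as a multiple of the starting triangle's area and perimeter."""
    area, sides = Fraction(1), 3
    for k in range(1, iterations + 1):
        # every existing side sprouts one new triangle with 1/3^k the original
        # side length, hence 1/9^k the original area
        area += sides * Fraction(1, 9**k)
        sides *= 4  # each side becomes four sides, each a third as long
    perimeter = Fraction(sides, 3) * Fraction(1, 3**iterations)
    return area, perimeter

for n in (0, 1, 2, 5, 20):
    a, p = koch_ratios(n)
    print(n, float(a), float(p))
```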

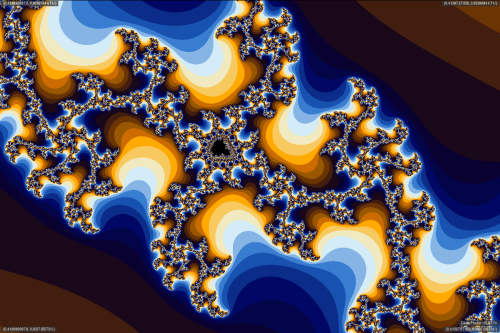

The Mandelbrot set…

… is:

the set of values of c in the complex plane for which the orbit of 0 under iteration of the complex quadratic polynomial zₙ₊₁ = zₙ² + c remains bounded. (source: wikipedia)
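In practice, membership is tested with the escape-time method: iterate z → z² + c from z = 0, and declare c outside the set as soon as |z| exceeds 2 (past that point the orbit is guaranteed to run off to infinity). Points that survive a fixed number of iterations are treated as members, which is an approximation; the iteration cap and the coarse grid below are arbitrary choices.

```python
def in_mandelbrot(c, max_iter=200):
    """Escape-time test: iterate z -> z*z + c from z = 0. If |z| ever exceeds 2,
    the orbit is unbounded and c is not in the set; if it survives max_iter
    steps, c is treated as (probably) in the set."""
    z = 0j
    for _ in range(max_iter):
        z = z * z + c
        if abs(z) > 2:
            return False
    return True

# Coarse text rendering: real part from -2 to 0.5, imaginary part from 1 to -1
for im in range(10, -11, -2):
    print("".join("#" if in_mandelbrot(complex(re / 20, im / 10)) else "."
                  for re in range(-40, 11)))
```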

It is a popular fractal named after Benoît Mandelbrot. More on creating a Mandelbrot set is found here, as well as additional information.

You can create your own fractals with this fractal generator.

But what makes fractals extraordinary?

Fractals are not simply theoretical creations. They exist as patterns in nature! Trees and forests show them, and so do clouds and interstellar gas!

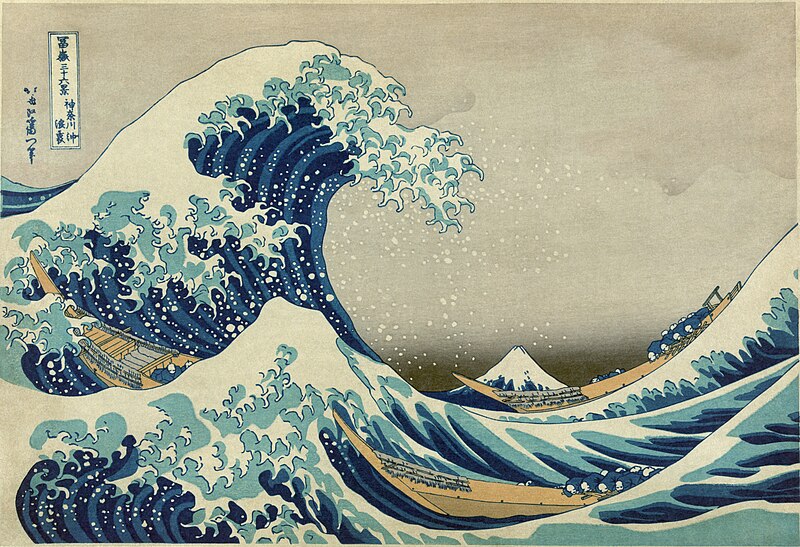

Artists are fascinated by them, as well. Consider The Great Wave off Kanagawa by Katsushika Hokusai:

Even computer graphics artists use fractals to create mountains or ocean waves. You can watch Nova’s episode Hunting the Hidden Dimension for more information.


![What exactly is “redshift”?](https://64.media.tumblr.com/tumblr_lqedrn3vaN1qmyxvuo1_500.jpg)